In the world of software development, containerization has emerged as a transformative technology, enhancing how applications are developed, deployed, and managed. This guide will introduce you to the basics of containers, their benefits, and the leading tools that make containerization a cornerstone of modern software practices.

What is a Container?

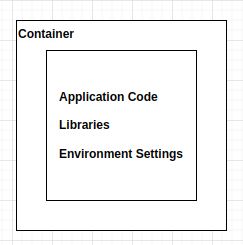

A container is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and configuration files. Containers are isolated from one another and the host system, providing a consistent operational environment regardless of where they are deployed.

A container is like a little box that holds everything an application needs to run properly. This includes the application itself, along with any code, libraries, and settings it needs. The idea is that by packing everything together, the application will run the same way, no matter where you put it—whether on a developer's laptop, a testing environment, or a live server.

A container shares the operating system kernel of the host machine but remains isolated from other containers and from the rest of the host. It behaves like a small, self-contained computer inside a larger one. This setup helps avoid the familiar problem where an application works on one person's machine but not on another's, because everything it needs is included in its container.
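To make the "box" idea concrete, here is a minimal sketch in Python that renders a Dockerfile, the recipe Docker uses to build a container image. The base image `python:3.12-slim`, the file names `app.py` and `requirements.txt`, and the helper `render_dockerfile` are illustrative assumptions, not part of any particular project.

```python
def render_dockerfile(base_image: str, entrypoint: str) -> str:
    """Return Dockerfile text that packages a small Python app (hypothetical layout)."""
    lines = [
        f"FROM {base_image}",                                  # runtime and system libraries
        "WORKDIR /app",                                        # working directory inside the image
        "COPY requirements.txt .",                             # dependency list (assumed to exist)
        "RUN pip install --no-cache-dir -r requirements.txt",  # install the libraries
        "COPY . .",                                            # application code and config files
        f'CMD ["python", "{entrypoint}"]',                     # command run when the container starts
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the Dockerfile so it can be inspected or redirected to a file.
    print(render_dockerfile("python:3.12-slim", "app.py"), end="")
```

Each instruction maps to one item from the description above: `FROM` supplies the runtime, `RUN` installs the libraries, and `COPY` adds the code and settings. Saving the output as `Dockerfile` and running `docker build -t my-app .` in the same directory would build an image from it, where `my-app` is a placeholder name.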

Benefits of Containerization

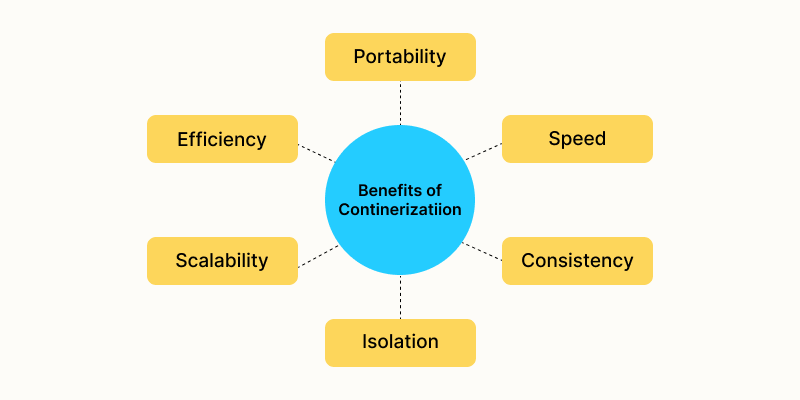

Containerization is a technology that helps developers package their applications along with all the necessary components such as libraries and other dependencies in a single package, known as a container. This method simplifies and speeds up the setup and delivery of software across different computing environments, from a developer’s local machine to a large-scale cloud setup.

1. Portability: Once you've packed an application and its requirements into a container, you can run it almost anywhere—different computers, servers, or cloud environments—without worrying about issues like incompatible software versions.

2. Efficiency: Containers use system resources such as CPU and memory more effectively than virtual machines. They start faster and use less disk space because they share the host's operating system kernel instead of each running a full guest operating system.

3. Scalability: Containers make it easy to scale applications up or down quickly. You can swiftly add more containers when demand is high and remove them when it's low, which is great for handling varying loads.

4. Isolation: Each container runs independently of the others, which reduces the risk of one application affecting another. If one container fails, the others generally keep running, which makes the whole system more stable and secure.

5. Consistency: Containers provide a consistent environment for applications from development through testing to production. This consistency eliminates the common problem of an application working on a developer's machine but not in production.

6. Speed: Containers are faster than VMs because they are isolated environments that share a single kernel, so they consume fewer resources. A container can start in seconds, while a VM needs more time because it has to boot its own operating system.
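The scalability point can be sketched with a little arithmetic. The function below follows the proportional rule that Kubernetes documents for its Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)). It is a simplified illustration, not the autoscaler itself: the name `desired_replicas` and the default bounds are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Estimate how many container replicas are needed to reach a target load per replica."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Scale the replica count in proportion to how far the load is from the target.
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds (hypothetical defaults) so scaling stays predictable.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, current_load=90, target_load=60))  # load above target: scale up
    print(desired_replicas(4, current_load=15, target_load=60))  # load below target: scale down
```

With four replicas running at 90% load against a 60% target, the rule asks for six replicas. When load drops to 15%, it asks for one.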

Why are containers important?

Containers are crucial in modern software development and deployment because they simplify and streamline various processes. By packaging an application with all its necessary components, containers ensure that it works consistently across different computing environments. This uniformity eliminates the common issue where software runs on a developer's machine but fails in production.

Containers also boost efficiency by allowing developers to quickly set up and tear down environments, speeding up both development and testing cycles. They use resources more efficiently than traditional virtual machines while still keeping applications isolated from one another. This isolation means that if one container is compromised, the problem is less likely to affect others.

Additionally, containers are easily scalable, making them ideal for businesses that experience fluctuating demands. Their flexibility and efficiency make containers a fundamental technology for companies aiming to maintain agility and competitiveness in the tech-driven market.

What are the top container images?

Top container images typically refer to the most commonly used base images that serve as the foundation for building containers. These images are often provided and maintained by open-source projects or companies and are popular due to their reliability, community support, and ease of use. Here are some of the most popular container images:

Alpine Linux: Known for its simplicity and small size, Alpine Linux is a popular choice for those looking to minimize their container's footprint. It's often used in situations where resources are limited or speed is a priority.

Ubuntu: One of the most popular Linux distributions, Ubuntu is favored for its ease of use and robust community support. It provides a well-tested and secure environment, making it a common choice for many developers.

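CentOS: This is a community-supported distribution derived from sources freely provided to the public by Red Hat. CentOS has traditionally been chosen for environments where stability and enterprise-readiness are paramount. CentOS Linux has since reached end of life (CentOS Linux 8 in December 2021 and CentOS Linux 7 in June 2024), so new images are better built on a maintained distribution.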

Debian: Known for its stability and security, Debian is another popular base image for containers. It's used in many server environments worldwide and supports a wide range of applications.

Fedora: Fedora is known for innovation and features the latest software and updates. Containers based on Fedora are a good choice for those who need the most current features.

Nginx: Often used as a web server or reverse proxy, the Nginx container image is lightweight and high-performance, suitable for hosting websites or serving as a frontend proxy.

Node.js: This image is tailored for running Node.js applications and is commonly used in web development environments.

MySQL and PostgreSQL: These images are widely used for database services. MySQL and PostgreSQL containers are popular for deploying database instances quickly and efficiently.
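Images such as the ones above are usually referenced by a name and an optional tag, for example `postgres:16`. When no tag is given, Docker falls back to `latest`. The sketch below shows that convention in Python. The helper `parse_image_ref` is hypothetical and deliberately simplified: it ignores digest references of the form `name@sha256:...`.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into repository and tag, defaulting the tag to 'latest'."""
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    # A colon marks a tag only when it comes after the last slash; an earlier
    # colon belongs to a registry host and port, as in localhost:5000/app.
    if colon > last_slash:
        return ImageRef(ref[:colon], ref[colon + 1:])
    return ImageRef(ref, "latest")


if __name__ == "__main__":
    for ref in ("alpine", "postgres:16", "localhost:5000/app"):
        print(ref, "->", parse_image_ref(ref))
```

Pinning an explicit tag instead of relying on `latest` keeps builds reproducible, which supports the consistency benefit described earlier.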

What are the primary tools for container technologies?

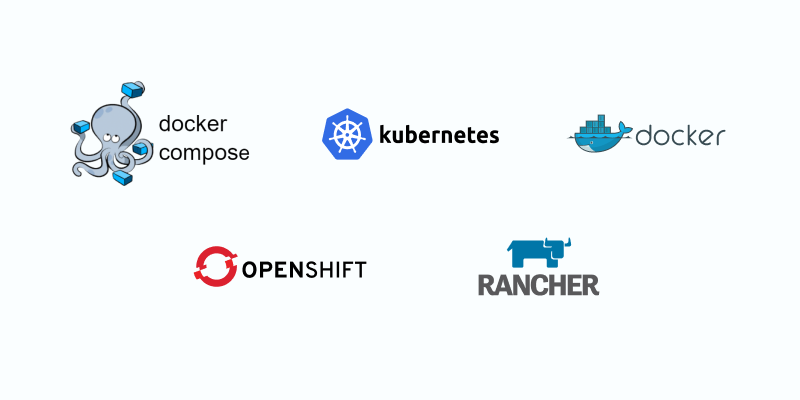

When it comes to container technologies, there are a few key tools that help manage and orchestrate containers effectively. These tools are essential for creating, running, and managing containers across different environments:

Docker: Docker is the most popular tool for creating and managing containers. It allows developers to package an application and its dependencies into a container, which can then be easily shared and run anywhere Docker is supported. Docker simplifies the setup and provides a consistent environment for applications.

Kubernetes: Once you have containers, you might need to manage many of them, especially in large-scale operations. Kubernetes is a powerful system designed for automating the deployment, scaling, and management of containerized applications. It helps organize containers into groups and manages them across multiple machines.

Docker Compose: This tool is used for defining and running multi-container Docker applications. With Docker Compose, you can configure all of your application’s services, networks, and volumes in a single file, then spin everything up with just one command. It’s particularly useful for development and testing environments.

Rancher: Rancher is an open-source platform that provides a complete container management solution. It simplifies the deployment of Kubernetes and manages Docker containers across multiple hosts, providing a more accessible interface for managing containers.

OpenShift: Developed by Red Hat, OpenShift is a Kubernetes-based container platform that offers developer- and operations-centric tools. It supports applications built with Docker and Kubernetes and provides additional features such as source-to-image (S2I) builds, which automate the process of building container images from source code.
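To illustrate the "single file" idea behind Docker Compose described above, the following Python sketch builds a two-service configuration and prints it as JSON. The service names `web` and `db`, the image tags, the port mapping, and the password value are illustrative assumptions. The keys (`services`, `image`, `ports`, `depends_on`, `environment`, `volumes`) are standard Compose file keys.

```python
import json


def build_compose_config() -> dict:
    """Return a Compose-style configuration with a web server and a database (example values)."""
    return {
        "services": {
            "web": {
                "image": "nginx:alpine",
                "ports": ["8080:80"],      # host port 8080 -> container port 80
                "depends_on": ["db"],      # start the database service first
            },
            "db": {
                "image": "postgres:16",
                "environment": {"POSTGRES_PASSWORD": "example"},  # placeholder secret
                "volumes": ["db-data:/var/lib/postgresql/data"],  # persist database files
            },
        },
        "volumes": {"db-data": {}},        # named volume declared at the top level
    }


if __name__ == "__main__":
    print(json.dumps(build_compose_config(), indent=2))
```

Compose files are normally written in YAML. Because JSON is valid YAML, the printed output should be usable as a Compose file as well, for example by saving it as `compose.json` and passing it with `docker compose -f compose.json up`, though a hand-written YAML file is the more common choice.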

Containers vs. Virtual Machines (VMs)

Recap

To sum up what we've discussed, containerization packs applications into portable units, which makes software easier and more flexible to develop and deploy. This approach is a strong alternative to heavier, more expensive setups such as running each application in its own virtual machine.

The main point to remember is that while the specific advantages of using containerization can differ depending on the organization, anyone using this technology can expect a smoother and simpler way to develop applications. This makes it an appealing choice for many developers and companies looking to enhance their software development process.