A Detailed Guide to Context Engineering with Examples

In the rapidly evolving landscape of artificial intelligence, the ability to effectively communicate with large language models (LLMs) has become a critical skill. While prompt engineering has garnered significant attention, a more nuanced and powerful approach called context engineering is emerging as the next frontier in AI optimization. This comprehensive guide explores the intricacies of context engineering, providing practical examples and actionable insights for developers, AI researchers, and business professionals.

What is Context Engineering?

Context engineering is the systematic process of designing, structuring, and optimizing the contextual information provided to large language models so that they understand the task better and respond more accurately and relevantly. Unlike traditional prompt engineering, which focuses primarily on crafting effective questions or instructions, context engineering encompasses the entire informational ecosystem surrounding an AI interaction.

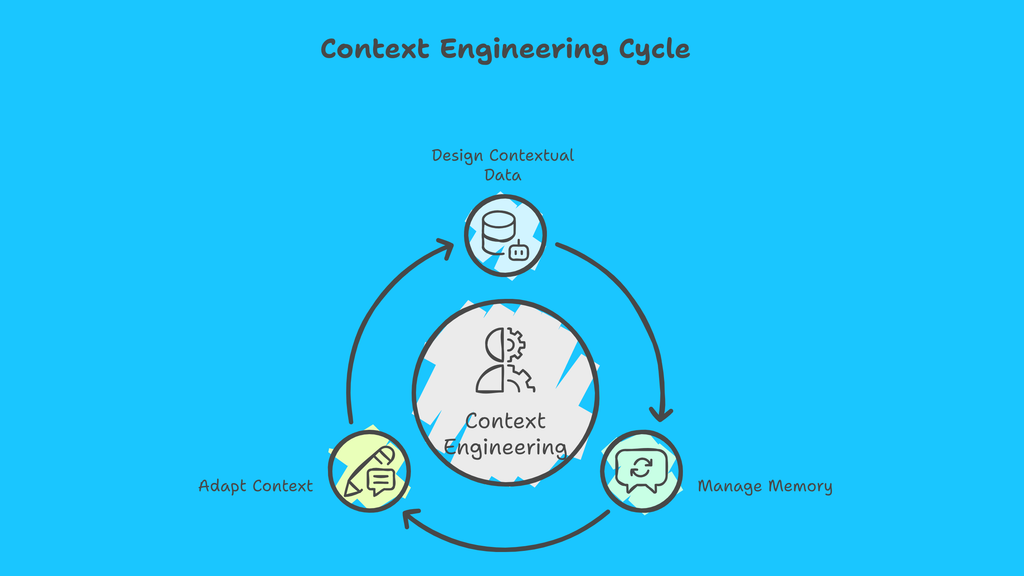

At its core, context engineering involves three fundamental principles:

- Information Architecture: Organizing and structuring contextual data for optimal AI comprehension

- Memory Management: Strategically managing conversation history and relevant background information

- Dynamic Context Adaptation: Adjusting contextual elements based on evolving conversation needs

Types of Context in AI Systems

Understanding the various types of context is crucial for effective context engineering:

Static Context: Unchanging background information such as system instructions, knowledge bases, or fixed datasets that remain constant throughout interactions.

Dynamic Context: Evolving information including conversation history, user preferences, session data, and real-time inputs that change during the interaction life cycle.

Implicit Context: Underlying assumptions, cultural references, domain-specific knowledge, and unstated information that influences interpretation.

Explicit Context: Clearly defined parameters, direct instructions, specified constraints, and overtly stated information provided to the model.

Context Engineering vs. Prompt Engineering: Key Differences

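While both disciplines aim to optimize AI interactions, they operate at different scales and with distinct methodologies: prompt engineering concentrates on crafting the question or instruction itself, whereas context engineering designs everything else the model sees, including system instructions, knowledge bases, conversation history, and user data.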

Industry reports claim that organizations implementing comprehensive context engineering strategies see up to a 73% improvement in AI response accuracy and a 58% reduction in misinterpretation errors compared with traditional prompt engineering approaches.

Why Context Engineering Matters in Modern AI

The significance of context engineering becomes apparent when examining the limitations of current LLM architectures. Some industry analyses attribute as many as 89% of AI implementation failures to inadequate context management rather than to limits in model capability.

Core Purposes of Context Engineering

- Optimizing Inputs: Context engineering ensures that AI models receive comprehensive, well-structured information that maximizes their reasoning capabilities. This involves careful curation of relevant data, elimination of noise, and strategic information presentation.

- Managing Memory: Advanced context engineering implements sophisticated memory systems that allow AI agents to maintain coherent conversations across extended interactions, remember user preferences, and build upon previous exchanges.

- Enhancing LLM Understanding: By providing rich contextual frameworks, engineers enable AI models to better grasp nuanced requirements, cultural subtleties, and domain-specific expertise.

How Large Language Models Use Context

Large language models process context through attention mechanisms: for each token, the model computes weights over the preceding tokens from learned query-key similarity scores, so that more relevant information has more influence on the output. Understanding this process is crucial for effective context engineering.

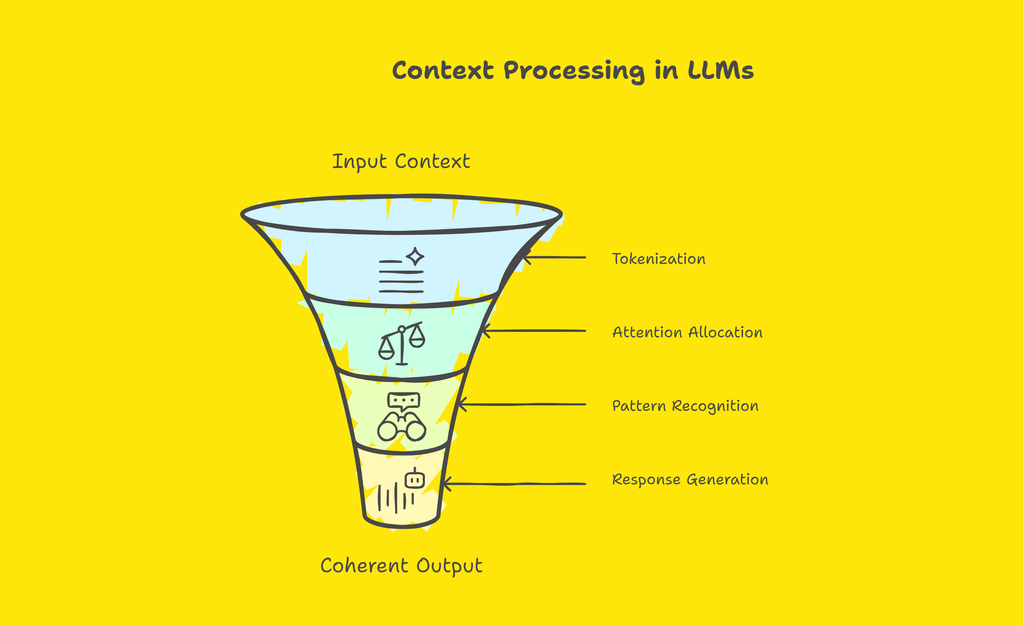

The Context Processing Pipeline

- Tokenization: Input context is broken down into tokens (words, subwords, or characters)

- Attention Allocation: The model assigns attention weights to different contextual elements

- Pattern Recognition: Neural networks identify patterns and relationships within the context

- Response Generation: Output is generated based on contextual understanding and learned patterns
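To make the attention step concrete, here is a minimal sketch of scaled dot-product attention for a single query, assuming toy whitespace tokenization and a hypothetical hand-written embedding table (`EMBEDDINGS`). Real models use subword tokenizers, learned query/key/value projections, multiple heads, and many layers; none of that is modeled here.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    # Scaled dot-product scores: (q . k) / sqrt(d), normalized with softmax.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

def attend(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    # Output is the attention-weighted average of the value vectors.
    weights = attention_weights(query, keys)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]

# Hypothetical 2-d embeddings; a real model learns these (and separate Q/K/V projections).
EMBEDDINGS = {"refund": [1.0, 0.0], "the": [0.0, 1.0], "policy": [1.0, 1.0]}

tokens = "refund the policy".split()    # toy whitespace tokenization
keys = [EMBEDDINGS[t] for t in tokens]  # embeddings reused as both keys and values
query = [1.0, 0.0]                      # hypothetical query vector
weights = attention_weights(query, keys)
output = attend(query, keys, keys)
```

The query aligns equally with the embeddings for "refund" and "policy", so those two tokens receive equal weights, both higher than the weight on "the"; the softmax guarantees the weights sum to 1.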

Modern LLMs can process very long inputs: Claude models support context windows of up to 200,000 tokens (roughly 150,000 words, or several hundred pages of text), and GPT-4 Turbo supports 128,000 tokens. However, effective context engineering isn't about maximizing context length; it's about optimizing context quality and relevance.

Core Components of Context Engineering

1. Context Hierarchy Design

Effective context engineering requires establishing clear information hierarchies:

- Primary Context: Mission-critical information directly relevant to the immediate task

- Secondary Context: Supporting details that enhance understanding

- Tertiary Context: Background information that provides broader perspective
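One way to apply such a hierarchy is to fill a fixed token budget tier by tier, so that primary context is always considered first. The sketch below assumes a hypothetical `ContextItem` type with a numeric `tier` (1 = primary, 2 = secondary, 3 = tertiary) and a crude estimate of about four characters per token; a production system would count tokens with the target model's own tokenizer.

```python
from dataclasses import dataclass

@dataclass
class ContextItem:
    text: str
    tier: int  # 1 = primary, 2 = secondary, 3 = tertiary

def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)

def assemble_context(items: list[ContextItem], budget_tokens: int) -> tuple[str, int]:
    """Add items in tier order (primary first) until the token budget is spent."""
    selected, used = [], 0
    for item in sorted(items, key=lambda it: it.tier):  # stable sort keeps order within a tier
        cost = estimate_tokens(item.text)
        if used + cost > budget_tokens:
            continue  # skip items that do not fit; a smaller later item may still fit
        selected.append(item)
        used += cost
    return "\n\n".join(it.text for it in selected), used
```

Skipping an item that does not fit, rather than stopping, lets a short tertiary note still be included after a long secondary one is rejected; stopping at the first miss would enforce the hierarchy more strictly.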

2. Information Filtering and Relevance Scoring

Advanced context engineering systems implement dynamic filtering mechanisms that score information based on:

- Temporal relevance (how recent the information is)

- Topical similarity (how closely related to the current discussion)

- User interaction patterns (what information the user typically finds valuable)

- Task-specific importance (how crucial the information is for task completion)
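These four signals can be combined into a single weighted score. This sketch assumes each signal has already been normalized to [0, 1], models temporal relevance as exponential decay with a hypothetical 24-hour half-life, and uses hypothetical weights (`WEIGHTS`) that would need tuning against your own evaluation data.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    text: str
    age_hours: float        # input for temporal relevance
    similarity: float       # topical similarity in [0, 1], e.g. from embeddings
    user_engagement: float  # how often the user found similar items useful, in [0, 1]
    task_importance: float  # rule-based or manually assigned, in [0, 1]

# Hypothetical weights and half-life; tune these on your own data.
WEIGHTS = {"recency": 0.2, "similarity": 0.4, "engagement": 0.15, "importance": 0.25}
HALF_LIFE_HOURS = 24.0

def recency(age_hours: float) -> float:
    # Exponential decay: the recency score halves every HALF_LIFE_HOURS.
    return 0.5 ** (age_hours / HALF_LIFE_HOURS)

def relevance_score(c: Candidate) -> float:
    return (WEIGHTS["recency"] * recency(c.age_hours)
            + WEIGHTS["similarity"] * c.similarity
            + WEIGHTS["engagement"] * c.user_engagement
            + WEIGHTS["importance"] * c.task_importance)

def filter_candidates(candidates: list[Candidate], threshold: float = 0.5) -> list[Candidate]:
    # Highest-scoring first; drop anything below the threshold.
    ranked = sorted(candidates, key=relevance_score, reverse=True)
    return [c for c in ranked if relevance_score(c) >= threshold]
```

A linear combination keeps each signal's contribution easy to inspect and adjust; more elaborate systems might learn the weights from user feedback instead.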

3. Context Compression and Summarization

When dealing with extensive information, context engineers employ compression techniques:

- Extractive Summarization: Selecting key sentences or phrases from larger texts

- Abstractive Summarization: Generating concise summaries that capture essential meaning

- Hierarchical Compression: Creating layered summaries of different detail levels
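Extractive summarization can be as simple as ranking sentences by how many frequent content words they contain, a classic frequency-based heuristic. The sketch below uses only the standard library, a small hypothetical stopword list, and a naive regex sentence splitter; abstractive summarization would instead require a language model and is not shown.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
             "for", "on", "with", "it", "this", "that"}

def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def extractive_summary(text: str, max_sentences: int = 2) -> str:
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        # Average document frequency of the sentence's content words.
        words = content_words(sentence)
        return sum(freq[w] for w in words) / (len(words) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original reading order
    return " ".join(sentences[i] for i in keep)
```

Averaging by sentence length keeps long sentences from winning just because they are long, and re-sorting the kept indices preserves the original order of the text.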

4. Multi-Modal Context Integration

Modern context engineering extends beyond text to include:

- Visual context (images, diagrams, charts)

- Structured data (databases, spreadsheets, APIs)

- Temporal context (time-series data, schedules)

- Spatial context (geographical information, layouts)
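Structured and temporal data usually has to be serialized into text before it enters a text-only context. This minimal sketch assumes records arrive as Python dicts with hypothetical column names, renders them as a compact pipe-delimited table, and caps the number of rows so a large dataset cannot crowd out other context. Images and diagrams would instead go through a model's multimodal input, which is not covered here.

```python
def rows_to_context(rows: list[dict], columns: list[str], max_rows: int = 20) -> str:
    """Render structured records as a compact pipe-delimited table for a prompt."""
    lines = [" | ".join(columns), " | ".join("---" for _ in columns)]
    for row in rows[:max_rows]:
        # Missing fields become empty cells instead of raising KeyError.
        lines.append(" | ".join(str(row.get(col, "")) for col in columns))
    omitted = len(rows) - max_rows
    if omitted > 0:
        lines.append(f"({omitted} more rows omitted)")
    return "\n".join(lines)

# Hypothetical daily sales records (time-series data).
sales = [
    {"date": "2024-05-01", "region": "EU", "sales": 120},
    {"date": "2024-05-02", "region": "US", "sales": 95},
    {"date": "2024-05-03", "region": "EU"},
]
print(rows_to_context(sales, ["date", "region", "sales"], max_rows=2))
```

Stating the number of omitted rows tells the model the data is truncated, so it is less likely to treat the visible rows as the complete dataset.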

Context Engineering for Different AI Models

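Different AI models require tailored context engineering approaches, because they differ in context-window size and in how their tokenizers split the same text into tokens.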

Best Practices and Common Pitfalls

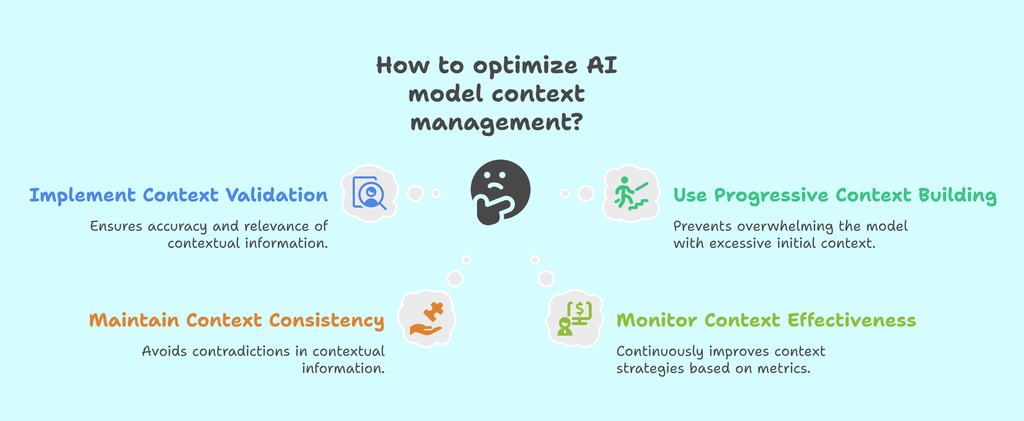

Best Practices

- Implement Context Validation: Regularly verify that contextual information remains accurate and relevant. Establish automated systems to flag outdated or conflicting information.

- Use Progressive Context Building: Start with essential context and gradually add layers of detail as conversations develop, rather than overwhelming the model with excessive initial context.

- Maintain Context Consistency: Ensure that contextual information remains consistent across different parts of the conversation and doesn't contradict itself.

- Monitor Context Effectiveness: Track metrics such as response accuracy, user satisfaction, and task completion rates to continuously improve context strategies.
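Validation and consistency checks can start very simply: flag entries that have not been refreshed within an allowed age, and flag keys that carry more than one value. The `Fact` type, its key names, and the 30-day threshold below are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Fact:
    key: str          # hypothetical identifier, e.g. "customer.plan"
    value: str
    updated_at: datetime

def validate_context(facts: list[Fact], now: datetime,
                     max_age: timedelta = timedelta(days=30)) -> tuple[list[str], list[str]]:
    """Return (stale_keys, conflicting_keys) for a list of context facts."""
    # Outdated: not refreshed within max_age.
    stale = sorted({f.key for f in facts if now - f.updated_at > max_age})
    # Conflicting: the same key appears with different values.
    values_by_key: dict[str, set[str]] = {}
    for f in facts:
        values_by_key.setdefault(f.key, set()).add(f.value)
    conflicts = sorted(k for k, vals in values_by_key.items() if len(vals) > 1)
    return stale, conflicts
```

Running a check like this before each request, or on a schedule, is one way to implement the automated flagging described above.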

Common Pitfalls

- Context Overload: Providing too much information can overwhelm AI models and lead to decreased performance. Some practitioners report that filling more than about 85% of the context window often degrades output quality.

- Information Redundancy: Repeating similar information in different forms wastes valuable context space and can confuse AI models.

- Inadequate Context Updates: Failing to update contextual information as conversations evolve leads to increasingly irrelevant responses.

- Ignoring Context Limitations: Not accounting for model-specific context window limitations can result in truncated or missing critical information.
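A common guard against overflowing a model's window is to keep the system prompt plus only the most recent conversation turns that fit. This is a minimal sketch, assuming a rough four-characters-per-token estimate (swap in the model's real tokenizer in practice) and an optional reservation of tokens for the model's reply.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)

def fit_to_window(system_prompt: str, history: list[str], max_tokens: int,
                  reserve_for_output: int = 0) -> list[str]:
    """Keep the system prompt and as many of the most recent turns as fit."""
    budget = max_tokens - reserve_for_output - estimate_tokens(system_prompt)
    if budget < 0:
        raise ValueError("System prompt alone exceeds the context window")
    kept: list[str] = []
    for turn in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(turn)
        if cost > budget:
            break  # stop at the first turn that does not fit
        kept.append(turn)
        budget -= cost
    return [system_prompt] + list(reversed(kept))  # restore chronological order
```

The loop stops at the first turn that does not fit rather than skipping it, so the kept history is always a contiguous, most-recent slice of the conversation; skipping would leave gaps that could make the conversation incoherent.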

Tools and Frameworks for Context Engineering

Popular Context Engineering Tools

- LangChain: Provides comprehensive context management capabilities including memory systems, document loaders, and context compression utilities.

- Semantic Kernel: Microsoft's framework offers sophisticated context orchestration features for enterprise applications.

- LlamaIndex: Specializes in context retrieval and indexing for knowledge-intensive applications.

- Pinecone: Vector database optimized for similarity-based context retrieval and management.
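Under the hood, similarity-based retrieval ranks stored embeddings by their closeness to a query embedding. The brute-force sketch below shows the idea with cosine similarity over a plain dict of hypothetical 3-dimensional vectors; it does not use Pinecone's API, and a vector database would replace the linear scan with an approximate nearest-neighbor index to scale to large collections.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat zero vectors as dissimilar

def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k stored vectors most similar to the query."""
    ranked = sorted(index.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]

# Hypothetical embeddings; a real system would obtain these from an embedding model
# and store them in a vector database rather than a dict.
INDEX = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-form": [0.8, 0.2, 0.1],
}
```

Only the retrieved documents are then added to the model's context, which keeps the context focused on the query instead of the whole knowledge base.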

Framework Comparison

Leading context engineering frameworks differ in their approaches:

- LangChain: Focuses on chain-based context flow with extensive ecosystem support

- Haystack: Emphasizes document-centric context management and search capabilities

- AutoGen: Specializes in multi-agent context coordination and conversation orchestration

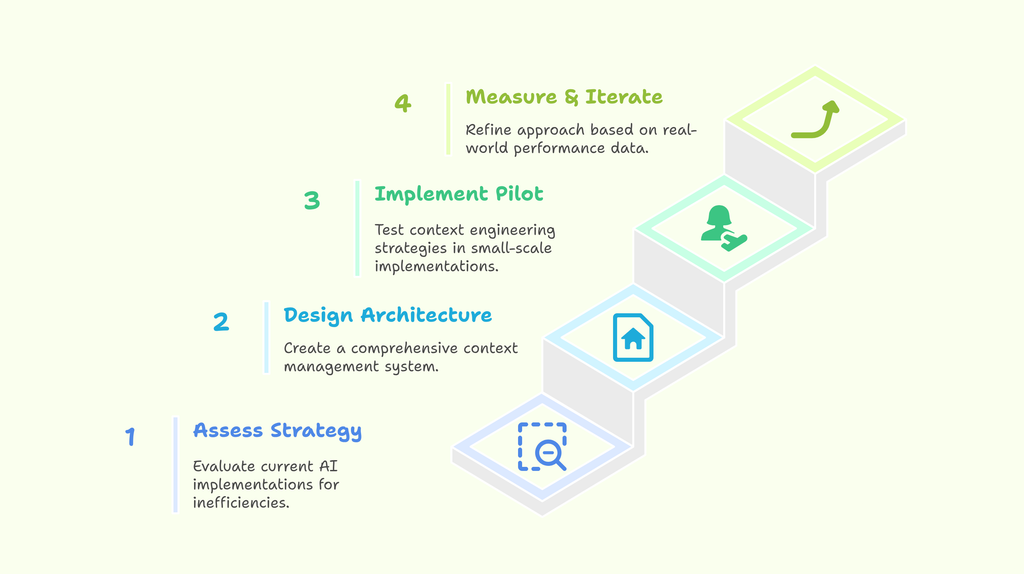

How to Get Started with Context Engineering

Step 1: Assess Your Current Context Strategy

Evaluate existing AI implementations to identify context-related inefficiencies. Look for patterns in conversation failures, user frustration points, and response accuracy issues.

Step 2: Design Context Architecture

Create a comprehensive context management system that includes:

- Information categorization schemes

- Context storage and retrieval mechanisms

- Update and validation procedures

- Performance monitoring systems
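While a design is still being prototyped, these four components can share one small data structure. The in-memory `ContextStore` below is a hypothetical sketch, not a reference architecture: categories are plain strings, updates overwrite the previous value for a key while recording a UTC timestamp for later validation, and a per-category read counter serves as a basic monitoring signal.

```python
from collections import Counter, defaultdict
from datetime import datetime, timezone

class ContextStore:
    """Minimal in-memory context store: categories, retrieval, updates, usage metrics."""

    def __init__(self) -> None:
        # category -> {key: (value, updated_at)}
        self._items: dict[str, dict[str, tuple[str, datetime]]] = defaultdict(dict)
        self.retrievals: Counter = Counter()  # category -> number of reads

    def upsert(self, category: str, key: str, value: str) -> None:
        # Overwriting keeps a single current value per key, avoiding redundant entries.
        self._items[category][key] = (value, datetime.now(timezone.utc))

    def get(self, category: str) -> dict[str, str]:
        self.retrievals[category] += 1  # monitoring: count how often each category is read
        # .get() avoids creating empty categories on lookup.
        return {k: v for k, (v, _) in self._items.get(category, {}).items()}

    def categories(self) -> list[str]:
        return sorted(self._items)
```

A persistent database or vector store would replace the dicts in production, but the same four responsibilities would still need a home.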

Step 3: Implement Pilot Programs

Start with small-scale implementations to test context engineering strategies. Focus on specific use cases where context quality significantly impacts outcomes.

Step 4: Measure and Iterate

Establish metrics for context effectiveness and continuously refine your approach based on real-world performance data.

Key Performance Indicators for Context Engineering:

- Response accuracy: Target a 40-60% improvement

- User satisfaction scores: Aim for 85%+ satisfaction rates

- Task completion efficiency: Reduce completion time by 30-50%

- Context utilization rate: Aim to use 60-75% of the available context window
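Each of these indicators reduces to simple arithmetic. The helper names and sample numbers below are hypothetical; relative change is measured against your own pre-change baseline, and utilization against the model's actual context-window size.

```python
def relative_change(baseline: float, current: float) -> float:
    """Percentage change relative to the baseline (negative means a reduction)."""
    return (current - baseline) / baseline * 100

def utilization(tokens_used: int, window_size: int) -> float:
    """Share of the context window in use, as a percentage."""
    return tokens_used / window_size * 100

def within(value: float, low: float, high: float) -> bool:
    return low <= value <= high

# Hypothetical measurements.
accuracy_gain = relative_change(0.50, 0.75)  # 50.0: inside the 40-60% target
time_change = relative_change(10.0, 6.0)     # about -40: a 40% reduction in completion time
usage = utilization(90_000, 128_000)         # 70.3125: inside the 60-75% target
```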

Real-World Context Engineering Examples

Customer Support Automation: A telecommunications company implemented advanced context engineering for its AI chatbot, integrating customer history, account information, and technical documentation. The company reported a 67% reduction in escalations to human agents and a 45% improvement in first-call resolution rates.

Legal Document Analysis: A law firm developed context engineering systems for contract review, incorporating relevant case law, regulatory frameworks, and firm-specific precedents. The firm reported that the system identified potential legal issues with 82% accuracy, compared with 34% using basic prompting approaches.

Medical Diagnosis Support: Healthcare providers using context-engineered AI assistants that integrate patient history, symptom databases, and treatment guidelines reported a 56% improvement in diagnostic accuracy for complex cases.

Future Trends in Context Engineering

The field of context engineering continues to evolve rapidly, with emerging trends including:

- Adaptive Context Systems: AI-driven context management that automatically optimizes information selection and presentation based on real-time performance feedback.

- Cross-Domain Context Transfer: Techniques for leveraging contextual understanding across different domains and applications.

- Quantum-Enhanced Context Processing: Exploration of quantum computing applications for complex context optimization problems.

Some industry forecasts predict that by 2026, 95% of enterprise AI implementations will incorporate some form of advanced context engineering, which would make it an essential skill for AI professionals.

Conclusion

Context engineering represents a fundamental shift in how we approach AI optimization, moving beyond simple prompt crafting to comprehensive information ecosystem design. As large language models become increasingly sophisticated, the quality and structure of contextual information will determine the difference between mediocre and exceptional AI performance.

The investment in context engineering capabilities can pay substantial dividends: some organizations implementing comprehensive context engineering strategies report average ROI improvements of 340% within the first year of implementation. Moreover, as AI systems become more integral to business operations, the competitive advantage provided by superior context engineering becomes increasingly valuable.

Success in context engineering requires a combination of technical expertise, domain knowledge, and continuous learning. The field evolves rapidly, with new techniques and best practices emerging regularly. However, the fundamental principles—clear information architecture, effective memory management, and dynamic context adaptation—remain constant guideposts for practitioners.