What is Neuromorphic Computing? A Detailed Guide

Introduction: What is Neuromorphic Computing?

The term neuromorphic refers to computing architectures modeled after the brain. Unlike the von Neumann architecture, which separates memory and computation and processes tasks sequentially, neuromorphic systems are designed to work in parallel, just like neurons and synapses in biological systems.

At its core, neuromorphic computing is about replicating how biological brains process information. Traditional von Neumann architectures suffer from the “memory wall,” where transferring data between memory and processors creates a bottleneck. Neuromorphic systems integrate memory and computation, allowing for event-driven, parallel, and energy-efficient processing.

Neuromorphic technology is not merely about mimicking biology; it's about leveraging nature's design principles to solve computational challenges that conventional machines struggle with. Problems requiring real-time adaptability, ultra-low energy use, and on-the-fly learning are prime candidates for neuromorphic approaches.

History of Neuromorphic Computing

The concept of neuromorphic computing dates back to the 1980s, when Carver Mead, an electrical engineer and computer scientist at Caltech, coined the term “neuromorphic engineering.” Mead envisioned circuits that emulated the functionality of neurons and synapses, leveraging analog VLSI (Very-Large-Scale Integration) design.

Timeline of Key Developments:

1980s: Early experiments in analog VLSI circuits modeled neural dynamics, paving the way for brain-inspired electronics.

1990s: Growth of neural network research and spiking neural networks (SNNs), which transmit signals using discrete spikes rather than continuous signals.

2000s: Progress in semiconductor design enabled larger, more sophisticated neuromorphic chips. Research shifted from purely academic experiments to practical architectures.

2010s: Industry participation accelerated. IBM’s TrueNorth and Intel’s Loihi became landmarks in neuromorphic processors, showing how millions of neurons could be simulated on hardware with extreme power efficiency.

2020s: Neuromorphic computing became an interdisciplinary field, merging neuroscience, AI, robotics, and material sciences. Universities (Manchester’s SpiNNaker, Heidelberg’s BrainScaleS) and tech giants (Intel, IBM, Qualcomm) are now driving innovation in neuromorphic architectures.

Neuromorphic computing has thus evolved from theory to hardware reality, with its potential now extending to real-world applications such as healthcare, robotics, and autonomous systems.

How Does Neuromorphic Computing Work?

Neuromorphic computing relies on specialized hardware known as neuromorphic processors or neuromorphic chips, which are designed to simulate the behavior of neurons and synapses.

Key Components of Neuromorphic Architecture:

- Neurons: Computational units that receive, integrate, and transmit signals.

- Synapses: Connections between neurons where signals are weighted and transmitted. In neuromorphic chips, these are implemented with electronic circuits or emerging materials like memristors.

- Spikes (Event-Driven Processing): Neuromorphic systems transmit data through spikes (discrete electrical pulses) rather than continuous signals, mirroring biological neurons.

- Parallel Processing: Neuromorphic chips can support millions of neurons and synapses operating simultaneously, enabling extreme parallelism.
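To make the neuron and spike components above concrete, here is a minimal Python sketch of a leaky integrate-and-fire (LIF) neuron, a simplified neuron model widely used in spiking networks. It is not the model of any particular chip: the parameter values (`weight`, `tau`, `v_thresh`), the simple per-step leak, and the Bernoulli rate coding used to turn a continuous value into a spike train are all assumptions chosen for illustration.

```python
import random

def rate_encode(value, n_steps, rng):
    """Bernoulli rate coding (illustrative assumption): at each time step,
    emit a spike (1) with probability `value`, where 0 <= value <= 1."""
    return [1 if rng.random() < value else 0 for _ in range(n_steps)]

def simulate_lif(input_spikes, weight=0.6, tau=10.0, v_thresh=1.0, v_reset=0.0, dt=1.0):
    """Leaky integrate-and-fire neuron with a single input synapse.

    Returns the time steps at which the neuron emitted an output spike.
    """
    decay = 1.0 - dt / tau  # Euler approximation of exponential leak toward 0
    v = v_reset             # membrane potential
    output_spikes = []
    for t, spike in enumerate(input_spikes):
        v *= decay          # leak: potential drifts back toward rest
        if spike:
            v += weight     # integrate: each input spike adds the synaptic weight
        if v >= v_thresh:   # fire: threshold crossed
            output_spikes.append(t)
            v = v_reset     # reset after the spike
    return output_spikes

if __name__ == "__main__":
    rng = random.Random(42)
    strong = simulate_lif(rate_encode(0.8, 50, rng))
    weak = simulate_lif(rate_encode(0.1, 50, rng))
    print("strong input ->", len(strong), "output spikes")
    print("weak input   ->", len(weak), "output spikes")
```

A higher input rate generally produces more output spikes, while the leak means isolated input spikes fade before they can push the potential over threshold. Nothing is computed between input spikes except the decay, which is the intuition behind event-driven hardware.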

Learning Mechanisms:

Neuromorphic processors use brain-inspired learning techniques:

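- Spike-Timing-Dependent Plasticity (STDP): Strengthens or weakens a synapse based on the relative timing of spikes on either side of it. If the presynaptic neuron fires shortly before the postsynaptic neuron, the connection is strengthened; if it fires shortly after, the connection is weakened.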

- Hebbian Learning: “Neurons that fire together, wire together,” mimicking biological learning patterns.

- On-Chip Learning: Unlike traditional AI, where models are trained on external GPUs, neuromorphic systems can learn locally and adapt in real time.
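The two learning rules above can be sketched in a few lines of Python. The STDP function uses the common pair-based exponential form; the amplitudes (`a_plus`, `a_minus`), time constants, all-to-all spike pairing, and weight clipping are illustrative assumptions, and hardware implementations typically use their own variants. The Hebbian function is the plain correlation rule, with no timing information at all.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: potentiate, less so for distant pairs
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: depress
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0   # simultaneous spikes: no change in this sketch

def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0, **params):
    """Sum the STDP contribution of every pre/post pair, then clip to [w_min, w_max]."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_delta(t_pre, t_post, **params)
    return min(max(w, w_min), w_max)

def hebbian_update(weights, pre, post, lr=0.1):
    """Plain Hebbian rule: dw[i][j] = lr * post[i] * pre[j].

    weights[i][j] is the synapse from presynaptic neuron j to postsynaptic neuron i.
    """
    return [[w_ij + lr * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
            for row, post_i in zip(weights, post)]
```

Because each update depends only on spike times and the current weight of that one synapse, it can be computed locally at the synapse, which is what makes rules like these a natural fit for on-chip learning.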

Neuromorphic Chips in Action:

- IBM TrueNorth: A chip with 1 million neurons and 256 million synapses, optimized for image and pattern recognition.

- Intel Loihi: Supports on-chip learning with real-time adaptability and has been used in robotics and smell recognition tasks.

- SpiNNaker (University of Manchester): Uses 1 million ARM cores to simulate biological spiking networks.

- BrainScaleS (Heidelberg University): Uses analog circuits to emulate brain activity at faster-than-biological speeds.

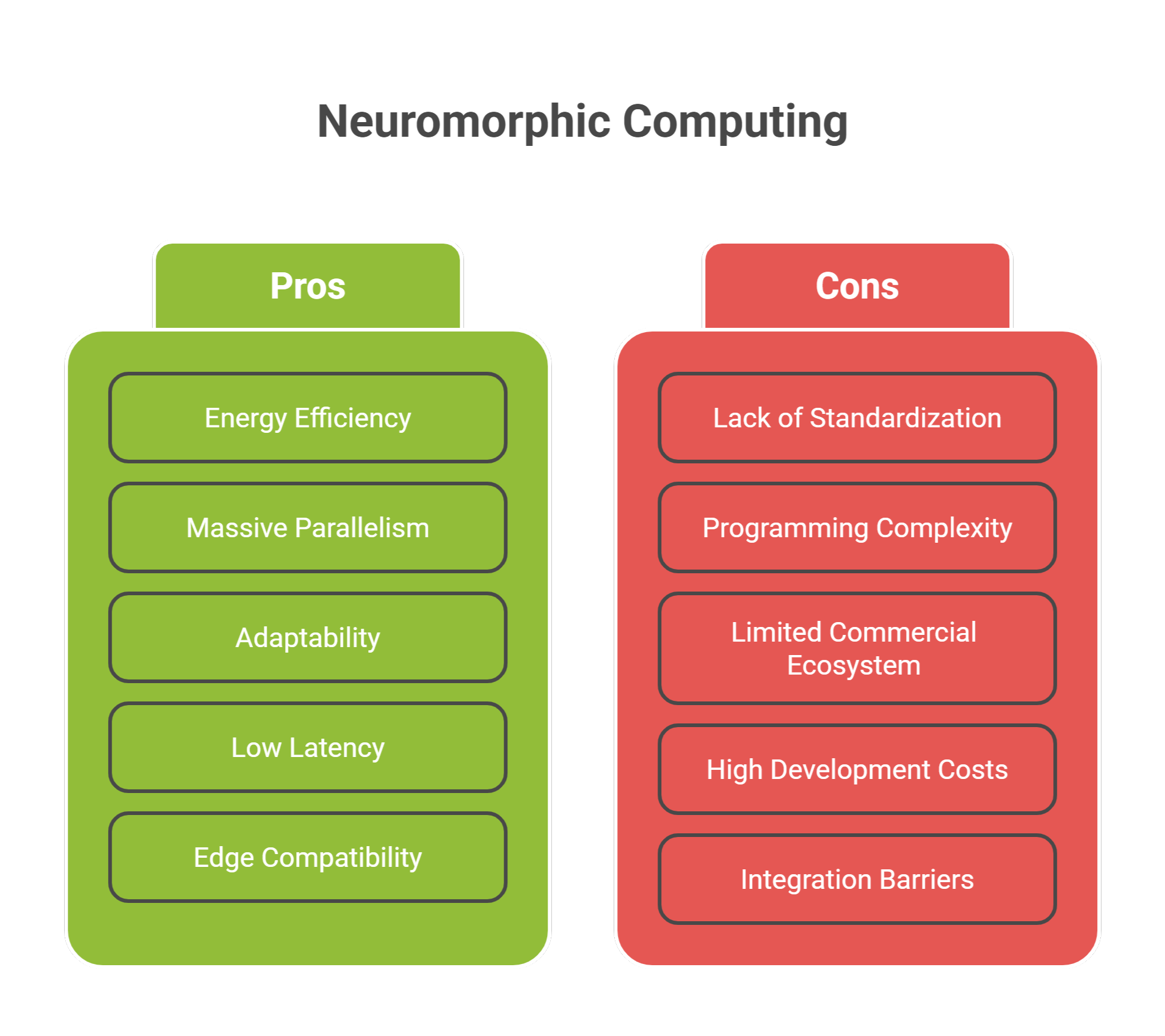

Advantages of Neuromorphic Computing

Neuromorphic computing has several clear advantages:

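- Energy Efficiency: The design takes inspiration from the human brain, which consumes only about 20 watts while performing complex tasks. Because event-driven chips do most of their work only when spikes occur, neuromorphic processors can run suitable workloads at a fraction of the energy required by conventional processors.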

- Massive Parallelism: Billions of neurons in the brain process signals simultaneously. Neuromorphic chips bring this parallelism to hardware.

- Adaptability: These systems can adapt to new information dynamically without requiring retraining from scratch.

- Low Latency: Because computation is triggered by spikes as they arrive, the system can react to new input immediately instead of waiting for the next frame or batch of data.

- Edge Compatibility: Neuromorphic AI is ideal for edge devices where energy efficiency and real-time decision-making are critical.
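The efficiency and latency advantages above both stem from event-driven processing. The sketch below compares a clock-driven layer, which multiplies every input by every weight on each step, with an event-driven one that only touches the weights of inputs that actually spiked. It counts operations rather than measuring energy, and the layer layout (`weights[i][j]` connecting input `j` to output `i`) and the 2% activity level are assumptions for illustration.

```python
def clock_driven_step(weights, inputs):
    """Dense update: every input is multiplied by every weight, spiking or not."""
    out = [0.0] * len(weights)
    ops = 0
    for i, row in enumerate(weights):
        for w, x in zip(row, inputs):
            out[i] += w * x
            ops += 1
    return out, ops

def event_driven_step(weights, spike_indices):
    """Sparse update: only inputs listed in `spike_indices` are processed.
    With binary spikes, each event simply adds the corresponding weight."""
    out = [0.0] * len(weights)
    ops = 0
    for j in spike_indices:
        for i, row in enumerate(weights):
            out[i] += row[j]
            ops += 1
    return out, ops

if __name__ == "__main__":
    n_in, n_out = 1000, 100
    weights = [[0.01] * n_in for _ in range(n_out)]
    spikes = [0] * n_in
    for j in range(0, n_in, 50):  # 20 of 1000 inputs (2%) spike this step
        spikes[j] = 1
    active = [j for j, s in enumerate(spikes) if s]
    _, dense_ops = clock_driven_step(weights, spikes)
    _, event_ops = event_driven_step(weights, active)
    print(dense_ops, event_ops)  # 100000 2000
```

For binary spike inputs both functions produce the same outputs, but the event-driven version does work proportional to the number of spikes rather than the number of inputs. How much energy this actually saves depends on the hardware.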

Disadvantages of Neuromorphic Computing

Despite its promise, neuromorphic computing faces hurdles:

- Lack of Standardization: Competing chip designs and programming models limit interoperability.

- Programming Complexity: Neuromorphic hardware requires new tools and programming paradigms that differ from familiar Python or TensorFlow-based deep learning workflows.

- Limited Commercial Ecosystem: While research is thriving, few commercial-ready products are available.

- High Development Costs: Building neuromorphic chips and architectures is expensive and resource-intensive.

- Integration Barriers: Existing infrastructure is heavily optimized for von Neumann architectures, making hybrid deployment difficult.

Applications of Neuromorphic Computing

Neuromorphic computing has vast potential applications across industries. Here are some of the most promising domains:

1. Artificial Intelligence (AI) and Machine Learning

Neuromorphic processors can execute real-time AI workloads while consuming minimal energy. Unlike GPUs, which require high power, neuromorphic AI can run efficiently on edge devices.

Example: Loihi demonstrated real-time adaptive learning in reinforcement learning environments, outperforming traditional architectures in efficiency.

2. Robotics and Autonomous Systems

Robotics relies on real-time sensory input and quick decision-making. Neuromorphic systems enable robots to process data from cameras, sensors, and microphones at low latency.

Applications: Self-driving cars, drones, humanoid robots, and manufacturing automation.

3. Healthcare and Brain-Machine Interfaces

Neuromorphic computing is opening new possibilities in healthcare technologies:

- Neural prosthetics: Chips could interface directly with the human nervous system for advanced prosthetic control.

- Medical imaging: Faster and more energy-efficient image recognition.

- Neurological treatment: Potential use in diagnosing and treating brain disorders such as epilepsy.

Example: Neuromorphic chips enabling prosthetic limbs to respond to neural signals almost instantly.

4. Edge Computing and IoT Devices

Internet of Things (IoT) devices often have strict power and size constraints. Neuromorphic processors enable AI on the edge without cloud dependency.

Example: Smart home assistants, wearable devices, and smart security cameras with built-in neuromorphic processors.

5. Cybersecurity

Real-time pattern recognition enables neuromorphic AI to detect anomalies, malware, or intrusions.

Example: Adaptive intrusion detection systems that improve over time without retraining.

6. Environmental Monitoring and Agriculture

Low-power neuromorphic sensors can continuously monitor the environment.

- Detect seismic activity in remote regions.

- Monitor crop health and soil quality.

- Track climate-related changes using distributed sensor networks.

7. Financial Services

Financial institutions can leverage neuromorphic AI for fraud detection, stock market predictions, and risk analysis with minimal power requirements.

Example: Neuromorphic systems detecting unusual transaction patterns in real time.

8. Military and Defense Applications

Defense systems require low-latency, high-reliability computation in remote environments. Neuromorphic computing can power autonomous drones, surveillance systems, and secure communications.

The Future of Neuromorphic Computing

The future of neuromorphic technology holds immense promise. Some expected trends include:

Integration with Mainstream AI: Neuromorphic processors may complement or replace GPUs for specialized AI tasks.

Scalable Architectures: Future chips may simulate billions of neurons, rivaling the human brain’s complexity.

Hybrid Architectures: Combining neuromorphic, quantum, and classical computing for next-gen supercomputers.

Commercial Adoption: Industries like healthcare, automotive, finance, and IoT may see neuromorphic systems in production.

Ethical Implications: As machines become more brain-like, ethical considerations around autonomy, privacy, and decision-making will intensify.

Ongoing efforts from IBM, Intel, Qualcomm, and academic institutions suggest that neuromorphic computing will continue to grow. Governments are also funding neuromorphic AI research for national security and economic competitiveness.

Conclusion

Neuromorphic computing represents a paradigm shift in computing, offering the possibility of machines that think, learn, and adapt more like humans. By mimicking the human brain’s structure, neuromorphic processors promise breakthroughs in AI, robotics, healthcare, and edge computing.

While challenges such as cost, complexity, and ecosystem development remain, the momentum behind neuromorphic architecture is strong. As this field matures, neuromorphic technology could power the next era of intelligent systems, enabling computation that is not only faster and more efficient but also adaptive and human-like.

The journey of neuromorphic computing is still unfolding, but it may well define the future of AI and computing as we know it.