Generative AI Design Patterns & Architecture

Generative AI (GenAI) has moved beyond hype to become a strategic advantage for businesses. From content creation and customer engagement to code generation and predictive analytics, its capabilities continue to expand.

However, few fully understand the complex architecture that powers GenAI solutions. For companies aiming to build or integrate GenAI applications, it is crucial to grasp what goes into a reliable, scalable, and business-aligned GenAI architecture.

In this article, we provide an in-depth look at how GenAI architecture is structured, how it supports various use cases, and what practical considerations enterprises should factor into their GenAI initiatives.

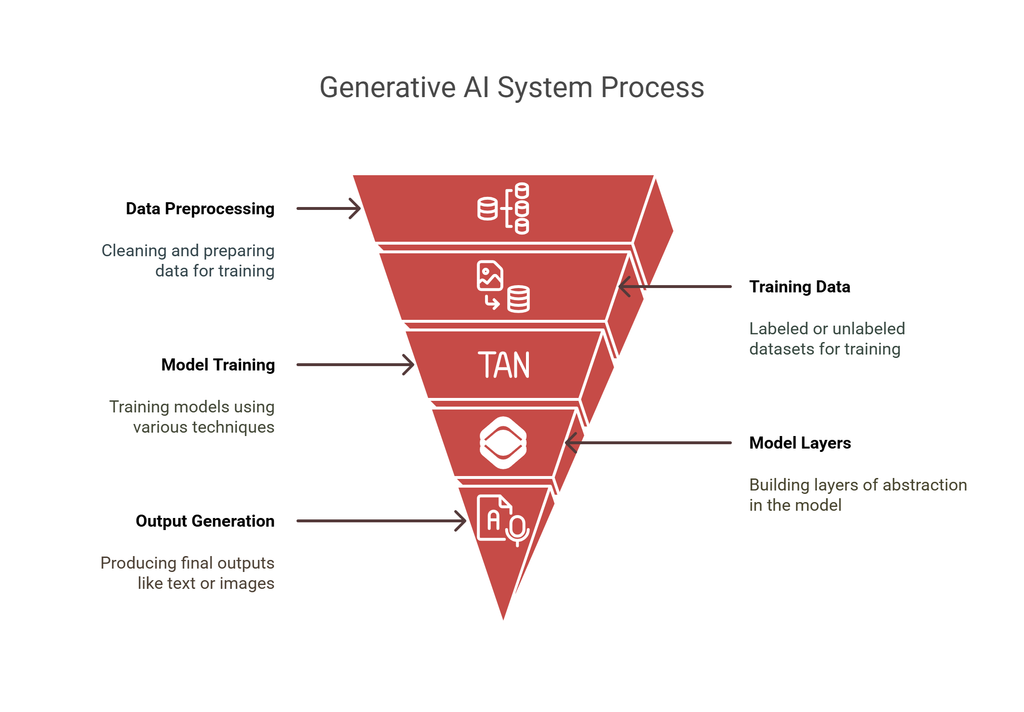

Understanding Generative AI Architecture

Core Components

Foundation models such as OpenAI's GPT-4, Google's PaLM, Anthropic's Claude, and Meta's LLaMA represent the computational core of GenAI.

These models have billions (or even trillions) of parameters and are trained on massive, diverse datasets, enabling them to generate highly coherent and relevant text, images, audio, or code.

Model Layer

Foundation Models

- Large Language Models (LLMs) like GPT-4, Claude, or PaLM

- Pre-trained models that provide base capabilities

- Configuration options for model parameters and deployment

- Version management and model lifecycle handling

Fine-tuned Models

- Domain-specific adaptations of foundation models

- Training data management and versioning

- Fine-tuning pipeline architecture

- Model evaluation and validation frameworks

- Performance monitoring and metrics collection

Domain-specific Models

- Purpose-built models for specific tasks

- Integration patterns with foundation models

- Specialized architectures for domain requirements

- Custom evaluation metrics and benchmarks

Key Characteristics:

- Pretrained on a broad corpus of web data, books, scientific literature, and code repositories.

- Designed to be fine-tuned or adapted for downstream tasks.

- Require immense GPU/TPU compute and storage.

Why It Matters

- A single foundation model like GPT-4 can be used to solve various problems with minimal fine-tuning.

- It also allows faster prototyping across content, design, and logic-heavy functions such as legal documentation or software development.

Quantitative Insight: Enterprises fine-tuning foundation models for niche applications (e.g., healthcare or finance) report an average 30-50% performance increase in domain-specific accuracy.


Data Pipelines

Even the best model underperforms without clean, relevant data. GenAI applications rely on robust data pipelines that feed the model both during training and inference.

Key Pipeline Components:

- Data ingestion: From APIs, CRMs, databases, cloud storage

- Preprocessing: Tokenization, deduplication, noise reduction

- Governance: Filtering biased, outdated, or unsafe content

- Storage: Efficient schema in data lakes or warehouses

Best Practices:

- Automate data refresh cycles to avoid stale input.

- Use labeled datasets wherever possible to steer output behavior.

Quantitative Insight: Optimized data pipelines contribute up to a 25% improvement in downstream model accuracy and response consistency.
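The preprocessing step above can be illustrated with a small sketch. It shows one possible way to combine noise reduction (dropping empty records) with deduplication on normalized content. The function names `normalize` and `deduplicate` are illustrative assumptions, not part of any specific pipeline framework.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so near-identical records compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(records: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each record, comparing normalized content."""
    seen = set()
    unique = []
    for record in records:
        cleaned = normalize(record)
        if not cleaned:
            continue  # drop empty or whitespace-only records (noise)
        digest = hashlib.sha256(cleaned.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record.strip())
    return unique
```

Hashing the normalized text keeps memory use predictable on large corpora; real pipelines often add fuzzy matching on top, which this sketch does not attempt.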

Model Fine-Tuning and Prompt Engineering

Once the base model is chosen and data pipelines are in place, the next strategic decision is how to tailor the model to business-specific needs.

This can be achieved either through fine-tuning or prompt engineering, depending on the use case, budget, and required performance.

Fine-Tuning

- Involves training the foundation model further on labeled or unlabeled data specific to your domain.

- Enables the model to understand niche vocabulary, follow internal business logic, and generate highly relevant outputs.

- Recommended for high-stakes scenarios such as healthcare, legal compliance, or financial analysis.

Prompt Engineering:

- A more lightweight and cost-effective alternative that uses structured prompts to guide the model’s output.

- No retraining is required; instead, it relies on crafting optimized prompts that contain context, variables, and intent.

- Ideal for rapid prototyping, MVPs, and scalable deployments without massive computational costs.

Choosing Between Them

- Use fine-tuning when accuracy, compliance, or domain specificity is non-negotiable.

- Use prompt engineering for agility, lower cost, and faster iteration cycles.

Prompt Engineering Patterns to Maximize Effectiveness:

To scale and manage prompt-based systems efficiently, a structured prompt engineering pattern should be adopted. Key components include:

Prompt Templates

- Standardized formats for different business intents

- Variable substitution mechanisms (e.g., customer names, product types)

- Versioning system to track changes

- Conditional logic to adapt prompts dynamically

- Validation rules to prevent malformed prompts

- Documentation for template usage and modification

Context Windows

- Optimization of token usage to stay within model limits

- Prioritization of key content (e.g., user intent over metadata)

- Budget management for token-intensive prompts

- Techniques like content compression and summarization

- Sliding window approaches for conversational memory

- Long-term memory strategies across sessions
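As a rough illustration of the sliding window approach, the sketch below keeps only the most recent messages that fit a token budget. The whitespace-based `count_tokens` is a stand-in assumption; a real system would use the tokenizer that matches its model.

```python
from typing import Dict, List


def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def sliding_window(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep the most recent messages whose combined size fits in max_tokens."""
    kept: List[Dict[str, str]] = []
    used = 0
    # Walk backwards from the newest message and stop at the first that does not fit
    for message in reversed(messages):
        cost = count_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Older turns that fall outside the window could be summarized and stored separately, which is one way to approach the long-term memory strategies listed above.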

Dynamic Variable Injection

- Runtime variable validation and type checking

- Default value support for missing data

- Error-handling logic for failed injections

- Scope control to prevent prompt pollution

- Sanitization of user inputs to avoid prompt injections
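A minimal sketch of these injection safeguards might look like the following. The `SPEC` dictionary, its variable names, and the helper functions are hypothetical; the sanitization shown is deliberately basic and should not be read as a complete defense against prompt injection.

```python
from typing import Any, Dict

# Hypothetical variable spec: expected type and optional default per variable
SPEC: Dict[str, Dict[str, Any]] = {
    "customer_name": {"type": str, "default": "Customer"},
    "order_count": {"type": int},
}


def sanitize(value: str, max_len: int = 200) -> str:
    """Strip braces and non-printable characters, then truncate."""
    cleaned = "".join(ch for ch in value if ch.isprintable() and ch not in "{}")
    return cleaned[:max_len]


def resolve_variables(provided: Dict[str, Any],
                      spec: Dict[str, Dict[str, Any]] = SPEC) -> Dict[str, Any]:
    """Apply defaults, type checks, scope control, and sanitization."""
    unexpected = set(provided) - set(spec)
    if unexpected:
        # Scope control: reject variables the template does not declare
        raise ValueError(f"Unexpected variables: {sorted(unexpected)}")
    resolved: Dict[str, Any] = {}
    for name, rule in spec.items():
        if name in provided:
            value = provided[name]
        elif "default" in rule:
            value = rule["default"]
        else:
            raise KeyError(f"Missing required variable: {name}")
        if not isinstance(value, rule["type"]):
            raise TypeError(f"{name} must be of type {rule['type'].__name__}")
        resolved[name] = sanitize(value) if isinstance(value, str) else value
    return resolved
```

Removing braces prevents user input from introducing new placeholders when the resolved values are later passed to a `str.format`-style template.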

Prompt Versioning

- Git-style version control or prompt registries

- Rollback systems to revert to stable prompts

- A/B testing support to evaluate prompt changes

- Performance metrics logging

- Compatibility checks for downstream applications or APIs

- Change tracking to monitor performance shifts
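One way to picture a prompt registry with rollback is the in-memory sketch below. The `PromptRegistry` class and its methods are illustrative assumptions; a production registry would typically persist versions in Git or a database and record who changed what.

```python
from typing import Dict, List, Optional


class PromptRegistry:
    """In-memory registry that keeps every published version of each prompt."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def publish(self, name: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest when no version is given."""
        history = self._versions[name]
        return history[-1] if version is None else history[version - 1]

    def rollback(self, name: str) -> str:
        """Drop the latest version and return the one that is now active."""
        history = self._versions[name]
        if len(history) < 2:
            raise ValueError(f"No earlier version of '{name}' to roll back to")
        history.pop()
        return history[-1]
```

Because every version stays addressable by number, an A/B test can serve two versions side by side and compare their logged metrics before one is promoted.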

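```python
import re
from datetime import datetime
from typing import Any, Callable, Dict, Set


class PromptTemplate:
    def __init__(self, template: str, version: str = "1.0"):
        self.template = template
        self.version = version
        self.variables = self._extract_variables()
        self.validation_rules: Dict[str, Callable[[Any], bool]] = {}
        now = datetime.now()
        self.metadata = {
            "created_at": now,
            "last_modified": now,
            "version_history": [],
        }

    def format(self, **kwargs) -> str:
        """Format the template with provided variables while applying validation rules."""
        self._validate_variables(kwargs)
        formatted_prompt = self.template.format(**kwargs)
        return self._apply_post_processing(formatted_prompt)

    def _extract_variables(self) -> Set[str]:
        """Extract placeholder names such as {customer_name} from the template."""
        pattern = r"\{([^}]+)\}"
        return {match.group(1) for match in re.finditer(pattern, self.template)}

    def _validate_variables(self, kwargs: Dict[str, Any]) -> None:
        """Validate provided variables against the template and the defined rules."""
        # Report missing variables up front instead of failing inside str.format
        missing = self.variables - set(kwargs)
        if missing:
            raise ValueError(f"Missing variables: {sorted(missing)}")
        for var_name, value in kwargs.items():
            if var_name not in self.variables:
                raise ValueError(f"Unexpected variable: {var_name}")
            if var_name in self.validation_rules:
                self._apply_validation_rule(var_name, value)

    def _apply_validation_rule(self, var_name: str, value: Any) -> None:
        """Assumed contract: a rule returns True when the value is acceptable."""
        if not self.validation_rules[var_name](value):
            raise ValueError(f"Validation failed for variable: {var_name}")

    def _apply_post_processing(self, prompt: str) -> str:
        """Placeholder post-processing step: trim surrounding whitespace."""
        return prompt.strip()

    def add_validation_rule(self, variable: str, rule: Callable[[Any], bool]) -> None:
        """Add a validation rule for a specific variable."""
        if variable not in self.variables:
            raise ValueError(f"Variable {variable} not found in template")
        self.validation_rules[variable] = rule
```

Decision Guidance: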

Quantitative Insight: Well-structured prompts using the above patterns have been shown to achieve 70-85% of the accuracy of fine-tuned models, often at less than one-tenth the cost.

This makes them valuable for customer support automation, content generation at scale, and internal tooling.

2. Retrieval-Augmented Generation (RAG) Pattern

RAG has become a cornerstone pattern for enhancing generative AI systems with external knowledge.

Instead of relying solely on pre-trained model data, RAG dynamically retrieves relevant context from external sources (like internal documents or databases) to feed into the prompt during inference.

This approach reduces hallucinations and mitigates outdated knowledge, especially when deploying LLMs in enterprise workflows involving real-time data, compliance documents, or product catalogs.

Use Case Example: In an enterprise knowledge assistant, RAG can pull content from the company’s Confluence pages, policy documents, or help center articles and use that as grounding context for each query, improving answer precision and minimizing legal/compliance risks.

RAG Architecture Components

To implement RAG effectively, the following architectural elements must be carefully designed and orchestrated:

Document Processing Pipeline

- Document Parsing & Extraction: Ingest documents from various formats (PDF, HTML, etc.) and extract usable text.

- Text Cleaning & Normalization: Remove noise, fix encoding issues, and normalize punctuation, casing, etc.

- Metadata Extraction: Identify key attributes like document type, author, creation date, or category.

- Chunking Strategies: Split documents into semantically coherent sections for better retrieval (e.g., paragraphs, headings).

- Update Mechanisms: Trigger re-indexing upon document updates.

- Version Control: Maintain document history for compliance or rollback needs.
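The chunking step can be sketched as follows. This is a minimal paragraph-based splitter, assuming paragraphs are separated by blank lines; the `chunk_paragraphs` name and the character-based size limit are illustrative choices, and many systems chunk by tokens or headings instead.

```python
from typing import List


def chunk_paragraphs(text: str, max_chars: int = 500) -> List[str]:
    """Pack consecutive paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current = ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks
```

Splitting on paragraph boundaries keeps each chunk semantically coherent, which tends to matter more for retrieval quality than hitting an exact size.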

Vector Database

- Embedding Storage Optimization: Efficiently store vector representations of document chunks.

- Index Management: Use approximate nearest-neighbor indexes such as HNSW or IVF (available in libraries like FAISS) for fast similarity search.

- Query Optimization: Tune the embedding match threshold and result limits.

- Scaling Strategies: Partition data and use sharding or replication to scale reads/writes.

- Backup & Recovery: Ensure persistence and data recovery in case of failures.

- Performance Monitoring: Track query latency, indexing time, and embedding health.
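To make the query-side settings concrete, here is a brute-force sketch of similarity search with a match threshold and a result limit. It stands in for what a vector database does internally with an approximate index; the `search` function and its parameters are assumptions for illustration only.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: Sequence[float],
           index: Dict[str, Sequence[float]],
           limit: int = 3,
           min_score: float = 0.0) -> List[Tuple[str, float]]:
    """Return up to `limit` (chunk_id, score) pairs scoring at least min_score."""
    scored = [(chunk_id, cosine_similarity(query, vector))
              for chunk_id, vector in index.items()]
    hits = [item for item in scored if item[1] >= min_score]
    hits.sort(key=lambda item: item[1], reverse=True)
    return hits[:limit]
```

Exhaustive scoring like this is linear in the number of stored chunks, which is why larger deployments rely on approximate indexes and accept a small loss in recall.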

Context Integration

- Context Relevance Scoring: Score retrieved chunks on how relevant they are to the query.

- Integration Strategies: Merge retrieved content into the model input prompt intelligently (e.g., structured format, quoted references).

- Context Window Management: Fit within model token limits using trimming or compression.

- Priority Handling: Give preference to certain document types or sources.

- Context Merging: Combine semantically overlapping results into unified chunks.
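Relevance scoring and context window management can be combined in a simple greedy packer, sketched below. It assumes each retrieved chunk already carries a relevance score and uses a word count as a stand-in for real token counting; `pack_contexts` is a hypothetical helper name.

```python
from typing import List, Tuple


def pack_contexts(scored_chunks: List[Tuple[float, str]], token_budget: int) -> str:
    """Greedily add the highest-scoring chunks that still fit in the budget."""
    selected: List[str] = []
    used = 0
    for _score, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        cost = len(text.split())  # rough token estimate
        if used + cost > token_budget:
            continue  # skip this chunk; a smaller, lower-ranked one may still fit
        selected.append(text)
        used += cost
    # Number the chunks so the model can refer to them as quoted references
    return "\n".join(f"[{i}] {text}" for i, text in enumerate(selected, start=1))
```

Priority handling for certain sources could be layered on by boosting their scores before packing.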

```python
from __future__ import annotations

import asyncio
from typing import Any, Dict, List

# VectorStore, DocumentProcessor, EmbeddingModel, LanguageModel, and Document
# are application-specific interfaces that this listing does not define.


class RAGSystem:
    def __init__(self,
                 vector_store: VectorStore,
                 document_processor: DocumentProcessor,
                 embedding_model: EmbeddingModel,
                 llm: LanguageModel):
        self.vector_store = vector_store
        self.document_processor = document_processor
        self.embedding_model = embedding_model
        self.llm = llm
        self.config = self._load_config()

    def _load_config(self) -> Dict[str, Any]:
        """Placeholder: load retrieval settings from your configuration source."""
        return {}

    async def process_document(self, document: Document) -> None:
        """Process and store a document in the RAG system."""
        # Document processing pipeline
        chunks = self.document_processor.chunk(document)
        # Embed all chunks concurrently rather than one at a time
        embeddings = await asyncio.gather(
            *(self.embedding_model.embed(chunk) for chunk in chunks)
        )
        # Store in vector database
        await self.vector_store.store(
            document_id=document.id,
            chunks=chunks,
            embeddings=list(embeddings),
            metadata=document.metadata,
        )

    async def generate_response(self,
                                query: str,
                                num_contexts: int = 3) -> str:
        """Generate a response using the RAG pattern."""
        # Generate query embedding
        query_embedding = await self.embedding_model.embed(query)
        # Retrieve relevant contexts
        contexts = await self.vector_store.search(
            embedding=query_embedding,
            limit=num_contexts,
        )
        # Prepare prompt with contexts
        prompt = self._prepare_prompt(query, contexts)
        # Generate response
        response = await self.llm.generate(prompt)
        return response

    def _prepare_prompt(self,
                        query: str,
                        contexts: List[Document]) -> str:
        """Prepare prompt with retrieved contexts."""
        context_text = "\n\n".join(
            f"Context {i + 1}:\n{context.text}"
            for i, context in enumerate(contexts)
        )
        return (
            "Use the following contexts to answer the question.\n\n"
            f"{context_text}\n\n"
            f"Question: {query}\n\n"
            "Answer:"
        )
```

Best Practices for Implementation

1. Error Handling

Implementing robust error handling is crucial for AI systems. Here's a comprehensive approach:

```python
from __future__ import annotations

import uuid
from datetime import datetime, timezone
from enum import Enum
from typing import Any, Dict, Optional


class ErrorSeverity(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


class ErrorCategory(Enum):
    MODEL = "model"
    INFRASTRUCTURE = "infrastructure"
    INPUT = "input"
    SECURITY = "security"
    BUSINESS_LOGIC = "business_logic"


class AIServiceError(Exception):
    def __init__(self,
                 error_type: ErrorCategory,
                 message: str,
                 severity: ErrorSeverity,
                 retry_allowed: bool = True,
                 context: Optional[Dict[str, Any]] = None):
        self.error_type = error_type
        self.message = message
        self.severity = severity
        self.retry_allowed = retry_allowed
        self.context = context or {}
        self.timestamp = datetime.now(timezone.utc)
        self.error_id = self._generate_error_id()
        super().__init__(self.message)

    def _generate_error_id(self) -> str:
        """Generate unique error ID for tracking"""
        return f"{self.error_type.value}-{uuid.uuid4()}"

    def to_dict(self) -> Dict[str, Any]:
        """Convert error to dictionary for logging"""
        return {
            "error_id": self.error_id,
            "type": self.error_type.value,
            "message": self.message,
            "severity": self.severity.value,
            "retry_allowed": self.retry_allowed,
            "context": self.context,
            "timestamp": self.timestamp.isoformat(),
        }


class AIErrorHandler:
    # ErrorLogger and NotificationService are application-specific interfaces.
    # The strategy maps are injected by the caller (an assumption of this
    # listing). They are keyed by ErrorCategory, must include a 'default'
    # entry, and hold objects exposing an async execute(error, context).
    def __init__(self,
                 error_logger: ErrorLogger,
                 notification_service: NotificationService,
                 retry_strategies: Dict[Any, Any],
                 fallback_strategies: Dict[Any, Any],
                 max_retries: int = 3):
        # Parameters without defaults must come before max_retries
        self.max_retries = max_retries
        self.error_logger = error_logger
        self.notification_service = notification_service
        self.retry_strategies = retry_strategies
        self.fallback_strategies = fallback_strategies

    async def handle_error(self,
                           error: AIServiceError,
                           context: Optional[Dict[str, Any]] = None) -> Any:
        """Handle AI service errors with appropriate strategies"""
        context = context or {}
        # Log error
        await self.error_logger.log_error(error)
        # Notify if critical
        if error.severity == ErrorSeverity.CRITICAL:
            await self.notification_service.notify_team(error)
        # Attempt retry if allowed
        if error.retry_allowed:
            return await self._attempt_retry(error, context)
        # Return fallback response if no retry possible
        return await self._fallback_response(error, context)

    async def _attempt_retry(self,
                             error: AIServiceError,
                             context: Dict[str, Any]) -> Any:
        """Attempt to retry the failed operation"""
        retry_strategy = self.retry_strategies.get(
            error.error_type,
            self.retry_strategies["default"],
        )
        return await retry_strategy.execute(error, context)

    async def _fallback_response(self,
                                 error: AIServiceError,
                                 context: Dict[str, Any]) -> Any:
        """Provide appropriate fallback response"""
        fallback_strategy = self.fallback_strategies.get(
            error.error_type,
            self.fallback_strategies["default"],
        )
        return await fallback_strategy.execute(error, context)
```

Monitoring, Feedback Loops, and Observability

A GenAI system is not “set and forget.” Post-deployment observability is key for safety, user trust, and operational resilience.

Metrics to Track:

- Accuracy (BLEU, F1, task completion)

- Latency and uptime

- Prompt injection and hallucination rates

- User satisfaction (CSAT, feedback forms)
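As an example of one accuracy metric from this list, the sketch below computes a token-level F1 score between a model answer and a reference answer. It assumes simple lowercase whitespace tokenization, which is a simplification; the `token_f1` name is illustrative.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two strings."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty strings count as a perfect match; one empty string as a miss
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

For instance, scoring "the cat sat" against the reference "the cat" gives precision 2/3 and recall 1, so the F1 is 0.8.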

Tooling Stack:

- Logging & alerts: Prometheus, Grafana, ELK

- App monitoring: Sentry, DataDog

- Feedback loops: Human-in-the-loop (HITL), RLHF

Quantitative Insight: Enterprises that invest in active monitoring and feedback loops see a 40%+ reduction in hallucination and critical error rates within three months.

```python
import asyncio
from typing import Any, Dict


class AIMonitoring:
    def __init__(self,
                 metrics_client,
                 tracing_client,
                 log_client):
        self.metrics_client = metrics_client
        self.tracing_client = tracing_client
        self.log_client = log_client
        self.performance_metrics = {}

    async def record_request(self,
                             request_id: str,
                             context: Dict[str, Any]):
        """Start a trace span for an incoming request"""
        span = self.tracing_client.start_span(
            name="ai_request",
            attributes={
                "request_id": request_id,
                **context,
            },
        )
        return span

    async def record_completion(self,
                                request_id: str,
                                metrics: Dict[str, float]):
        """Record completion metrics"""
        # record_metrics is assumed to be a coroutine, as in monitor_health
        await self.metrics_client.record_metrics({
            "total_tokens": metrics.get("total_tokens", 0),
            "response_time": metrics.get("response_time", 0),
            "model_latency": metrics.get("model_latency", 0),
        }, tags={"request_id": request_id})

    async def monitor_health(self):
        """Monitor system health metrics"""
        while True:
            metrics = await self._collect_health_metrics()
            await self.metrics_client.record_metrics(metrics)
            await asyncio.sleep(60)  # Check every minute

    async def _collect_health_metrics(self):
        # The _get_* helpers are deployment-specific and are not defined here
        return {
            "memory_usage": self._get_memory_usage(),
            "cpu_usage": self._get_cpu_usage(),
            "request_queue_size": await self._get_queue_size(),
            "active_connections": await self._get_active_connections(),
        }
```

Governance, Privacy, and Responsible AI

Security Requirements

- Role-based access to models and data

- Encryption in transit and at rest

- PII redaction before model ingestion
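PII redaction before ingestion can be approximated with pattern matching, as in the sketch below. The two regular expressions are illustrative assumptions that catch common email and phone formats only; they will miss other identifiers (names, addresses, account numbers), so production systems usually pair patterns with a dedicated PII detection service.

```python
import re

# Illustrative patterns only; they do not cover every email or phone format
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

Running redaction before the text reaches the model, logs, or the vector store keeps raw identifiers out of every downstream component at once.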

Ethical AI Framework

- Bias audits (pre- and post-deployment)

- Disclosure for AI-generated content

- Diversity-aware training practices

Compliance Focus: Ensure conformance with GDPR, HIPAA, SOC 2, and ISO/IEC 27001, based on your market and region.

Quantitative Insight: Organizations that embed responsible AI practices from day one reduce compliance incidents and reputation risks by over 50%.

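```python
from __future__ import annotations

# AuthService, RateLimiter, and AIRequest are application-specific interfaces.


class AuthenticationError(Exception):
    """Raised when the auth token cannot be verified."""


class RateLimitExceeded(Exception):
    """Raised when a user exceeds the allowed request rate."""


class PermissionDenied(Exception):
    """Raised when a user lacks permission for the request."""


class SecurityValidationError(Exception):
    """Raised when input content fails a security rule."""


class AISecurityManager:
    def __init__(self,
                 auth_service: AuthService,
                 rate_limiter: RateLimiter):
        self.auth_service = auth_service
        self.rate_limiter = rate_limiter
        self.security_rules = self._load_security_rules()

    def _load_security_rules(self) -> list:
        """Placeholder: return rule objects exposing `name` and async `validate(content)`."""
        return []

    async def _check_permissions(self, user, request) -> bool:
        """Placeholder: replace with a real role-based check. Denies by default."""
        return False

    async def validate_request(self,
                               request: AIRequest,
                               auth_token: str) -> bool:
        """Validate request against security rules"""
        # Authenticate user
        user = await self.auth_service.authenticate(auth_token)
        if not user:
            raise AuthenticationError("Invalid authentication token")
        # Check rate limits
        if not await self.rate_limiter.check_limit(user.id):
            raise RateLimitExceeded("Rate limit exceeded")
        # Validate input
        await self._validate_input(request.content)
        # Check permissions
        if not await self._check_permissions(user, request):
            raise PermissionDenied("Insufficient permissions")
        return True

    async def _validate_input(self, content: str):
        """Validate input content for security issues"""
        for rule in self.security_rules:
            if not await rule.validate(content):
                raise SecurityValidationError(
                    f"Input failed security validation: {rule.name}"
                )
```

Scalability Patterns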

Implementing scalable AI systems requires careful consideration of resource utilization and performance optimization.

Load Balancing

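```python
from __future__ import annotations

import asyncio
from typing import Dict, List

# ModelServer is an application-specific type exposing async get_current_load().


class AILoadBalancer:
    def __init__(self,
                 model_servers: List[ModelServer],
                 strategy: str = "round_robin"):
        self.model_servers = model_servers
        self.strategy = strategy
        self.current_index = 0
        self.server_stats: Dict[int, float] = {}

    async def get_next_server(self) -> ModelServer:
        """Get next available server based on strategy"""
        if self.strategy == "round_robin":
            return await self._round_robin_selection()
        elif self.strategy == "least_loaded":
            return await self._least_loaded_selection()
        elif self.strategy == "response_time":
            return await self._response_time_selection()
        raise ValueError(f"Unknown strategy: {self.strategy}")

    async def _round_robin_selection(self) -> ModelServer:
        """Simple round-robin server selection"""
        server = self.model_servers[self.current_index]
        self.current_index = (self.current_index + 1) % len(self.model_servers)
        return server

    async def _least_loaded_selection(self) -> ModelServer:
        """Select server with lowest current load"""
        # Query all servers concurrently
        server_loads = await asyncio.gather(*[
            server.get_current_load()
            for server in self.model_servers
        ])
        return self.model_servers[
            server_loads.index(min(server_loads))
        ]

    async def _response_time_selection(self) -> ModelServer:
        """Select the server with the lowest recorded average response time.

        Assumption: server_stats maps a server's list index to its average
        response time in seconds; servers without stats are tried first.
        """
        best_index = min(
            range(len(self.model_servers)),
            key=lambda i: self.server_stats.get(i, 0.0),
        )
        return self.model_servers[best_index]
```

Caching Strategy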
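A response cache is only as good as its keys. The sketch below shows one possible way to derive a key from the model name, a whitespace-normalized prompt, and the generation parameters, so that the same request maps to the same entry regardless of parameter order. The `make_cache_key` helper is an assumption for illustration, not part of any caching library.

```python
import hashlib
import json
from typing import Any, Dict


def make_cache_key(model: str, prompt: str, params: Dict[str, Any]) -> str:
    """Build a deterministic cache key for a generation request."""
    payload = json.dumps(
        {
            "model": model,
            "prompt": " ".join(prompt.split()),  # collapse whitespace differences
            "params": params,
        },
        sort_keys=True,  # make the key independent of dict ordering
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Including the parameters matters because the same prompt at a different temperature or token limit can legitimately produce a different response; caching tends to be most useful for deterministic settings.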

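```python
from typing import Any, Awaitable, Callable


class CacheMetrics:
    """Minimal hit/miss counter; swap in your metrics backend."""

    def __init__(self) -> None:
        self.hits = 0
        self.misses = 0

    async def record_hit(self) -> None:
        self.hits += 1

    async def record_miss(self) -> None:
        self.misses += 1


class AIResponseCache:
    def __init__(self,
                 cache_client,
                 ttl: int = 3600,
                 max_size: int = 10000):
        self.cache = cache_client
        self.ttl = ttl
        # Not enforced here; eviction is left to the cache client
        self.max_size = max_size
        self.metrics = CacheMetrics()

    async def get_or_compute(self,
                             key: str,
                             compute_fn: Callable[[], Awaitable[Any]]) -> Any:
        """Get from cache or compute result"""
        # Check cache first
        cached_result = await self.cache.get(key)
        if cached_result is not None:
            await self.metrics.record_hit()
            return cached_result
        # Compute if not in cache
        result = await compute_fn()
        # Store in cache
        await self.cache.set(key, result, ttl=self.ttl)
        await self.metrics.record_miss()
        return result
```

Testing and Validation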

Comprehensive testing is crucial for AI systems. Key testing areas include:

Model Testing

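```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Any, List, Optional

# AIModel is an application-specific type exposing async generate(input).


@dataclass
class TestCase:
    input: str
    expected: Any


@dataclass
class TestResult:
    test_case: TestCase
    success: bool
    response: Optional[Any] = None
    duration: Optional[float] = None
    error: Optional[str] = None


@dataclass
class TestResults:
    results: List[TestResult]


class AIModelTester:
    def __init__(self,
                 model: AIModel,
                 test_cases: List[TestCase]):
        self.model = model
        self.test_cases = test_cases
        self.results: List[TestResult] = []

    async def run_tests(self) -> TestResults:
        """Run all test cases against the model"""
        for test_case in self.test_cases:
            result = await self._run_single_test(test_case)
            self.results.append(result)
        return TestResults(self.results)

    async def _run_single_test(self,
                               test_case: TestCase) -> TestResult:
        """Run single test case"""
        try:
            start_time = time.perf_counter()
            response = await self.model.generate(test_case.input)
            end_time = time.perf_counter()
            return TestResult(
                test_case=test_case,
                response=response,
                duration=end_time - start_time,
                success=await self._validate_response(
                    response,
                    test_case.expected,
                ),
            )
        except Exception as e:
            return TestResult(
                test_case=test_case,
                error=str(e),
                success=False,
            )

    async def _validate_response(self, response: Any, expected: Any) -> bool:
        """Placeholder check: exact match. Generative outputs usually need a
        looser comparison, such as semantic similarity or a rubric."""
        return response == expected
```

Conclusion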

Building a successful GenAI architecture isn’t about choosing a trendy model; it’s about assembling a reliable, efficient, and secure system that can evolve with your needs.

Enterprises must:

- Choose the right foundation model

- Balance between fine-tuning and prompt engineering

- Set up robust serving infrastructure

- Monitor outputs and user interactions in real time

- Enforce governance, security, and compliance from day one

- Build clean, responsive data pipelines