Best Programming Languages for Artificial Intelligence (AI) Development in 2025

Artificial Intelligence (AI) is reshaping how people use technology, automate everyday tasks, and create new possibilities. From voice assistants that understand intent to systems that learn from data, AI has become a core part of how products and services evolve.

When starting an AI project, one of the most important decisions you’ll make is choosing the programming language. The right language can make development faster, experiments easier, and results more reliable over time.

This article breaks down the most effective programming languages for AI development, explains when each one works best, and points out where each falls short. It also includes a simple cheat sheet to help you pick the one that fits your goals.

What is Artificial Intelligence (AI)?

AI refers to the field of computer science dedicated to creating intelligent machines that can mimic human cognitive functions. These machines can learn from data, identify patterns, make decisions, and solve problems autonomously. AI encompasses various subfields like machine learning, natural language processing (NLP), and computer vision.

Why Is AI Development Important?

AI is changing how industries operate, from healthcare and finance to logistics and retail. Companies are rapidly adopting intelligent systems to automate work, improve decisions, and deliver more personalized experiences.

According to the AI Index Report 2025 (Stanford University), global investment in AI grew by 35 percent in a single year, and developers are increasingly relying on specialized AI platforms and tools to build, train, and deploy intelligent systems efficiently. Meanwhile, Precedence Research projects the AI software market will reach USD 407 billion by 2027, up from USD 86.9 billion in 2022.

This surge has created strong demand for developers fluent in AI programming. The language you choose directly affects how fast you can build, train, and refine models. Data from the AI Index 2025 shows that over 60% of companies prioritize language ecosystems and library support when choosing their tech stack, a sign that your choice of language can shape the success of your AI projects.

Top Programming Languages for AI Development

Many programming languages find their niche within AI development. Here are the frontrunners:

1. Python

Python is the first choice for many AI developers because it removes unnecessary complexity from the coding process. Its clean syntax helps you focus on understanding data, building models, and experimenting with ideas without getting slowed down by complicated code structures. The language has grown alongside the AI field, which means you’ll find tools for almost every task you want to tackle, including a growing set of Python libraries for AI that cover everything from data handling to model training.

How is Python used in AI?

Python powers machine learning, deep learning, computer vision, natural language processing, automation pipelines, and rapid prototyping. Whether you’re training your first model or refining a large neural network, Python offers the tools to get you moving quickly.

Popular Python libraries for AI

- NumPy for numerical computation

- Pandas for data cleaning and manipulation

- Matplotlib/Seaborn for visual analysis

- scikit-learn for classical ML models

- TensorFlow / PyTorch for deep learning

- Transformers (Hugging Face) for NLP applications

Why do developers choose Python?

Python gives you a rich ecosystem that solves common AI problems with minimal effort. You’ll find tutorials for nearly every challenge, active community support, and countless pre-built modules that reduce development time. This combination makes learning and building with Python smoother, especially for beginners and teams working through fast iteration cycles.

Where does Python fall short?

Pure Python code can be slow for compute-heavy tasks unless the heavy lifting is handed to optimized libraries such as NumPy, whose core routines are compiled rather than interpreted. It may also struggle in systems that depend on low-level control or strict execution-speed guarantees.

What is Python best suited for?

Python is ideal for beginners getting into AI, data scientists exploring models, researchers testing ideas, and developers working on machine learning, NLP, or computer vision. It’s the most accessible path if you want to learn AI fundamentals without fighting the language itself.

2. R

R is a powerful choice for AI work that leans heavily on statistics, experimentation, or deep data exploration. It was built for data analysis, which is why many researchers and analysts rely on it when they need precise mathematical insight or want to understand patterns inside complex datasets. Its visualization tools also make it easier to communicate findings clearly.

How is R used in AI?

R is used for statistical modeling, predictive analytics, exploratory data analysis, clustering, and certain machine learning tasks. It’s especially helpful in fields that depend on statistical accuracy, such as healthcare analytics, finance, bioinformatics, and academic research.

Popular R libraries for AI

- ggplot2 for detailed data visualisation

- dplyr for data manipulation

- caret for machine learning workflows

- randomForest for tree-based models

- nnet for simple neural networks

- tidymodels for structured ML pipelines

Why do developers choose R?

R gives you tight control over statistical techniques and makes it easier to interpret model behavior. Its plotting libraries offer clear visual output, which is valuable when you need to explain insights or validate model assumptions. Researchers appreciate how naturally it fits mathematical and analytical workflows.

Where might R not fit AI requirements?

R is less effective for large-scale engineering projects or applications that require high-performance execution. It also lacks the same breadth of deep learning tools found in languages like Python.

Who benefits most from using R?

R suits data scientists, statisticians, academic researchers, and professionals who spend most of their time analyzing data and validating models. It’s a strong choice if your AI work prioritizes accuracy, transparency, and statistical depth.

3. Java

Java is trusted by teams that need stable, long-term AI solutions. Its structured approach, predictable behavior, and wide adoption in enterprise environments make it a strong option when AI features must run within large products or integrated systems. Developers who work in companies with existing Java infrastructure often prefer it because it fits smoothly into their workflow.

How is Java used in AI?

Java supports machine learning pipelines, recommendation systems, natural language processing, fraud detection, and backend services that need AI components. It is also frequently used in enterprise applications where AI must work alongside existing business logic and large codebases.

Popular Java libraries for AI

- Deeplearning4j for deep learning

- Weka for classical machine learning

- Mallet for NLP and topic modelling

- Neuroph for building small neural networks

- Java-ML for ML algorithms without heavy dependencies

Why do developers choose Java?

Java offers consistent performance, and its explicit structure keeps large codebases maintainable across big teams. Many companies already use Java for their core systems, which makes it practical to extend them with AI without switching languages. Its static type system can also catch errors at compile time while you build complex workflows.

Where can Java be challenging in AI projects?

Java can feel heavy when working through experimental ideas or trying to iterate quickly. It’s less convenient for rapid prototyping, and its syntax requires more boilerplate compared to languages like Python.

When is Java the right choice?

Java fits developers working in enterprise environments, teams maintaining long-term software products, and projects where AI needs to run within a large application ecosystem. It’s a reliable option for production-grade AI features that need consistency over time.

4. C++

C++ appeals to developers who need precision and control. It offers low-level access to memory and hardware, which is why it’s often used when AI workloads push systems to their limits. Tasks that involve heavy computation or require fine-tuned optimization benefit from the efficiency C++ provides.

How is C++ used in AI?

C++ plays an important role in areas like computer vision, reinforcement learning, robotics, signal processing, and high-performance model deployment. Many deep learning frameworks use C++ under the hood, even if developers interact with them through Python.

Popular C++ libraries for AI

- TensorRT for optimised inference

- OpenCV for computer vision

- Caffe for deep learning

- dlib for ML and CV tasks

- Shark ML for classical machine learning

- mlpack for fast ML algorithms

Why do developers choose C++?

C++ gives you the ability to squeeze more performance from your hardware and tune your model execution for demanding environments. It’s useful when latency, memory management, or execution speed directly impact the quality of the system. Many production AI engines rely on C++ at their core for this reason.

Things to consider before choosing C++

C++ has a steeper learning curve, and writing or debugging code can take more effort. It’s not ideal for early experimentation or quick model iterations, especially compared to Python.

Who does C++ work best for?

C++ suits developers building AI features for robotics, autonomous systems, computer vision pipelines, embedded devices, and scenarios where efficiency is a priority. It’s also a good choice for engineers working on the backend of AI frameworks or optimizing model performance.

5. Julia

Julia attracts developers who need speed without giving up ease of use. It was built for scientific computing, which makes it a strong fit for mathematical modeling and AI research. The syntax feels familiar to Python users, but the performance often comes closer to low-level languages, which appeals to teams experimenting with complex numerical tasks.

How is Julia used in AI?

Julia is used for deep learning research, numerical optimization, simulation-based modelling, and machine learning workflows that rely heavily on mathematical precision. It’s also gaining attention in fields like climate modelling, fintech analytics, and large-scale scientific experiments.

Popular Julia libraries for AI

- Flux.jl for deep learning

- Knet.jl for neural networks

- MLJ.jl for machine learning pipelines

- DataFrames.jl for data manipulation

- CUDA.jl for GPU acceleration

- DifferentialEquations.jl for scientific modelling

Why do developers choose Julia?

Julia delivers high performance while keeping the code clean and easy to write. It works well for workloads that mix heavy mathematics with experimentation, and its ability to call Python, R, and C libraries gives developers flexibility without starting from scratch.

What might hold Julia back?

Its ecosystem is smaller than those of more established languages. Some libraries lack the depth found in Python, which can slow things down if you need niche tools or advanced integrations.

Who should pick Julia?

Julia suits researchers, scientists, and engineers working with mathematically intense AI models. If your work involves simulations, optimization problems, or custom deep learning algorithms, Julia gives you speed and clarity in one package.

6. Scala

Scala appeals to developers who work with large datasets and distributed computing. Its blend of functional and object-oriented programming gives teams flexibility, especially when handling complex AI pipelines. Since Scala runs on the JVM, many organizations use it where performance and long-term maintainability matter.

How is Scala used in AI?

Scala is commonly used for big data processing, large-scale machine learning, streaming analytics, recommendation engines, and AI systems built on Apache Spark. It’s a strong choice when your AI workload depends heavily on data pipelines rather than model experimentation alone.

Popular Scala libraries for AI

- Spark MLlib for machine learning at scale

- Breeze for numerical computing

- Smile for ML algorithms

- Akka for concurrency support in distributed systems

- TensorFrames for TensorFlow integration with Spark

Why do developers choose Scala?

Scala fits naturally into environments where data volume is a daily challenge. Its tight integration with Spark makes it easier to build and manage ML workflows that involve huge datasets. Teams that value type safety and structured pipelines often prefer Scala for long-running AI projects.

Potential downsides of using Scala for AI

Scala has a steep learning curve, especially for developers unfamiliar with functional programming. It also doesn’t have as many AI-specific libraries as Python, which can slow early experimentation.

When is Scala a strong option?

Scala suits engineers working with Spark-based AI pipelines, large data infrastructures, and distributed systems. It’s a solid fit if your AI work is deeply tied to data engineering challenges.

7. JavaScript

JavaScript is a practical choice for AI features that need to run inside web applications. Its widespread use on both the client and server side allows developers to build interactive AI tools without switching to another language. With growing support from ML libraries, JavaScript has expanded beyond UI work and now plays a role in lightweight machine learning tasks.

How is JavaScript used in AI?

JavaScript powers browser-based machine learning, on-page predictions, chatbot interfaces, data visualization dashboards, and AI-driven user interactions. It’s also used in Node.js environments for server-side AI tasks and real-time processing in web applications.

Popular JavaScript libraries for AI

- TensorFlow.js for browser and Node.js ML

- Brain.js for neural networks

- ml5.js for creative and beginner-friendly ML

- Synaptic for network architectures

- ConvNetJS for deep learning in the browser

Why do developers choose JavaScript?

JavaScript lets you bring AI features directly to users without needing heavy backend setups. It’s ideal for demos, interactive tools, and apps where instant model feedback is important. Developers who already build web apps can use their existing skill set to add AI elements quickly.

Limitations of JavaScript for AI

JavaScript struggles with large datasets and advanced deep learning workloads. Training complex models in the browser can be slow and inefficient, which is why JavaScript is better for small or moderately sized AI tasks.

Where does JavaScript make sense?

JavaScript suits developers building AI-driven web experiences, browser-based ML tools, interactive prototypes, educational apps, and lightweight inference tasks. It’s especially useful for teams that want to integrate AI without shifting their whole tech stack.

8. Lisp

Lisp has a long history in AI research. Its flexible syntax and symbolic processing capabilities made it a favorite for early AI systems, especially those involving reasoning, rules, and expert-based logic. Even though it isn’t as common as modern languages, Lisp still appeals to developers who want maximum control over how programs interpret and manipulate data structures.

How is Lisp used in AI?

Lisp is used in symbolic reasoning, rule-based systems, automated planning, knowledge representation, and prototype development for specialized AI tools. Its macro system allows developers to create highly customized behaviors that suit complex logical workflows.

Popular Lisp libraries for AI

- CLML for machine learning

- Lisp-Stat for statistics and modelling

- ACL2 for formal reasoning

- paip-lisp for classical AI examples

- CLASP for high-performance Lisp development

Why do developers choose Lisp?

Lisp gives developers exceptional flexibility when working with symbolic data and logic-heavy AI tasks. Its macro features let teams extend the language to fit specific needs, making it useful in research settings where experimentation and problem-specific tools matter.

Where Lisp can feel restrictive

The community is smaller, and modern AI libraries are limited compared to mainstream languages. Finding solutions, tutorials, or updated tools can be more challenging.

Where Lisp makes sense

Lisp is suited for AI researchers working with symbolic systems, developers building expert systems, and teams exploring logic-driven AI workflows. It’s valuable when you need fine-tuned control over how an AI system processes rules and knowledge.

9. Prolog

Prolog is built around logic programming, making it very different from mainstream AI languages. Instead of writing step-by-step instructions, you describe relationships and rules, and the language figures out answers based on those definitions. This makes Prolog useful for tasks that involve reasoning, inference, and structured problem-solving.
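The following Python sketch imitates that idea: the facts and the rule are stated as data, and a small generic loop derives new facts until nothing changes. It uses naive forward chaining, a simplification chosen for illustration; real Prolog answers queries by backward chaining (resolution with backtracking). The `parent` and `grandparent` facts are hypothetical examples.

```python
from itertools import product

# Hypothetical facts stored as (relation, subject, object) triples.
facts = {
    ("parent", "ann", "bob"),
    ("parent", "bob", "cara"),
    ("parent", "bob", "dan"),
}

def grandparent_rule(known):
    """Prolog equivalent: grandparent(X, Z) :- parent(X, Y), parent(Y, Z)."""
    parents = [f for f in known if f[0] == "parent"]
    derived = set()
    for (_, x, y1), (_, y2, z) in product(parents, parents):
        if y1 == y2:  # X is parent of Y, and Y is parent of Z
            derived.add(("grandparent", x, z))
    return derived

def forward_chain(known, rules):
    """Apply every rule repeatedly until no new facts appear (a fixed point)."""
    known = set(known)
    while True:
        new = set()
        for rule in rules:
            new |= rule(known) - known
        if not new:
            return known
        known |= new

def query(known, relation, subject):
    """Return every object o such that relation(subject, o) is known."""
    return sorted(o for (r, s, o) in known if r == relation and s == subject)

all_facts = forward_chain(facts, [grandparent_rule])
print(query(all_facts, "grandparent", "ann"))  # ['cara', 'dan']
```

In Prolog, the rule is the single clause quoted in the docstring, and the search that `forward_chain` and `query` perform by hand is built into the language.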

How is Prolog used in AI?

Prolog is used in expert systems, knowledge representation, natural language understanding, theorem proving, and AI planning. It shines when the problem revolves around rules, constraints, and logical deductions rather than heavy numerical computation.

Popular Prolog systems and libraries for AI

- SWI-Prolog for general AI development

- ECLiPSe for constraint solving

- Definite clause grammars (DCGs) for parsing and understanding language

- CLP(FD) for finite-domain constraint logic

- YAP Prolog for performance-oriented logic programming

Why do developers choose Prolog?

Prolog handles logic-driven problems naturally. You can often express complex relationships in fewer lines of code than imperative languages require. This makes it appealing for AI systems that depend on rules, decisions, and structured reasoning rather than data-heavy methods.

Limitations of using Prolog in modern AI

Prolog isn’t designed for machine learning or deep learning, and it lacks the ecosystem needed for those areas. Developers may also find its logic-based approach unfamiliar, which slows adoption for mainstream AI use cases.

Who should pick Prolog?

Prolog works well for researchers, educators, and teams building reasoning-based AI such as expert systems or planning tools. It’s valuable when your project demands clear rules and logical inference rather than statistical models.

10. Haskell

Haskell appeals to developers who value precision, purity, and mathematical clarity. Its functional programming style encourages clean, predictable code, which can be useful when working on AI systems that require careful reasoning or formal verification. While not mainstream in AI, Haskell attracts engineers who want strong guarantees about how their models and functions behave.

How is Haskell used in AI?

Haskell is used in rule-based systems, probabilistic modelling, formal logic applications, and research projects that involve reasoning or mathematical proofs. It’s also used in developing interpreters, custom AI tools, and experimentation environments for academic work.

Popular Haskell libraries for AI

- HLearn for machine learning

- TensorFlow Haskell bindings for deep learning

- HLearn-probability for statistical modelling

- hmatrix for linear algebra

- AI Planning libraries for search and reasoning tasks

Why do developers choose Haskell?

Haskell’s type system helps catch errors early and encourages clear modelling of AI concepts. Developers working on theoretical or mathematically heavy AI projects appreciate how predictable and structured the language feels. It supports a more formal approach to building and testing ideas.

Challenges you may face with Haskell

Haskell has fewer AI libraries compared to mainstream languages, and its learning curve can feel steep if you’re unfamiliar with functional programming. These factors make it less ideal for teams aiming to build AI products quickly.

Who should consider Haskell?

Haskell fits researchers, academics, and developers exploring AI concepts that benefit from a strong mathematical foundation. It’s a suitable choice when your AI work involves reasoning, logic, or experimentation in functional programming environments.

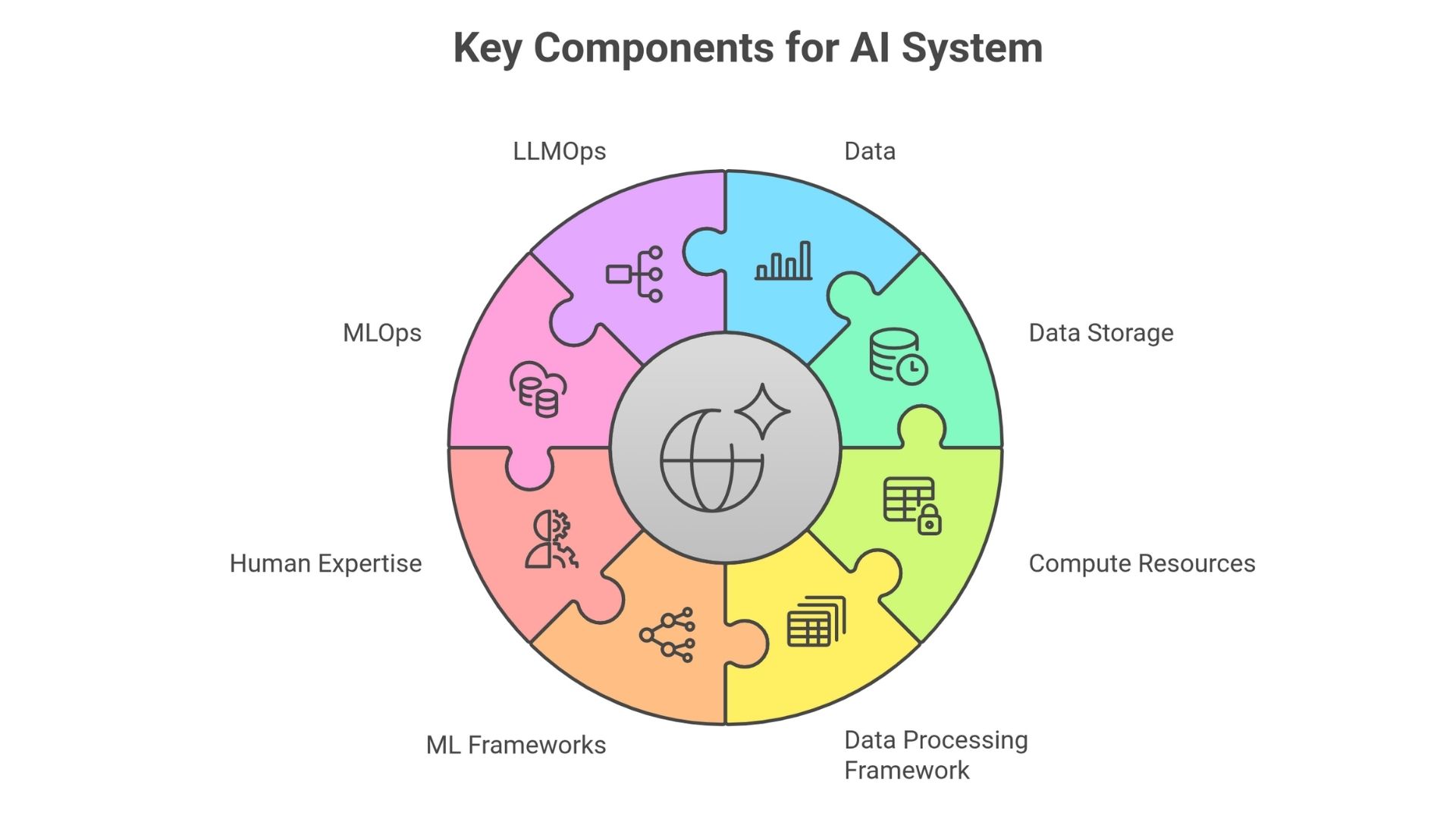

Key Components Required for Building an AI System

Building an AI system means combining multiple moving parts in a way that actually delivers results. Here are the core components you need to get right.

1. High-quality data

Your model’s performance depends on the data you use. Data must be clean, accurately labelled, and truly reflective of the situations the model will face. If the dataset is too small or lacks variety across demographics, inputs, or use cases, your system may struggle when it meets new or different scenarios.
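A quick audit before training can surface some of these problems early. The sketch below assumes records are plain Python dictionaries with a hypothetical `label` field; it counts fields that are present but empty and reports each label’s share of the labeled rows. The `min_share` threshold for flagging rare labels is an arbitrary placeholder, not a recommended value.

```python
from collections import Counter

def audit(records, label_key="label", min_share=0.1):
    """Summarize empty fields and label balance in a list of dict records."""
    missing = Counter()
    labels = Counter()
    for row in records:
        # Count fields that are present but empty (None or empty string).
        for key, value in row.items():
            if value is None or value == "":
                missing[key] += 1
        if row.get(label_key) not in (None, ""):
            labels[row[label_key]] += 1
    total = sum(labels.values())
    shares = {lab: count / total for lab, count in labels.items()} if total else {}
    # Labels below min_share may be too rare for the model to learn well.
    rare = sorted(lab for lab, share in shares.items() if share < min_share)
    return {"missing": dict(missing), "label_shares": shares, "rare_labels": rare}

# Hypothetical sample: one record lacks an "age" value, one lacks a label.
sample = [
    {"age": 34, "label": "approve"},
    {"age": None, "label": "approve"},
    {"age": 51, "label": "deny"},
    {"age": 29, "label": ""},
]
print(audit(sample))
```

Checks like these catch gaps and imbalance, but not whether the data reflects real-world conditions; that still needs domain review.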

2. Dependable data storage and pipelines

Storing and handling large amounts of data is essential. Cloud-based platforms offer flexible storage, support for structured and unstructured data, and security controls that many older systems lack. You’ll want a setup that moves data smoothly into your training and validation workflows, and that depends heavily on the strength of your underlying machine learning infrastructure.
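One useful property of a training and validation pipeline is that each record lands in the same split every time the data is reloaded. The sketch below does this by hashing a stable identifier; the `id` field and the 20% validation fraction are assumptions for illustration.

```python
import hashlib

def split_bucket(record_id, validation_fraction=0.2):
    """Map a stable ID to 'train' or 'validation' deterministically."""
    digest = hashlib.sha256(str(record_id).encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    position = int.from_bytes(digest[:8], "big") / 2**64
    return "validation" if position < validation_fraction else "train"

def split(records, id_key="id", validation_fraction=0.2):
    """Partition records; the same ID always goes to the same side."""
    train, validation = [], []
    for row in records:
        bucket = split_bucket(row[id_key], validation_fraction)
        (validation if bucket == "validation" else train).append(row)
    return train, validation

rows = [{"id": i, "value": i * i} for i in range(1000)]
train, validation = split(rows)
# Sizes should land near an 80/20 split; exact counts depend on the hashes.
print(len(train), len(validation))
```

Compared with shuffling, hashing means that when new records arrive, existing ones never move between splits, so validation results stay comparable across pipeline runs.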

3. Appropriate compute resources

Training AI models puts heavy demand on hardware. CPUs can work for simpler tasks, but for deep learning or large language models you’ll likely need powerful GPUs or TPUs. Choosing less capable hardware can slow down training, delay deployment, and increase cost.

4. Data preprocessing and transformation frameworks

Raw data almost never works out of the box. You’ll need tools or frameworks that clean, transform, and prepare data, handling things like missing values, inconsistent formats, and merging sources. Using frameworks that spread these tasks across multiple machines helps when data size grows.
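Here is a small single-machine sketch of those three chores: normalizing inconsistent date formats, filling missing values, and merging a second source. The records, the two date formats (including the guess that `05/04/2024` is day-first), and the choice of median imputation are all hypothetical; other imputation strategies may suit your data better.

```python
from datetime import datetime
from statistics import median

# Hypothetical raw inputs from two sources.
customers = [
    {"id": 1, "signup": "2024-03-05", "spend": 120.0},
    {"id": 2, "signup": "05/04/2024", "spend": None},
    {"id": 3, "signup": "2024-06-01", "spend": 80.0},
]
regions = {1: "north", 2: "south", 3: "north"}

# Assumed input formats; treating slashed dates as day-first is a guess.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def parse_date(text):
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def clean(rows, region_by_id):
    """Normalize dates, impute missing spend with the median, and join regions."""
    known_spend = [r["spend"] for r in rows if r["spend"] is not None]
    fill_value = median(known_spend)
    cleaned = []
    for r in rows:
        cleaned.append({
            "id": r["id"],
            "signup": parse_date(r["signup"]),
            "spend": r["spend"] if r["spend"] is not None else fill_value,
            "region": region_by_id.get(r["id"], "unknown"),
        })
    return cleaned

for row in clean(customers, regions):
    print(row)
```

Distributed frameworks apply the same kinds of steps, but partition the rows across machines instead of looping over them in one process.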

5. Machine learning libraries and frameworks

Rather than building everything from scratch, you benefit from libraries and frameworks that provide tested modules for model building, training, and evaluation. These let you focus more on solving your specific problem and less on reinventing infrastructure.

6. Human expertise

Even with the right tools, you need people who know how to apply them. A project team should cover data engineers, data scientists, ML engineers, domain experts, and possibly DevOps to manage the full lifecycle from model design to deployment and monitoring.

7. MLOps workflows

Once your model is built, it must be maintained. MLOps supports this by setting up pipelines for versioning, deployment, monitoring, and retraining. Without this, models often degrade or fail when conditions change or new data arrives.
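Monitoring is the piece that tells you when retraining is due. The sketch below assumes the true outcome for each prediction eventually becomes known, tracks accuracy over a sliding window, and raises a flag once it falls more than a tolerance below the accuracy measured at deployment. The class name, baseline, tolerance, and window size are hypothetical; production setups usually watch input drift and other signals as well.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over a sliding window and flag when retraining looks due."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline    # accuracy measured at deployment time
        self.tolerance = tolerance  # allowed drop before raising a flag
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        current = self.accuracy()
        # Wait for a full window so a few early errors do not trigger a flag.
        if current is None or len(self.outcomes) < self.outcomes.maxlen:
            return False
        return current < self.baseline - self.tolerance

monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
# Hypothetical stream: the model is right at first, then conditions change.
for i in range(10):
    monitor.record(prediction="spam", actual="spam" if i < 7 else "ham")
print(monitor.accuracy(), monitor.needs_retraining())  # 0.7 True
```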

8. LLMOps for large-scale language models

If you’re working with large language or generative models, you’ll need tooling for fine-tuning, serving, inference tracking, and governance. These capabilities, often grouped under the name LLMOps, help keep your model effective, safe, and aligned with your business use case.
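Inference tracking can start as simply as wrapping every model call and recording what went in, what came out, and how long it took. In the sketch below, `fake_model` is a hypothetical stand-in for a real LLM client, and sizes are measured in characters rather than tokens to avoid assuming any particular tokenizer.

```python
import time
from dataclasses import dataclass, field

@dataclass
class InferenceLog:
    """Collects one entry per model call for later review."""
    entries: list = field(default_factory=list)

    def track(self, model_fn, prompt, **metadata):
        """Call model_fn(prompt), record size and latency, return the response."""
        start = time.perf_counter()
        response = model_fn(prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        self.entries.append({
            "prompt_chars": len(prompt),
            "response_chars": len(response),
            "latency_ms": latency_ms,
            **metadata,  # e.g. model version, for comparing releases
        })
        return response

def fake_model(prompt):
    """Hypothetical stand-in for a real LLM client call."""
    return prompt.upper()

log = InferenceLog()
reply = log.track(fake_model, "summarize this ticket", model_version="v1")
print(reply, log.entries[0]["model_version"])
```

Storing the model version with each entry makes it possible to compare latency and output size before and after a fine-tuning run.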

Conclusion

The choice of programming language for AI development depends on several factors, including project requirements, team expertise, and performance needs. Python's versatility and extensive ecosystem make it a popular choice for many AI projects. However, C++ may be more suitable for computationally intensive tasks, and Java for large-scale enterprise applications.

As the field of AI continues to evolve, new languages and frameworks may emerge, offering unique advantages. It's essential to stay updated with the latest trends and evaluate the most appropriate language for your specific AI endeavors.