Full Guide to Artificial Neural Networks & Their Applications

Introduction

Neural networks have transformed how we approach problem-solving in computing. These brain-inspired systems power everything from the voice assistants on our phones to the recommendation algorithms that suggest what we might want to watch next. But what exactly are they, and how do they work their magic?

What Exactly Is an Artificial Neural Network?

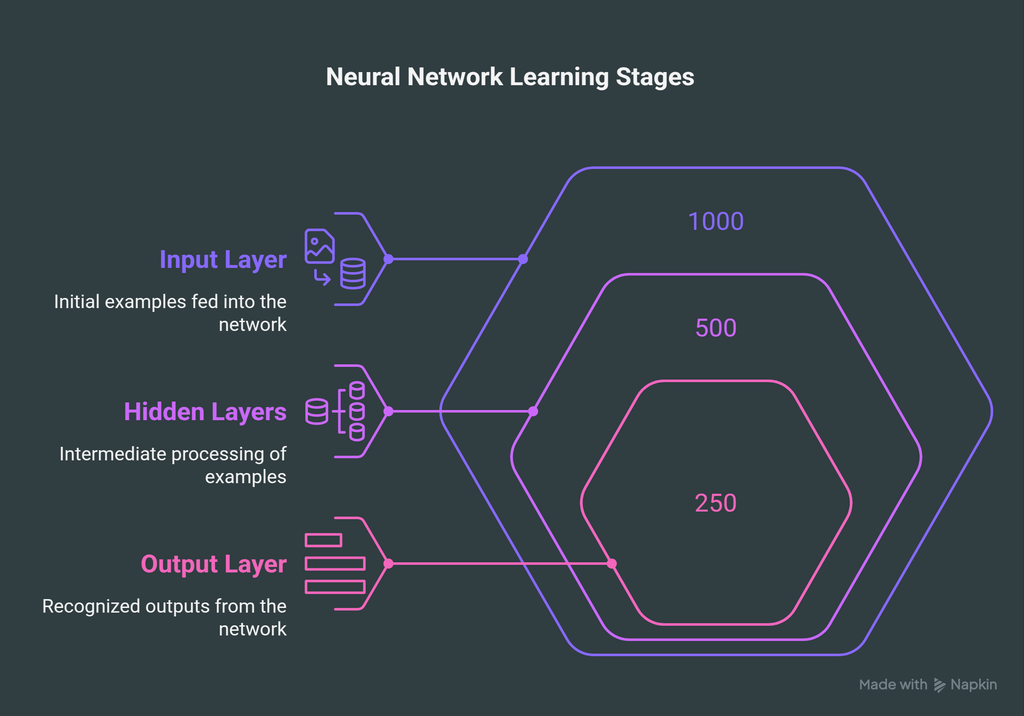

At its core, an artificial neural network (ANN) is a system loosely inspired by how the human brain processes information. Think of it as a rough digital analogy for how we learn, remember, and make decisions. It’s made up of layers of nodes (you can think of them as artificial "neurons") that pass information to one another. Each node takes some inputs, combines them into a weighted sum, applies a simple function to the result, and passes it on to the next layer.

The more layers and nodes an ANN has, the more complex the patterns it can learn, given enough data. That’s where the term "deep learning" comes in: it simply refers to neural networks with many layers. It’s like giving the system more brainpower to understand trickier problems.

Why Are They Important in Modern Computing?

Neural networks are essential because:

- Adaptability – They learn from data and can improve as they see more examples.

- Efficiency – Once trained, they can process vast amounts of data quickly, especially on specialized hardware such as GPUs.

- Versatility – They are applied across nearly every industry.

- Human-like Reasoning – They enable machines to interpret speech, images, and text in ways that approach human performance on some tasks.

- Foundation of Deep Learning – Neural networks are the building blocks of deep learning, the subset of machine learning behind much of modern AI.

How Do Neural Networks Learn?

You feed the network a huge pile of examples. Let’s say you want it to recognize handwritten numbers. You show it thousands of images labeled with what number they represent. The network makes a guess, compares it to the correct answer, and then tweaks its internal settings a little bit to do better next time. That process happens over and over again until the system gets pretty good at recognizing those numbers.

This trial-and-error method is powered by something called "backpropagation," which is basically the network learning from its mistakes. Each connection between neurons has a "weight" that strengthens or weakens the signal. Backpropagation works out how much each weight contributed to the error, and the network then adjusts its weights to shrink that error, gradually figuring out which connections matter most for getting the right answer.

The Learning Process Step by Step

1. Forward Propagation

Forward propagation is the first step in how a neural network processes data.

How it works:

- Input data flows through the network layer by layer.

- Each neuron multiplies its inputs by weights, adds a bias, and passes the result through an activation function like ReLU, sigmoid, or tanh.

- The output is a prediction (e.g., is this a cat or a dog?).
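To make these steps concrete, here is a minimal sketch of forward propagation in plain Python for a tiny network with two inputs, two hidden ReLU neurons, and one sigmoid output neuron. The weights and biases are made-up values chosen by hand for illustration; in a real network they would be learned. The helper names (`dense`, `forward`) are hypothetical.

```python
import math


def relu(z: float) -> float:
    """ReLU activation: passes positive values through, clips negatives to 0."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Sigmoid activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes a weighted sum of the
    inputs, adds its bias, and applies the activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(x, params):
    """Pass the input through a hidden ReLU layer, then a sigmoid output neuron."""
    hidden = dense(x, params["W1"], params["b1"], relu)
    output = dense(hidden, params["W2"], params["b2"], sigmoid)
    return output[0]  # a probability-like score, e.g. "how likely is this a cat?"


# Hand-picked, purely illustrative parameters (not learned).
params = {
    "W1": [[0.5, -0.25], [0.1, 0.2]],  # 2 hidden neurons x 2 inputs
    "b1": [0.0, 0.1],
    "W2": [[1.0, 2.0]],                # 1 output neuron x 2 hidden neurons
    "b2": [-1.2],
}

print(forward([1.0, 2.0], params))  # 0.5 for these particular weights
```

With these weights the first hidden neuron's sum is exactly 0, so ReLU switches it off, and the output neuron's sum also lands on 0, which the sigmoid maps to 0.5.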

2. Loss Functions – Measuring the Mistake

Once the network makes a prediction, it needs to know how wrong it was.

- A loss function quantifies the error between the predicted output and the actual output.

- The goal: Minimize the loss so the predictions get better.

Common Loss Functions:

- Mean Squared Error (MSE) – for regression problems

- Cross-Entropy Loss – for classification problems
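Both loss functions are short formulas. The sketch below implements them in plain Python: MSE over a small batch of numeric predictions, and cross-entropy for a single example with a one-hot label and predicted class probabilities. The function names and the small `eps` guard against `log(0)` are illustrative choices, not taken from any particular library.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of squared differences; typical for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_entropy(y_true_one_hot, y_pred_probs, eps=1e-12):
    """Categorical cross-entropy for one example.

    y_true_one_hot: e.g. [0, 1, 0] means the correct class is index 1.
    y_pred_probs:   predicted probabilities that sum to 1 (e.g. a softmax output).
    Only the probability assigned to the correct class contributes.
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true_one_hot, y_pred_probs))


# Regression: two predicted values compared with their true values.
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))  # 0.25

# Classification: a confident correct guess has a lower loss than a wrong one.
confident = cross_entropy([0, 1, 0], [0.1, 0.8, 0.1])
wrong = cross_entropy([0, 1, 0], [0.7, 0.2, 0.1])
print(confident < wrong)  # True
```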

3. Backpropagation – Learning From the Mistake

Backpropagation is where the network actually learns.

- It calculates how much each weight contributed to the error (the gradient of the loss with respect to that weight).

- Then, the weights are adjusted in the opposite direction of the gradient to reduce the error.

This is done using a bit of calculus (derivatives), but the idea is simple:

"Change the weights just enough to make the next prediction better."

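Here is what that "bit of calculus" looks like in the smallest possible case: one sigmoid neuron with one weight and one bias, trained on a single example with a squared-error loss. The backward pass applies the chain rule by hand, and a finite-difference check confirms the gradient. This is a simplified sketch with illustrative values; real networks apply the same chain rule through many layers, usually via the automatic differentiation built into a framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_gradients(w, b, x, y):
    """Forward pass, then backpropagate the error to w and b with the chain rule."""
    # Forward pass
    z = w * x + b
    p = sigmoid(z)
    loss = (p - y) ** 2

    # Backward pass: dL/dw = dL/dp * dp/dz * dz/dw
    dL_dp = 2.0 * (p - y)
    dp_dz = p * (1.0 - p)  # derivative of the sigmoid
    dL_dz = dL_dp * dp_dz
    dL_dw = dL_dz * x      # dz/dw = x
    dL_db = dL_dz          # dz/db = 1
    return loss, dL_dw, dL_db


def numerical_dw(w, b, x, y, h=1e-6):
    """Central finite difference, used only to sanity-check the analytic gradient."""
    loss_plus, _, _ = loss_and_gradients(w + h, b, x, y)
    loss_minus, _, _ = loss_and_gradients(w - h, b, x, y)
    return (loss_plus - loss_minus) / (2 * h)


w, b, x, y = 0.5, 0.0, 2.0, 1.0  # illustrative starting weight, bias, input, target
learning_rate = 0.5

loss, dw, db = loss_and_gradients(w, b, x, y)
print(abs(dw - numerical_dw(w, b, x, y)) < 1e-6)  # True: the chain rule matches

# Move each parameter a small step against its gradient
w -= learning_rate * dw
b -= learning_rate * db
new_loss, _, _ = loss_and_gradients(w, b, x, y)
print(new_loss < loss)  # True: the prediction moved closer to the target
```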
4. Optimization Techniques – Fine-Tuning the Network

Backpropagation tells us what direction to go, but optimization techniques tell us how fast and how far to move.

1. Gradient Descent

- The most basic optimization algorithm.

- Repeatedly adjusts the weights in the direction of steepest descent, opposite to the gradient, so the error goes down.

But it can be slow, get stuck in local minima, or oscillate if the learning rate (the step size) is poorly chosen.
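A minimal sketch of plain gradient descent, assuming the simplest possible model: a straight line `y = w * x + b` fitted to five points that lie exactly on `y = 2x + 1`, using mean squared error as the loss. The learning rate and number of steps are illustrative values, not recommendations.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0  # start from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = mean(error^2) with respect to w and b
        dw = sum(2 * e * x for e, x in zip(errors, xs)) / n
        db = sum(2 * e for e in errors) / n
        # Step in the direction of steepest descent (the negative gradient)
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # points on the line y = 2x + 1

w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

On this particular data, raising `learning_rate` above roughly 0.15 makes each update overshoot the minimum by more than the last, so the weights oscillate with growing size instead of converging.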

2. Adam Optimizer (Adaptive Moment Estimation)

- A more advanced technique that combines:

  - Momentum (a running average of past gradients)

  - Adaptive learning rates (different step sizes for different weights)

- In practice it often converges faster and needs less learning-rate tuning than basic gradient descent.
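The Adam update rule itself fits in a few lines. The sketch below follows its commonly published form (running averages of the gradient and of the squared gradient, bias correction, defaults `beta1=0.9`, `beta2=0.999`, `eps=1e-8`) and applies it to a made-up two-parameter, bowl-shaped loss. The learning rate of 0.01 and the test function are illustrative assumptions; in practice you would use a framework's built-in Adam optimizer rather than write your own.

```python
import math


def adam_minimize(grad_fn, params, learning_rate=0.01, beta1=0.9,
                  beta2=0.999, eps=1e-8, steps=3000):
    """Minimize a function given its gradient, using the Adam update rule."""
    params = list(params)
    m = [0.0] * len(params)  # momentum: running average of gradients
    v = [0.0] * len(params)  # running average of squared gradients
    for t in range(1, steps + 1):
        grads = grad_fn(params)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            # Bias correction: m and v start at 0, so early averages are too small
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Dividing by sqrt(v_hat) gives each parameter its own effective step size
            params[i] -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return params


def bowl_loss(p):
    """A made-up loss whose minimum is at (3, -1); the second direction is steeper."""
    x, y = p
    return (x - 3) ** 2 + 10 * (y + 1) ** 2


def bowl_grad(p):
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]


start = [0.0, 0.0]
result = adam_minimize(bowl_grad, start)
print(bowl_loss(start), bowl_loss(result))  # the loss drops from 19.0 to near 0
```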

Getting Started with Neural Networks

If you're curious about experimenting with neural networks yourself, there are plenty of accessible entry points:

Common Types of Neural Networks

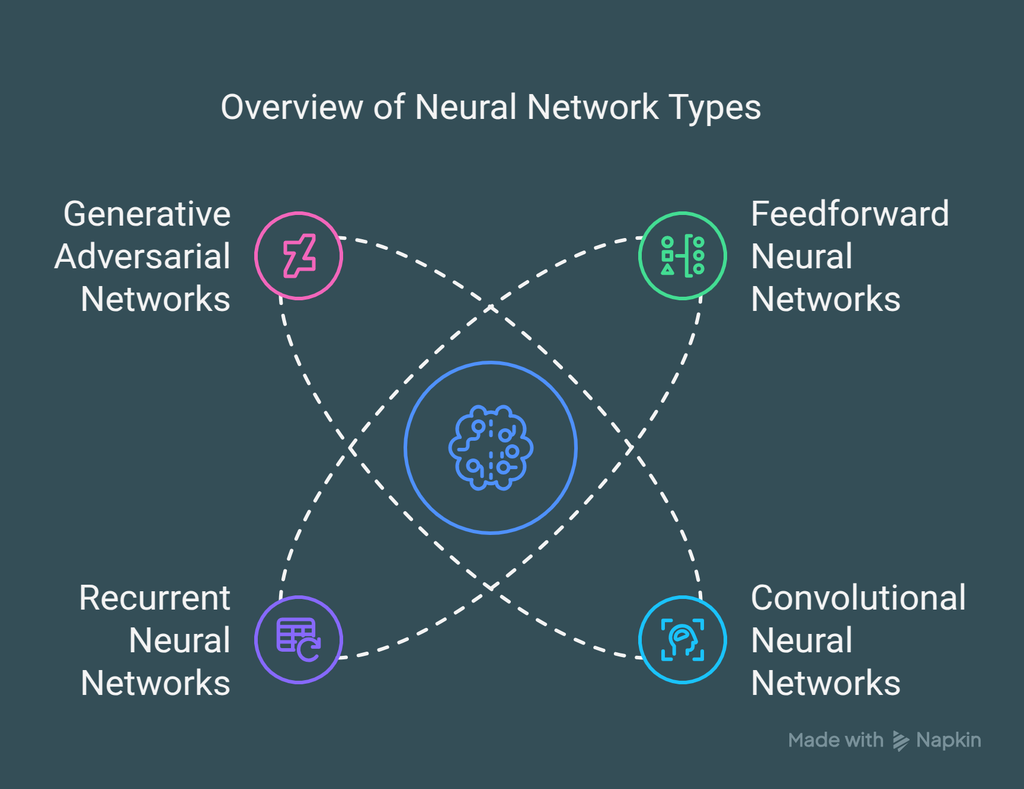

Neural networks aren’t one-size-fits-all. Here are a few of the main types and what they’re typically used for:

1. Feedforward Neural Networks (FNNs)

These are the simplest kind. Data flows in one direction, from input to output, with no loops and no going back. They are used for basic classification tasks, like deciding whether an email is spam.

2. Convolutional Neural Networks (CNNs)

These are the go-to for anything involving images. CNNs have special layers that can detect features like edges, shapes, and textures. That’s what powers facial recognition, object detection, and even self-driving car vision systems.
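The "feature detectors" in a CNN are small grids of weights (kernels) slid across the image. The plain-Python sketch below uses a hand-made 2x2 kernel that responds to a vertical edge where pixel values jump from dark (0) to bright (1). In a real CNN the kernel values are learned rather than chosen by hand. Like most deep learning libraries, this computes cross-correlation (the kernel is not flipped), which is what CNN layers commonly call "convolution".

```python
def conv2d(image, kernel):
    """Slide the kernel over the image ("valid" mode: no padding, stride 1)
    and record the weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for a in range(kh):
                for b in range(kw):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


# A tiny 3x4 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-made vertical-edge detector: responds where values increase left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

for row in conv2d(image, kernel):
    print(row)
# [0, 2, 0]
# [0, 2, 0]
```

The strong response sits exactly in the middle column, where the dark and bright regions meet; flat regions produce 0.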

3. Recurrent Neural Networks (RNNs)

These networks are all about sequences. They carry a hidden state, a kind of short-term memory of past inputs, which helps them make better guesses about what comes next. They have been widely used for language translation, speech recognition, and even attempts at predicting stock prices.
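The "memory" in an RNN is a hidden state that is updated at every step from the current input and the previous state. The sketch below uses a single hidden unit with scalar inputs and hand-picked weights (`w_x`, `w_h`, and `b` are illustrative, not learned) to show that an input early in the sequence still affects the state at the end. Real RNNs use vectors of hidden units and weight matrices instead of single numbers.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a one-unit RNN over a sequence and return the hidden state after each step.

    At every step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)  # new state mixes input and memory
        states.append(h)
    return states


# Two sequences that differ only in their FIRST element.
with_signal = rnn_forward([1.0, 0.0, 0.0], w_x=0.5, w_h=0.8, b=0.0)
without_signal = rnn_forward([0.0, 0.0, 0.0], w_x=0.5, w_h=0.8, b=0.0)

# The early input is still "remembered" two steps later, though it fades.
print(with_signal[-1] > without_signal[-1])  # True
```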

4. Generative Adversarial Networks (GANs)

Ever heard of AI-generated art? Many early examples came from GANs. These networks are actually two systems in one: a generator that creates fake data and a discriminator that tries to spot the fakes. Together, they push each other to get better.
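For readers who want the math behind "push each other to get better": in the standard GAN formulation, the discriminator D outputs the probability that its input is real, the generator G turns random noise z into fake samples, and the two play a minimax game over a single value function:

```latex
\min_{G} \max_{D} \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D tries to make this value large by scoring real data near 1 and fakes near 0, while G tries to make it small by producing fakes that D scores near 1.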

Training an Artificial Neural Network (ANN): Step-by-Step

Training an Artificial Neural Network is the core of building an intelligent system. It’s where the model moves from random guesses to making smart, data-driven predictions. Whether you're recognizing images, classifying emails as spam, or predicting stock prices, the training process remains fairly consistent. Let’s break it down.


1. Data Preprocessing – Preparing the Input

Before feeding any data into a neural network, it must be clean, consistent, and formatted correctly. Think of it like training a student: you wouldn’t teach from a messy, incomplete textbook. The model is no different.

Key steps in data preprocessing:

- Handling Missing Values: Most neural networks can’t process missing or null values directly. These can be filled in (imputed) using the mean or median, or the affected rows can be removed.

- Normalization/Standardization: Neural networks perform better when inputs are within a similar scale. Normalization brings data to a range (typically 0–1), while standardization transforms data to have a mean of 0 and a standard deviation of 1.

- Categorical Data Encoding: Neural networks work with numbers, not text. So, labels like "Male" or "Female" are converted into numbers using techniques like label encoding or one-hot encoding.

- Splitting the Data: To detect overfitting and estimate real-world performance, we split data into:

  - Training set: Used to train the model (usually 70–80%).

  - Validation set: Optional, used to tune hyperparameters (such as the learning rate or number of layers) and to monitor for overfitting during training.

  - Test set: Used once at the end to evaluate the model’s performance on unseen data.
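The preprocessing steps above can be sketched in plain Python. This is a minimal, hypothetical example (the helper names and toy data are made up): mean imputation for missing numbers, min-max normalization and standardization, one-hot encoding for a text category, and a shuffled 70/15/15 train/validation/test split. Libraries such as scikit-learn and pandas provide tested versions of each step. For simplicity this sketch computes statistics on the full column; in practice they are usually computed on the training split only, to avoid leaking information from the test set.

```python
import random
import statistics


def impute_mean(column):
    """Replace None (missing) entries with the mean of the known values."""
    known = [v for v in column if v is not None]
    mean = statistics.fmean(known)
    return [mean if v is None else v for v in column]


def min_max_normalize(column):
    """Rescale values to the range 0-1."""
    lo, hi = min(column), max(column)
    return [(v - lo) / (hi - lo) for v in column]


def standardize(column):
    """Shift to mean 0 and scale to standard deviation 1."""
    mu = statistics.fmean(column)
    sigma = statistics.pstdev(column)
    return [(v - mu) / sigma for v in column]


def one_hot(labels):
    """Turn text categories into 0/1 vectors, one position per category."""
    categories = sorted(set(labels))
    vectors = [[1 if label == c else 0 for c in categories] for label in labels]
    return vectors, categories


def split_data(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle, then cut into training, validation, and test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train_frac)
    n_val = round(len(rows) * val_frac)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]


ages = impute_mean([25.0, None, 35.0])      # [25.0, 30.0, 35.0]
print(min_max_normalize(ages))              # [0.0, 0.5, 1.0]
print(one_hot(["Male", "Female", "Male"]))  # ([[0, 1], [1, 0], [0, 1]], ['Female', 'Male'])

train, val, test = split_data(range(20))
print(len(train), len(val), len(test))      # 14 3 3
```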

2. Building the Neural Network Model

Once the data is ready, we define the architecture of the neural network. This means deciding how many layers, what type of layers, how many neurons, and what activation functions to use.

Components of the model:

- Input Layer: Receives the data. Its size matches the number of input features.

- Hidden Layers: These are where the real "learning" happens. You can have one or many (in deep learning, you often have dozens or hundreds).

- Output Layer: Produces the final result. For binary classification, it’s usually one neuron with a sigmoid activation. For multi-class classification, it uses softmax.

- Activation Functions: These introduce non-linearity so the network can learn complex patterns. Common choices include:

  - ReLU (Rectified Linear Unit) – Default choice for hidden layers

  - Sigmoid – For binary outputs; squashes values into the range 0 to 1

  - Tanh – Like sigmoid but centered around 0, with outputs between -1 and 1
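Putting those choices together, the sketch below builds a small fully connected network from a list of layer sizes: the first number matches the input features, the last matches the number of classes, ReLU is used in the hidden layers, and softmax turns the final scores into class probabilities. The layer sizes are arbitrary, and the random initialization (He-style scaling, a common pairing with ReLU) is an assumption for illustration. The weights are untrained, so the probabilities mean nothing until the network is trained.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 (multi-class output)."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_network(layer_sizes, seed=0):
    """Create random weights and zero biases for each pair of adjacent layers.

    layer_sizes: e.g. [4, 8, 3] = 4 input features, one hidden layer of 8, 3 classes.
    """
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        std = math.sqrt(2.0 / n_in)  # He-style initialization, often used with ReLU
        weights = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def predict(layers, x):
    """ReLU in hidden layers, softmax at the output layer."""
    activations = x
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, activations)) + b
             for row, b in zip(weights, biases)]
        is_output_layer = index == len(layers) - 1
        activations = softmax(z) if is_output_layer else [max(0.0, v) for v in z]
    return activations


network = build_network([4, 8, 3])
probs = predict(network, [0.2, -1.0, 0.5, 0.3])
print(len(probs), round(sum(probs), 6))  # 3 1.0
```

For a binary classifier, the last layer would instead be a single neuron with a sigmoid activation.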

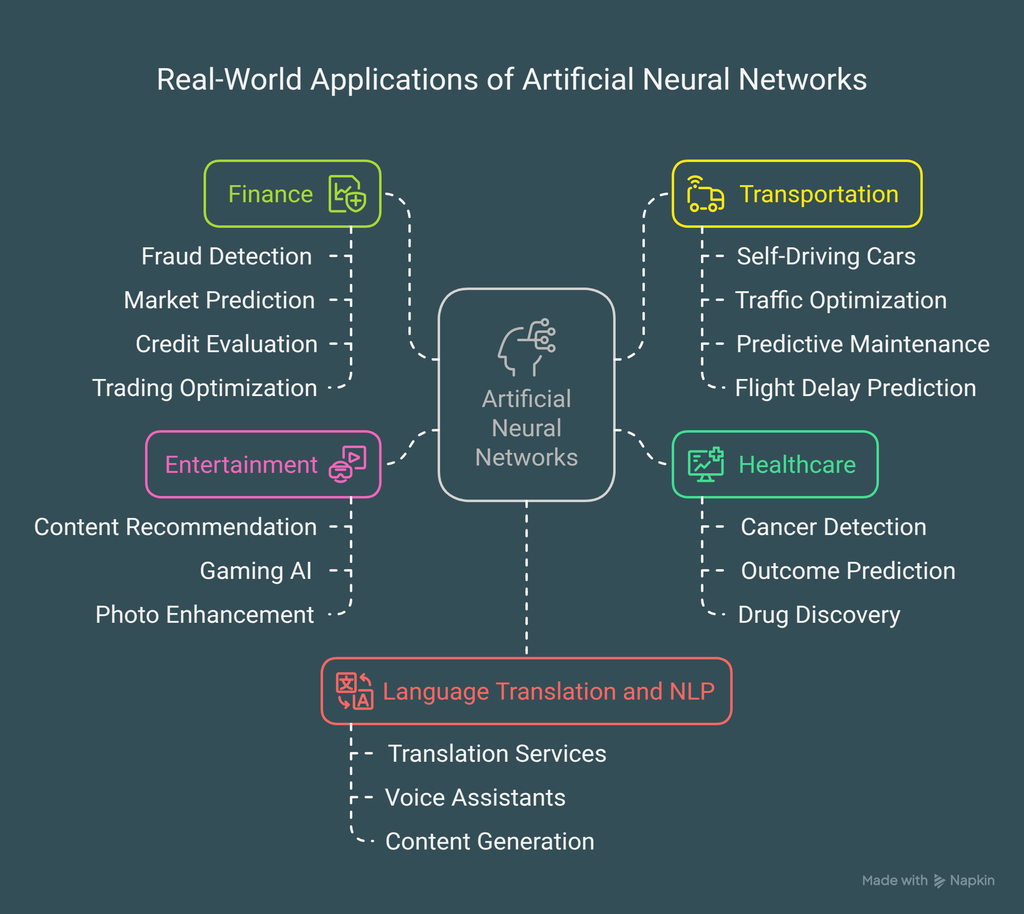

Real-World Applications of Artificial Neural Networks

1. Healthcare

Neural networks are transforming medical diagnosis. They can:

For example, researchers at Stanford University developed a CNN that classified skin cancer from images with accuracy comparable to dermatologists on the tasks they tested.

2. Finance

In the financial world, neural networks:

3. Transportation

The transportation sector uses neural networks for:

4. Entertainment and Recommendations

Those eerily accurate suggestions on streaming services? That's neural networks at work:

- Netflix, Spotify, and YouTube use them to predict what content you'll enjoy

- Gaming AI creates more realistic non-player characters

- Photo filters automatically enhance your snapshots

5. Language Translation and NLP

Neural networks have revolutionized how computers understand and generate language:

Challenges and Limitations

Despite their power, neural networks aren't without their challenges:

1. Need for Large Datasets: Most neural networks need massive amounts of labeled data to train effectively.

2. Computational Resources: Training complex networks requires significant computing power and energy.

3. Black Box Problem: It's often difficult to understand exactly how a neural network reaches its conclusions, making them problematic for applications where explainability is crucial.

4. Bias and Fairness: Networks can inherit biases present in their training data, potentially leading to unfair or discriminatory outcomes.

The Future of Neural Networks

The field continues to advance at a breathtaking pace. Emerging trends include:

1. More Efficient Architectures: Researchers are designing networks that need less data and computing power.

2. Neuromorphic Computing: Hardware specifically designed to run neural networks more efficiently.

3. Multimodal Learning: Networks that can process different types of data simultaneously (like text, images, and sound).

4. Few-Shot Learning: Teaching networks to learn from just a handful of examples, more like how humans learn.

Conclusion

Artificial neural networks have come a long way from their theoretical beginnings. They've become an indispensable tool in tackling complex problems across virtually every industry. As they continue to evolve and computing power increases, we can expect these versatile systems to find even more applications, further transforming how we work, play, and live.

Whether you're a developer looking to implement machine learning in your next project, a business leader exploring new technological horizons, or simply someone curious about this fascinating field, understanding neural networks provides valuable insight into one of the defining technologies of our time.