What Is an Autoregressive Language Model? What Are Its Types and Uses?

What is an Autoregressive Language Model?

If you’ve ever wondered how AI is able to generate text that seems so natural, or how your phone predicts the next word you want to type, you’ve encountered the magic of autoregressive language models (ARLMs). In this blog, we will break down the concept of autoregressive language models, explain how they work, explore their different types, and provide examples of their real-world applications.

By the end of this post, you’ll have a solid understanding of autoregressive language models and their significance in the world of artificial intelligence.

Simply put, an autoregressive language model is a type of AI model that generates text by predicting one word (more precisely, one token) at a time based on the words that came before it. The word "autoregressive" itself hints at how it works: 'auto' means "self," and 'regressive' comes from regression, the statistical idea of predicting one value from other values. So, an autoregressive model predicts the next word from its own earlier output, that is, by looking back at the previously generated text.

Let’s break it down with an example:

Imagine you’re typing a message, and you’ve already written the words "I love." Now, based on these two words, an autoregressive model tries to predict the next word, which might be "you" or "chocolate." The model uses probabilities to decide which word is most likely to follow the given context.

Every new word it generates is based on all the words before it, one word at a time.
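To make the "I love ..." example concrete, here is a minimal sketch in Python. The counts are made up for illustration (they are not from any real dataset), and `next_word_probabilities` is a hypothetical helper name. A real model computes these probabilities with a neural network rather than a lookup table.

```python
from collections import Counter

# Hypothetical counts of the words seen right after "I love" in some text.
# These numbers are invented purely for illustration.
continuation_counts = Counter({"you": 6, "chocolate": 3, "pizza": 1})

def next_word_probabilities(counts):
    """Turn raw counts into a probability for each candidate next word."""
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

probs = next_word_probabilities(continuation_counts)

# The most likely continuation is the word with the highest probability.
best_word = max(probs, key=probs.get)

print(probs)
print("I love", best_word)
```

With these toy counts, "you" ends up as the most likely next word simply because it appeared most often after "I love."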

Key Characteristics of Autoregressive Models:

- Sequential: They generate text step by step, predicting one word (or token) at a time.

- Context-dependent: Every prediction depends on the previously generated words.

- Probabilistic: They assign a probability to each possible next word, then either choose the most likely one or sample a word according to those probabilities.
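The "probabilistic" characteristic can be acted on in more than one way. The sketch below contrasts two common strategies: always taking the top word (often called greedy decoding) and drawing a word at random in proportion to its probability (sampling). The probabilities and the function names `greedy_pick` and `sample_pick` are assumptions made for this example.

```python
import random

def greedy_pick(probs):
    """Always return the single most likely word."""
    return max(probs, key=probs.get)

def sample_pick(probs, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical next-word probabilities after the words "I love".
probs = {"you": 0.6, "chocolate": 0.3, "pizza": 0.1}

rng = random.Random(0)  # fixed seed so repeated runs give the same draws
greedy = greedy_pick(probs)
samples = [sample_pick(probs, rng) for _ in range(10)]

print("greedy: ", greedy)
print("sampled:", samples)
```

Greedy picking gives the same answer every time, while sampling lets less likely words appear now and then, which is one reason generated text can vary from run to run.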

Types of Autoregressive Language Models

Autoregressive models are not a single tool. They have been built on several architectures, some of which overlap (GPT, for instance, is itself a transformer). Here are the major types:

GPT (Generative Pre-trained Transformer):

GPT models, like GPT-3, are among the most popular examples of autoregressive models. These models are trained on large datasets and can generate human-like text based on prompts. GPT models are versatile and have been used in a variety of applications, from writing essays to creating dialogue for chatbots.

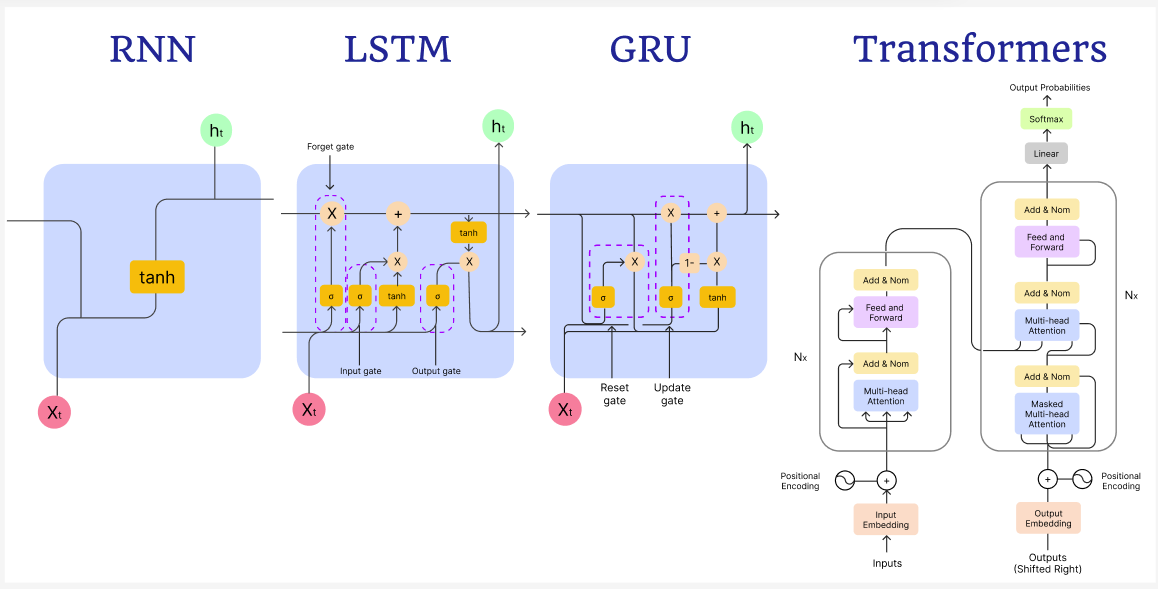

RNN (Recurrent Neural Networks):

RNNs are one of the earliest types of autoregressive models used in natural language processing (NLP). They work well with sequential data but have some limitations, especially when dealing with long sentences or paragraphs because they struggle to remember information from earlier parts of the text.
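For intuition about why early context fades, here is a toy, single-number version of the RNN update. It is a sketch only: the weights `w_hidden` and `w_input` are arbitrary values chosen for the example, and a real RNN uses learned weight matrices over vectors rather than single numbers.

```python
import math

def rnn_step(hidden, x, w_hidden=0.5, w_input=1.0):
    """One recurrent update: mix the old hidden state with the new input."""
    return math.tanh(w_hidden * hidden + w_input * x)

# A signal arrives at the first step, followed by four steps of "nothing".
inputs = [1.0, 0.0, 0.0, 0.0, 0.0]

hidden = 0.0
history = []
for x in inputs:
    hidden = rnn_step(hidden, x)
    history.append(hidden)

# The trace of the first input shrinks at every later step.
print([round(h, 3) for h in history])
```

The hidden state is the only memory the network carries forward, so whatever it stores about an early word gets squeezed a little more with each new step.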

LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit):

LSTM and GRU are improvements over RNNs, designed to solve the problem of "forgetting" earlier context. They are often used in tasks that involve sequential data, like speech recognition, language translation, and text generation.

Transformer Models:

Transformers, especially in their autoregressive (decoder-only) form, have become the go-to architecture for language tasks. They train faster than RNNs or LSTMs because they can process all the words of a training sequence in parallel rather than strictly one after another. When generating text, though, they still produce one token at a time. The most famous transformer-based autoregressive models are those in the GPT series.
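One way to picture how a transformer can look at a whole sequence at once and still be autoregressive is the causal mask: each position is only allowed to "see" itself and the positions before it. The sketch below builds such a mask with plain Python lists. The function name `causal_mask` and the three-word sentence are choices made for this illustration, and real implementations apply the mask inside the attention computation using tensors.

```python
def causal_mask(seq_len):
    """mask[i][j] is True when position i may look at position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

tokens = ["I", "love", "chocolate"]
mask = causal_mask(len(tokens))

# Show which words each position is allowed to use as context.
for i, row in enumerate(mask):
    visible = [tokens[j] for j, allowed in enumerate(row) if allowed]
    print(tokens[i], "can see", visible)
```

Because no position can peek at later words, every row of the mask corresponds to a valid "predict the next word from what came before" problem.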

How Does an Autoregressive Model Work?

Let’s take a deeper dive into the mechanics of how autoregressive models operate. While the technicalities can get quite complex, the basic process can be simplified into these steps:

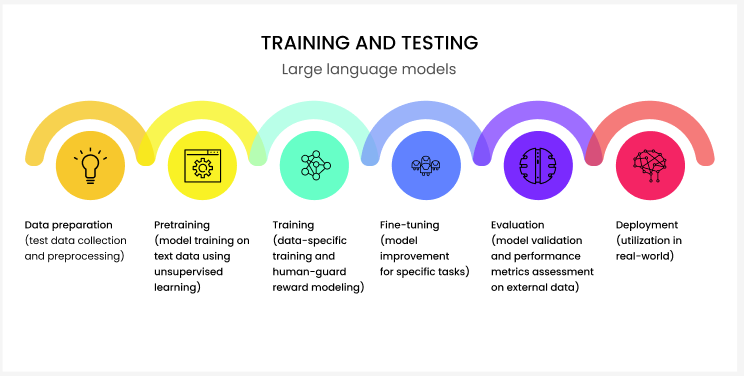

- Training on Text Data: Autoregressive models are trained on vast amounts of text data. During training, the model learns patterns, grammar, context, and even the relationships between words. It analyzes sequences of text and adjusts its internal parameters to better predict the next word in the sequence.

- Generating Text: When it’s time to generate text, the model uses what it learned during training. You give it a starting point (called a "prompt"), and it predicts the next word. After generating the first word, it updates the context and predicts the next word based on the new sequence, and so on, until it produces a complete sentence, paragraph, or even an entire article.

For example, if you give a GPT model the prompt "Once upon a time," it might generate a fairy tale based on that starting text.
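The two steps above, training and generating, can be sketched with a very small stand-in for a real model. The code below "trains" by counting which word follows which in a tiny made-up corpus, then generates text from a prompt one word at a time. This is a deliberate simplification: it only looks at the single previous word, whereas models like GPT condition on the whole preceding context. The names `train_bigram` and `generate` and the corpus itself are assumptions for this example.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """'Training': count how often each word follows each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_words, rng):
    """Extend the prompt one word at a time, feeding each new word back in."""
    output = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything in training
        words = list(followers)
        weights = [followers[word] for word in words]
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)

# Tiny invented corpus, just enough to show the loop.
corpus = "once upon a time there was a king . once upon a time there was a queen ."
model = train_bigram(corpus)

story = generate(model, "once upon", max_new_words=6, rng=random.Random(1))
print(story)
```

The important part is the loop in `generate`: each predicted word is appended to the text and becomes part of the context for the next prediction, which is exactly the autoregressive pattern.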

Common Applications of Autoregressive Models

Autoregressive models are incredibly versatile and are used in a variety of applications across industries. Here are some common use cases:

Text Generation:

One of the most visible uses of autoregressive models is in text generation. Whether it’s generating product descriptions for e-commerce sites, writing news articles, or even composing poetry, these models can produce human-like text based on the initial input.

Chatbots and Virtual Assistants:

Many of the modern chatbots and virtual assistants we interact with daily rely on autoregressive models to generate responses that feel natural and contextual. When you ask such a chatbot a question, it generates a response one word at a time, based on the conversation so far.

Language Translation:

Autoregressive models are also used in language translation tools like Google Translate. The translated sentence is generated one word at a time, with each word chosen in light of both the source text and the words already produced, which helps keep the meaning intact.

Speech Recognition and Summarization:

In speech recognition software, autoregressive models help transcribe spoken words into text. Similarly, they can summarize long articles or documents by generating shorter, concise versions.

Why are Autoregressive Models Important?

Autoregressive models have become a backbone of modern AI for several reasons:

Versatility:

They are used in a wide range of applications, from customer service chatbots to automated content creation. The ability to generate coherent and contextually accurate text makes them highly valuable in multiple industries.

Adaptability:

These models can be fine-tuned for specific tasks or trained on large, general datasets. This adaptability makes them useful in both narrow, specialized applications and broad, general ones.

Continuous Improvement:

Thanks to innovations like transformers, autoregressive models are getting better at generating text that is more relevant, accurate, and human-like.

Frequently Asked Questions (FAQs)

Here are some common questions people ask about autoregressive language models: