Effective & Fast Data Streaming for Machine Learning Projects

When you're working on a machine learning project, getting the right data to your models at the right speed can make or break your results. A slow or clunky data pipeline can cause bottlenecks, waste time, and mess up predictions. On the flip side, a well-structured data streaming setup keeps things moving smoothly, ensuring your models are always learning from fresh, relevant data.

So, how do you make data streaming effective and fast without overcomplicating things? Let's break it down.

Why Data Streaming Matters for Machine Learning

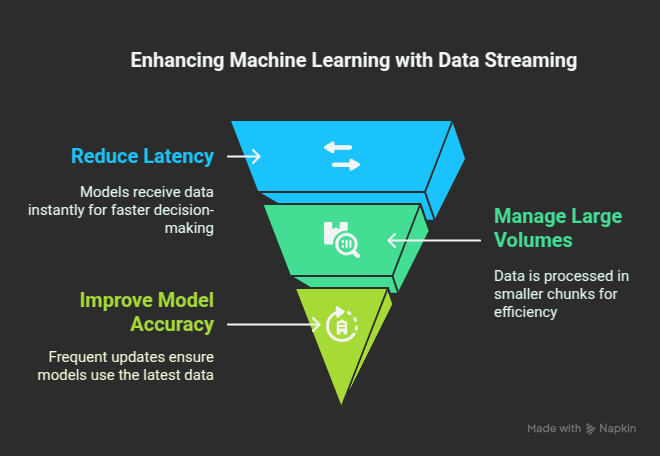

Many machine learning projects rely on data that's constantly changing: think stock prices, user activity, IoT sensor data, or online purchases. Traditional batch processing works fine for some use cases, but when you need continuous updates, data streaming is the way to go. It helps with:

Reducing latency – Your models get data as soon as it’s available, improving decision-making speed.

Handling large volumes – Instead of processing everything at once, streaming allows you to work with data in smaller, manageable chunks.

Keeping models accurate – Frequent updates let models learn from the latest data instead of outdated information, which matters most when patterns shift over time.

Key Elements of a Good Data Streaming Setup

Before you dive in, it’s important to set up the right foundation. Here’s what you should focus on:


1. Picking the Right Streaming Framework

There are a bunch of tools out there, but the best choice depends on your needs. Here are some popular options:

Apache Kafka – Great for high-throughput messaging and widely used in big data applications.

Apache Flink – A strong choice if you need stateful stream processing with complex computations.

Apache Spark Streaming – A good option if you’re already using Spark for batch processing.

AWS Kinesis – A cloud-native option that integrates well with other AWS services.

Each of these tools has its pros and cons, but the key is to pick something that fits your project size, infrastructure, and technical expertise.

2. Efficient Data Ingestion

Your data is only as good as the way it gets into your system. Some key practices include:

Choosing the right data format – JSON is easy to read and debug, Avro adds a compact binary encoding backed by a schema, and Parquet's columnar compression makes it a great choice for storing data once it lands.

Optimizing ingestion speed – Tools like Kafka Connect or Flume can help move data quickly without clogging up resources.

Handling duplicates – Data streams can sometimes send the same event multiple times, so use deduplication strategies like unique event IDs.
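Here is a minimal sketch of deduplication by unique event ID. It assumes every event carries an `event_id` field (a hypothetical name), and it remembers only the most recent IDs so memory stays bounded on an endless stream. The `Deduplicator` class is illustrative, not part of any streaming library.

```python
from collections import OrderedDict


class Deduplicator:
    """Drops events whose ID was already seen among the last `max_ids` IDs."""

    def __init__(self, max_ids=100_000):
        self.max_ids = max_ids
        self._seen = OrderedDict()  # insertion-ordered "set" of recent event IDs

    def is_new(self, event_id):
        if event_id in self._seen:
            self._seen.move_to_end(event_id)  # keep frequently repeated IDs tracked
            return False
        self._seen[event_id] = None
        if len(self._seen) > self.max_ids:
            self._seen.popitem(last=False)  # evict the oldest ID to cap memory
        return True


def deduplicate(events, dedup):
    # Assumes each event is a dict with a hypothetical "event_id" key.
    return [e for e in events if dedup.is_new(e["event_id"])]


events = [
    {"event_id": "a1", "amount": 10},
    {"event_id": "b2", "amount": 5},
    {"event_id": "a1", "amount": 10},  # redelivered duplicate
]
unique = deduplicate(events, Deduplicator(max_ids=1000))
print([e["event_id"] for e in unique])  # ['a1', 'b2']
```

The bounded window is a trade-off: a duplicate that arrives after its ID has been evicted will slip through, so size `max_ids` to cover the longest redelivery delay you expect.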

3. Processing Data on the Fly

To get the most out of data streaming, you need a good processing setup. This means:

Windowing – Instead of analyzing every single event, group them into time windows (e.g., every 10 seconds) for more manageable processing.

Filtering & transformation – Cleaning data in transit keeps your system lean and your models accurate.

Handling missing data – Implement fallback mechanisms like interpolation or default values to prevent gaps in analysis.
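The three practices above can be combined in a few lines. This is a minimal sketch over an in-memory list, assuming events are dicts with hypothetical `sensor`, `ts` (seconds) and `value` fields: it filters out events with no source, substitutes a default for missing values, and averages readings per sensor in 10-second tumbling windows.

```python
from collections import defaultdict

WINDOW_SECONDS = 10
DEFAULT_VALUE = 0.0  # hypothetical fallback for readings with a missing value


def window_start(ts, size=WINDOW_SECONDS):
    # Align a timestamp (in seconds) to the start of its tumbling window.
    return ts - (ts % size)


def process(events):
    sums = defaultdict(float)
    counts = defaultdict(int)
    for event in events:
        if event.get("sensor") is None:
            continue  # filter: drop events we cannot attribute to a source
        value = event.get("value")
        if value is None:
            value = DEFAULT_VALUE  # fallback for missing readings
        key = (event["sensor"], window_start(event["ts"]))
        sums[key] += value
        counts[key] += 1
    # Average value per (sensor, window start).
    return {key: sums[key] / counts[key] for key in sums}


events = [
    {"sensor": "s1", "ts": 3, "value": 2.0},
    {"sensor": "s1", "ts": 7, "value": 4.0},
    {"sensor": "s1", "ts": 12, "value": None},
    {"sensor": None, "ts": 5, "value": 99.0},
    {"sensor": "s1", "ts": 15, "value": 8.0},
]
print(process(events))  # {('s1', 0): 3.0, ('s1', 10): 4.0}
```

A real stream never "ends," so production systems emit each window once it closes and must decide what to do with late events. Flink, Spark, and Kafka Streams provide windowing with late-data handling built in, which is usually preferable to hand-rolling it.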

4. Managing Storage and Access

Not all streamed data needs to be kept forever. Decide on:

Short-term storage – Keep recent, frequently accessed data in an in-memory store like Redis for quick access.

Long-term storage – Store important historical data in a data lake (for example, on Amazon S3) or a data warehouse like Google BigQuery.

Partitioning – Organizing data by time or category speeds up access and processing.
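One common way to partition by time and category is a Hive-style `key=value` directory layout, which query engines such as Spark can use to skip partitions a query doesn't need. The sketch below builds such a path from an event's type and Unix timestamp; the `events/` prefix and the `event_type`, `date`, and `hour` keys are hypothetical choices.

```python
from datetime import datetime, timezone


def partition_path(event_type, ts):
    """Build a Hive-style partition path from an event type and a Unix timestamp (UTC)."""
    dt = datetime.fromtimestamp(ts, tz=timezone.utc)
    # Hypothetical layout: events/event_type=<type>/date=<YYYY-MM-DD>/hour=<HH>
    return f"events/event_type={event_type}/date={dt:%Y-%m-%d}/hour={dt:%H}"


print(partition_path("purchase", 1_700_000_000))
# events/event_type=purchase/date=2023-11-14/hour=22
```

Using UTC for partition keys avoids ambiguous or duplicated hours around daylight-saving changes.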

5. Monitoring and Scaling

Even the best setups can hit snags, so keep an eye on things with:

Metrics tracking – Use tools like Prometheus to collect metrics on data flow, latency, and errors, and Grafana to visualize them.

Auto-scaling – Cloud platforms let you scale up or down based on traffic, saving costs and preventing slowdowns.

Alerting systems – Set up notifications for anomalies like data spikes or unexpected delays.
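To make the alerting idea concrete, here is a minimal sketch of a latency check: it tracks end-to-end delay (processing time minus event time) over the last N events and flags when the rolling average crosses a threshold. The `LatencyAlert` class, the 2-second threshold, and the window size are hypothetical; in practice you would export latency as a metric and let Prometheus alerting rules or Grafana alerts fire notifications.

```python
from collections import deque


class LatencyAlert:
    """Flags when average end-to-end latency over the last `window` events exceeds a threshold."""

    def __init__(self, threshold_s=2.0, window=100):
        self.threshold_s = threshold_s
        self.latencies = deque(maxlen=window)  # oldest latencies drop off automatically

    def observe(self, event_ts, processed_ts):
        # Latency = time the event was processed minus time it occurred (seconds).
        self.latencies.append(processed_ts - event_ts)
        avg = sum(self.latencies) / len(self.latencies)
        return avg > self.threshold_s  # True means "raise an alert"


monitor = LatencyAlert(threshold_s=2.0, window=3)
print(monitor.observe(0.0, 1.0))  # False (avg 1.0 s)
print(monitor.observe(1.0, 2.5))  # False (avg 1.25 s)
print(monitor.observe(2.0, 7.0))  # True  (avg 2.5 s)
```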

Steps for Implementation

1. Define Your Use Case and Requirements

- Identify data sources and the type of data you’ll be streaming.

- Determine the required update frequency and volume.

- Clarify whether you need instant processing, event-driven actions, or periodic updates.
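A quick back-of-the-envelope calculation helps turn "frequency and volume" into concrete infrastructure requirements. This sketch uses hypothetical inputs (5,000 events per second, about 1 KB per event, 7 days of retention) and ignores replication and compression, both of which change the real numbers.

```python
def estimate_load(events_per_sec, avg_event_bytes, retention_days):
    """Rough throughput and storage estimate (decimal units, before replication/compression)."""
    bytes_per_sec = events_per_sec * avg_event_bytes
    throughput_mb_s = bytes_per_sec / 1_000_000
    daily_gb = bytes_per_sec * 86_400 / 1_000_000_000  # 86,400 seconds per day
    return throughput_mb_s, daily_gb, daily_gb * retention_days


# Hypothetical workload: 5,000 events/s, ~1 KB each, kept for 7 days.
throughput, daily, retained = estimate_load(5_000, 1_000, 7)
print(throughput, daily, retained)  # 5.0 432.0 3024.0
```

So even a modest 5 MB/s stream adds up to roughly 3 TB over a week, which is worth knowing before choosing between managed and self-hosted infrastructure.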

2. Choose Your Streaming Framework and Infrastructure

- Select a tool like Kafka, Flink, Spark, or AWS Kinesis based on your needs.

- Decide between cloud-based or on-premises infrastructure.

- Set up the necessary clusters, servers, or managed services.

3. Design Your Data Ingestion Pipeline

- Connect sources like databases, APIs, IoT devices, and log systems.

- Implement data connectors (Kafka Connect, Flume, or custom ETL).

- Use compact formats like JSON or Avro for messages in transit, and a columnar format like Parquet for data at rest.
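Why do binary formats save space? JSON repeats every field name in every message, while schema-based formats like Avro encode only the values because producer and consumer share the schema. The sketch below is not Avro; it uses Python's `struct` module with a fixed layout as a rough stand-in for a schema, and the event fields are hypothetical.

```python
import json
import struct

event = {"user_id": 123456, "item_id": 987, "price": 19.99, "ts": 1_700_000_000}

as_json = json.dumps(event).encode("utf-8")

# Fixed little-endian layout agreed on by producer and consumer (a stand-in for a schema):
# unsigned 64-bit user_id, unsigned 32-bit item_id, 64-bit float price, signed 64-bit ts.
LAYOUT = struct.Struct("<QIdq")
as_binary = LAYOUT.pack(event["user_id"], event["item_id"], event["price"], event["ts"])

print(len(as_json), len(as_binary))

# The consumer decodes values positionally using the same layout.
user_id, item_id, price, ts = LAYOUT.unpack(as_binary)
```

The binary form is always 28 bytes (8 + 4 + 8 + 8) here, noticeably smaller than the JSON text. The catch is that both sides must agree on the layout, which is exactly the problem schema registries and Avro's schema evolution rules are designed to manage.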

4. Process Data in Motion

- Apply transformations such as filtering, aggregations, and deduplication.

- Define time windows for event grouping if needed.

- Handle missing data with fallback mechanisms.

5. Store and Serve the Processed Data

- Use in-memory stores (Redis, Memcached) for quick lookups.

- Store historical data in durable storage such as Amazon S3, a warehouse like BigQuery, or a table format like Delta Lake.

- Structure data in partitions for faster querying and retrieval.
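In-memory stores typically expire short-lived data automatically; Redis, for example, supports `SET key value EX seconds`. As a dependency-free illustration of that behavior, here is a minimal in-process cache with per-entry time-to-live. The `TTLCache` class is hypothetical and single-process only; it is a stand-in for the idea, not a replacement for Redis or Memcached.

```python
import time


class TTLCache:
    """Tiny in-process key-value store whose entries expire after ttl_s seconds."""

    def __init__(self, ttl_s=60.0, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock  # injectable clock makes expiry easy to test
        self._data = {}

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl_s)

    def get(self, key, default=None):
        item = self._data.get(key)
        if item is None:
            return default
        value, expires_at = item
        if self.clock() >= expires_at:
            del self._data[key]  # lazily evict expired entries on read
            return default
        return value


cache = TTLCache(ttl_s=30.0)
cache.set("user:42:recent_views", ["item-7", "item-9"])  # hypothetical key
print(cache.get("user:42:recent_views"))
```

Lazy eviction keeps the code simple, but keys that are never read again stay in memory; a real store also evicts in the background.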

6. Monitor, Optimize, and Scale

- Deploy monitoring tools like Grafana, Prometheus, or AWS CloudWatch.

- Set up auto-scaling to manage fluctuating data loads.

- Fine-tune configurations for better performance.

- Implement alerts for unusual data spikes or delays.
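Auto-scaling a consumer fleet often comes down to simple arithmetic. In a Kafka consumer group, each partition is read by at most one consumer in the group, so instances beyond the partition count sit idle. This sketch assumes you have measured how many events per second one consumer can handle; the function name and numbers are hypothetical.

```python
import math


def consumers_needed(events_per_sec, per_consumer_capacity, partitions):
    """Estimate consumer instances for the current load, capped at the partition count."""
    needed = math.ceil(events_per_sec / per_consumer_capacity)
    # At least one consumer; more than `partitions` would leave some idle.
    return max(1, min(needed, partitions))


print(consumers_needed(12_000, 2_500, 12))  # 5
print(consumers_needed(50_000, 2_500, 12))  # 12 (capped: add partitions to scale further)
```

The cap is the key design point: if traffic regularly pushes you against it, the fix is more partitions, not more consumers.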

Use Cases: Where This All Comes Together


1. Fraud Detection in Finance

Banks and payment platforms use streaming data to catch fraud in seconds. If a system detects an unusual transaction pattern—like multiple large withdrawals in a short time—it can trigger alerts instantly instead of waiting for a batch process to catch it later.
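The "multiple large withdrawals in a short time" rule maps naturally onto a per-account sliding window. This is a minimal sketch, not a production fraud model: the thresholds are hypothetical, state lives in a plain dict, and it assumes each account's events arrive in timestamp order.

```python
from collections import defaultdict, deque

LARGE_AMOUNT = 1_000     # hypothetical threshold for a "large" withdrawal
WINDOW_SECONDS = 300     # hypothetical look-back window (5 minutes)
MAX_LARGE_IN_WINDOW = 3  # alert on the 3rd large withdrawal inside the window

recent = defaultdict(deque)  # account_id -> timestamps of recent large withdrawals


def check_withdrawal(account_id, amount, ts):
    """Return True if this withdrawal should trigger a fraud alert."""
    if amount < LARGE_AMOUNT:
        return False
    window = recent[account_id]
    window.append(ts)
    # Drop timestamps that have slid out of the look-back window.
    while window and ts - window[0] > WINDOW_SECONDS:
        window.popleft()
    return len(window) >= MAX_LARGE_IN_WINDOW


print(check_withdrawal("acct-1", 1_500, 0))    # False
print(check_withdrawal("acct-1", 2_000, 60))   # False
print(check_withdrawal("acct-1", 50, 90))      # False (small amount ignored)
print(check_withdrawal("acct-1", 1_200, 120))  # True (3 large withdrawals in 2 minutes)
```

In a distributed setup this per-key state would live in the stream processor (for example, Flink's keyed state) so it survives restarts and scales across machines.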

2. Personalized Recommendations

Streaming data can power recommendation engines like those on Netflix and Amazon. As users interact with content, the system adjusts recommendations in near real time, making the experience more engaging and relevant.

3. Predictive Maintenance in Manufacturing

Factories use sensor data to detect signs of wear and tear on machinery. Instead of waiting for a scheduled check-up, companies can fix problems before they cause downtime, saving money and improving efficiency.
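A simple starting point for spotting wear in sensor streams is a rolling z-score: flag a reading that sits far outside the recent mean. This sketch assumes a single numeric sensor channel (for example, vibration amplitude); the window size, 3-sigma threshold, and class name are hypothetical, and real systems often use richer models.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings more than z_threshold standard deviations from the rolling mean."""

    def __init__(self, window=50, z_threshold=3.0, min_history=10):
        self.readings = deque(maxlen=window)  # recent readings only
        self.z_threshold = z_threshold
        self.min_history = min_history  # don't judge until we have a baseline

    def update(self, value):
        is_anomaly = False
        if len(self.readings) >= self.min_history:
            history = list(self.readings)
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        self.readings.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=20, z_threshold=3.0, min_history=5)
normal = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0]
print([detector.update(v) for v in normal])  # all False
print(detector.update(5.0))                  # True: far outside the recent range
```

One design choice to note: anomalous readings are still added to the window, so a sustained shift gradually becomes the new baseline. That is useful for slow drift but can hide a persistent fault, so some teams exclude flagged readings instead.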

Conclusion

Effective and fast data streaming isn’t just about picking the right tool—it’s about making smart choices at every step, from ingestion to processing to storage. The key is to keep things simple, stay flexible, and monitor performance to ensure your machine learning models are always working with fresh, relevant data.

Whether you're building fraud detection systems, recommendation engines, or predictive maintenance solutions, a well-thought-out data streaming setup can give your project a serious edge. Keep it lean, optimize where you can, and let the data flow!