A step-by-step tutorial to deploy machine learning models

Introduction

Deploying machine learning models is a critical phase that transforms experimental models into valuable production systems. While developing models is often the focus of data science education, the deployment process is what brings these models to life in real-world applications. This tutorial walks through the complete deployment process, from preparing your model to monitoring it in production.

Understanding ML Model Deployment

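Machine learning deployment is the process of making your trained models available to end users or other systems. As the steps in this tutorial show, this generally involves saving the trained model, packaging its preprocessing steps and dependencies, exposing it through an API, and running and monitoring it in production.

The deployment method you choose depends on factors like the use case, scalability requirements, and budget.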


Step 1: Train and Save the Model


1.1 Prepare the Dataset

Collect relevant data from sources like CSV files, databases, or APIs.

Perform data cleaning: handle missing values, remove duplicates, and normalize values.

Split data into training and test sets (e.g., 80/20 split) to evaluate performance effectively.
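The cleaning and splitting steps above can be sketched as follows. This is a minimal example, assuming pandas and scikit-learn are installed; the toy DataFrame and its column names (`age`, `income`, `bought`) are made up for illustration. Scaling is left out here on purpose, since the pipeline in Step 2 fits the scaler on the training data only.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data standing in for a CSV, database, or API source.
raw = pd.DataFrame({
    "age":    [25, 32, None, 47, 32, 51, 38, 29, 44, 36],
    "income": [40, 55, 61, 80, 55, 95, 70, 48, 77, 66],
    "bought": [0, 0, 1, 1, 0, 1, 1, 0, 1, 0],
})

# Clean: drop exact duplicate rows, then fill missing ages with the median.
clean = raw.drop_duplicates().copy()
clean["age"] = clean["age"].fillna(clean["age"].median())

X = clean[["age", "income"]]
y = clean["bought"]

# Hold out 20% for testing; a fixed random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
print(len(X_train), len(X_test))
```

Median imputation is only one option; the right strategy for missing values depends on your data.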

1.2 Train the Model

Select an appropriate algorithm such as:

Linear Regression for continuous data prediction

Logistic Regression for binary classification

Decision Trees or Random Forest for complex relationships

Neural Networks for deep learning applications

Train your model using a training dataset.

Optimize hyperparameters using techniques like Grid Search or Random Search.
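As a rough sketch of training with Grid Search, the example below tunes a Random Forest with scikit-learn's `GridSearchCV`. The synthetic dataset and the parameter grid are assumptions chosen to keep the example small, not recommended values.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic data stands in for your own training set.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Candidate hyperparameter values; the grid is illustrative only.
param_grid = {"n_estimators": [25, 50], "max_depth": [3, None]}

search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=3,                # 3-fold cross-validation for each combination
    scoring="accuracy",
)
search.fit(X_train, y_train)

# By default the best combination is refit on the whole training set.
model = search.best_estimator_
print(search.best_params_)
```

For larger grids, `RandomizedSearchCV` from the same module samples combinations instead of trying all of them.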

1.3 Evaluate Model Performance

Measure the model's accuracy, precision, recall, F1-score, and area under the ROC curve (AUC-ROC).

Perform cross-validation to check model robustness.

Adjust hyperparameters if needed to improve performance.
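One way to compute the metrics listed above for a binary classifier is shown below. It is a sketch on synthetic data with a Logistic Regression model; swap in your own model and test set.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (accuracy_score, f1_score, precision_score,
                             recall_score, roc_auc_score)
from sklearn.model_selection import cross_val_score, train_test_split

# Synthetic binary classification data, used only for illustration.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_pred = model.predict(X_test)                # hard class labels
y_score = model.predict_proba(X_test)[:, 1]   # probability of class 1

metrics = {
    "accuracy": accuracy_score(y_test, y_pred),
    "precision": precision_score(y_test, y_pred),
    "recall": recall_score(y_test, y_pred),
    "f1": f1_score(y_test, y_pred),
    "roc_auc": roc_auc_score(y_test, y_score),  # needs scores, not labels
}

# 5-fold cross-validation on the training data as a robustness check.
cv_scores = cross_val_score(
    LogisticRegression(max_iter=1000), X_train, y_train, cv=5
)
print(metrics)
print(cv_scores.mean(), cv_scores.std())
```

A large spread across the cross-validation folds suggests the model is sensitive to which samples it sees.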

1.4 Save the Model

Serialize your trained model using libraries like joblib or pickle.

# For scikit-learn models
import joblib

# Save the model
joblib.dump(model, 'model.joblib')

# For PyTorch models
import torch

# Save the model's learned parameters (the state_dict)
torch.save(model.state_dict(), 'model.pth')

# For TensorFlow/Keras models
model.save('model.h5')

Step 2: Prepare the Preprocessing Pipeline

Ensure all preprocessing steps are captured and can be reproduced:

import joblib
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import RandomForestClassifier

# Create a pipeline that standardizes the data then applies the model
full_pipeline = Pipeline([
    ('preprocessor', StandardScaler()),
    ('model', RandomForestClassifier())
])

# Train the pipeline
full_pipeline.fit(X_train, y_train)

# Save the entire pipeline
joblib.dump(full_pipeline, 'model_pipeline.joblib')

Create Requirements File

Document all dependencies required to run your model:

# requirements.txt
numpy==1.21.0
scikit-learn==1.0.2
pandas==1.3.5
flask==2.0.1
gunicorn==20.1.0

Step 3: Containerize the Application Using Docker

Containerization helps ensure consistency across different environments.

3.1 Install Docker

Download and install Docker from Docker's official website.

3.2 Create a Dockerfile

# Base image with Python 3.8
FROM python:3.8

# Work inside /app in the container
WORKDIR /app

# Copy the project: app.py (created in Step 4), the saved model, and requirements.txt
COPY . /app

# Install the pinned dependencies
RUN pip install -r requirements.txt

# Start the API
CMD ["python", "app.py"]

3.3 Build and Run the Docker Container

# Build the Docker image
docker build -t ml-api .

# Run the container, mapping host port 5000 to container port 5000
docker run -p 5000:5000 ml-api

Step 4: Create an API for the Model

To serve your model, create an API using a web framework like Flask or FastAPI.

Using Flask

Install Flask:

pip install flask

Create an API:

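from flask import Flask, request, jsonify
import joblib
import numpy as np

app = Flask(__name__)

# Load the pipeline saved in Step 2 (preprocessing plus model)
model = joblib.load('model_pipeline.joblib')

@app.route('/predict', methods=['POST'])
def predict():
    # Expects a JSON body of the form {"features": [...]}
    data = request.json['features']
    prediction = model.predict(np.array(data).reshape(1, -1))
    return jsonify({'prediction': prediction.tolist()})

if __name__ == '__main__':
    # Bind to 0.0.0.0 so the API is reachable from outside the Docker container.
    # Debug mode is left off because it is unsafe in production.
    app.run(host='0.0.0.0', port=5000)

Step 5: Deploy to a Cloud Service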

5.1 Deploying to AWS EC2

Launch an EC2 instance and SSH into it.

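Install Docker, pull your image from the registry you pushed it to, and run it in the background with port 80 on the instance mapped to port 5000 in the container:

docker run -d -p 80:5000 ml-api

5.2 Deploying to Google Cloud Run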

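1. Install the Google Cloud SDK and authenticate:

gcloud auth login

2. Deploy the application:

gcloud builds submit --tag gcr.io/YOUR_PROJECT_ID/ml-api
gcloud run deploy --image gcr.io/YOUR_PROJECT_ID/ml-api --platform managed

Cloud Run sends requests to the port given in the PORT environment variable (8080 by default), so the container must listen on that port rather than a hard-coded 5000.

5.3 Deploying to Azure App Service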

- Install Azure CLI and login:

az login2.Create an Azure App Service and deploy:

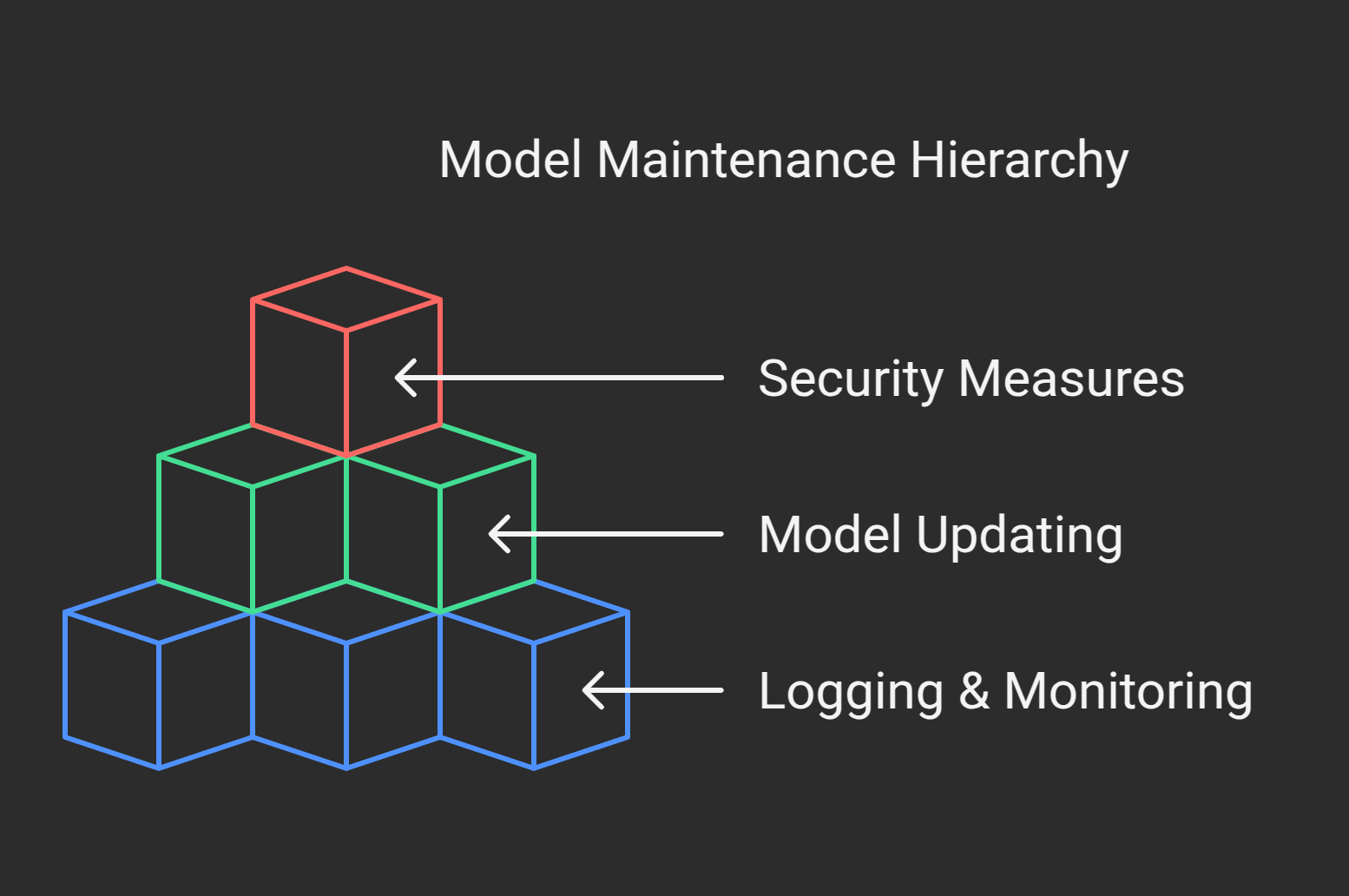

az webapp up --name ml-api --resource-group myResourceGroup --runtime PYTHON:3.8Step 6 : Monitor and Maintain the Model

6.1 Logging and Monitoring

Use tools like Prometheus and Grafana for monitoring API requests and model performance.

Implement logging using Python’s logging library to track API usage and errors.
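A minimal sketch of request logging with the standard `logging` module is shown below. The logger name `ml_api` and the helper `predict_with_logging` are hypothetical; the lambda stands in for `model.predict` so the example runs on its own.

```python
import logging
import time

logger = logging.getLogger("ml_api")  # logger name is an arbitrary choice
logger.setLevel(logging.INFO)
if not logger.handlers:
    handler = logging.StreamHandler()
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)


def predict_with_logging(predict_fn, features):
    """Call predict_fn(features); log the latency, or the error if it fails."""
    start = time.perf_counter()
    try:
        result = predict_fn(features)
    except Exception:
        # logger.exception records the message together with the traceback.
        logger.exception("prediction failed n_features=%d", len(features))
        raise
    latency_ms = (time.perf_counter() - start) * 1000
    logger.info("prediction ok n_features=%d latency_ms=%.2f",
                len(features), latency_ms)
    return result


# Stand-in for model.predict, used only to make the sketch runnable.
print(predict_with_logging(lambda feats: sum(feats) > 1.0, [0.4, 0.9]))
```

Logging the number of features rather than the raw values keeps potentially sensitive inputs out of the logs.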

6.2 Model Updating and Retraining

Automate model retraining using cron jobs or scheduled tasks.

Store new data and periodically retrain the model with updated datasets.

Use CI/CD pipelines to automate redeployment of new models.
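The retraining loop described above could look something like the sketch below: train a candidate on fresh data and overwrite the saved model only if the candidate scores at least as well on a held-out split. The function name `retrain_and_maybe_promote`, the Logistic Regression model, and the promotion rule are all assumptions for illustration.

```python
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def retrain_and_maybe_promote(X, y, model_path):
    """Train a candidate; replace the saved model only if the candidate
    scores at least as well on a validation split. Returns True if promoted."""
    X_train, X_val, y_train, y_val = train_test_split(
        X, y, test_size=0.2, random_state=0
    )
    candidate = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    candidate_score = candidate.score(X_val, y_val)

    path = Path(model_path)
    if path.exists():
        current_score = joblib.load(path).score(X_val, y_val)
        if candidate_score < current_score:
            return False  # keep serving the existing model

    joblib.dump(candidate, path)
    return True


# Demo in a temporary directory so no real model file is touched.
with tempfile.TemporaryDirectory() as tmp:
    X, y = make_classification(n_samples=200, n_features=6, random_state=1)
    first = retrain_and_maybe_promote(X, y, Path(tmp) / "model.joblib")
    second = retrain_and_maybe_promote(X, y, Path(tmp) / "model.joblib")
    print(first, second)
```

A script like this can be run from a cron job or a CI/CD pipeline. In practice you would validate on data that neither model has seen during training.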

6.3 Security Measures

Secure API endpoints using OAuth, JWT tokens, or API keys.

Implement rate limiting to prevent misuse.

Use HTTPS for encrypted communication.
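To illustrate two of the measures above, here is a standard-library sketch of an API key check and a per-client rate limiter. The names `EXPECTED_API_KEY`, `is_authorized`, and `SlidingWindowRateLimiter` are hypothetical, and the hard-coded key is a placeholder for a value that would come from an environment variable or secret store.

```python
import hmac
import time
from collections import defaultdict, deque

# Assumption: a real deployment reads the key from an environment variable
# or secret store, never from source code.
EXPECTED_API_KEY = "change-me"


def is_authorized(presented_key):
    """Constant-time comparison avoids leaking key contents through timing."""
    return hmac.compare_digest(presented_key.encode(), EXPECTED_API_KEY.encode())


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=2, window_seconds=60)
print([limiter.allow("client-a", now=t) for t in (0, 1, 2, 61)])
# → [True, True, False, True]
```

This limiter keeps its state in one process. With several workers or instances, the counters would need to live in a shared store.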

Step 7: Scaling the Deployment

7.1 Load Balancing and Scaling

Use a load balancer (e.g., AWS Elastic Load Balancer, Nginx) to distribute traffic.

Scale using Kubernetes for container orchestration.

Deploy multiple instances of your API for redundancy and high availability.
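Load balancers such as Nginx or AWS Elastic Load Balancer handle traffic distribution for you. The sketch below only illustrates the idea behind round-robin balancing across several API instances while skipping unhealthy ones; the class name and the replica addresses are made up.

```python
class RoundRobinBalancer:
    """Cycle through backends in order, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call, starting where we left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


# Hypothetical addresses of three API replicas.
lb = RoundRobinBalancer(["10.0.0.1:5000", "10.0.0.2:5000", "10.0.0.3:5000"])
lb.mark_down("10.0.0.2:5000")
print([lb.pick() for _ in range(4)])
# → ['10.0.0.1:5000', '10.0.0.3:5000', '10.0.0.1:5000', '10.0.0.3:5000']
```

Because the remaining replicas keep serving while one is down, running multiple instances gives redundancy as well as capacity.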

7.2 Edge Deployment (On-Device Inference)

Convert the model to ONNX or TensorFlow Lite for deployment on edge devices.

Optimize model size and performance for mobile and IoT devices.
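One common way to shrink a model for edge devices is post-training quantization. The sketch below is not the TensorFlow Lite or ONNX API; it is a NumPy illustration of the underlying idea, storing float32 weights as int8 with a single scale factor. The function names are hypothetical.

```python
import numpy as np


def quantize_int8(weights):
    """Map float32 weights to int8 using one symmetric scale factor."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float32 weights from the int8 representation."""
    return q.astype(np.float32) * scale


# Random weights stand in for one layer of a trained model.
rng = np.random.default_rng(0)
weights = rng.normal(size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(weights)

# int8 uses 1 byte per weight instead of 4.
print(weights.nbytes // q.nbytes)
# → 4

# The rounding error per weight is bounded by about half the scale.
max_error = float(np.max(np.abs(weights - dequantize(q, scale))))
print(max_error, scale / 2)
```

The 4x size reduction comes at the cost of a small approximation error, so accuracy should be re-checked after quantizing.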

Conclusion

By following these steps, you can successfully deploy a machine learning model for real-world applications. Choosing the right deployment method depends on the use case, scalability, and budget. With proper monitoring and security, your model can serve predictions reliably and efficiently.