How to Load Test Your API

In today's digital landscape, APIs (Application Programming Interfaces) are the backbone of modern software applications. They facilitate communication between different systems and services, making them crucial for businesses across various industries. However, as your API usage grows, it's essential to ensure that it can handle increased load without compromising performance. This is where load testing comes into play.

What is Load Testing?

Load testing is the process of simulating real-world load on your API to evaluate its performance under various conditions. It helps you identify:

Maximum operating capacity

Bottlenecks in your system

Breaking points

Response times under different load levels

By conducting thorough load tests, you can ensure your API remains stable and responsive, even during peak usage periods.

The Theory Behind Load Testing

Several concepts from performance engineering and queuing theory help you design load tests and interpret their results:

Types of Performance Testing

Load testing is just one type of performance testing. Others include:

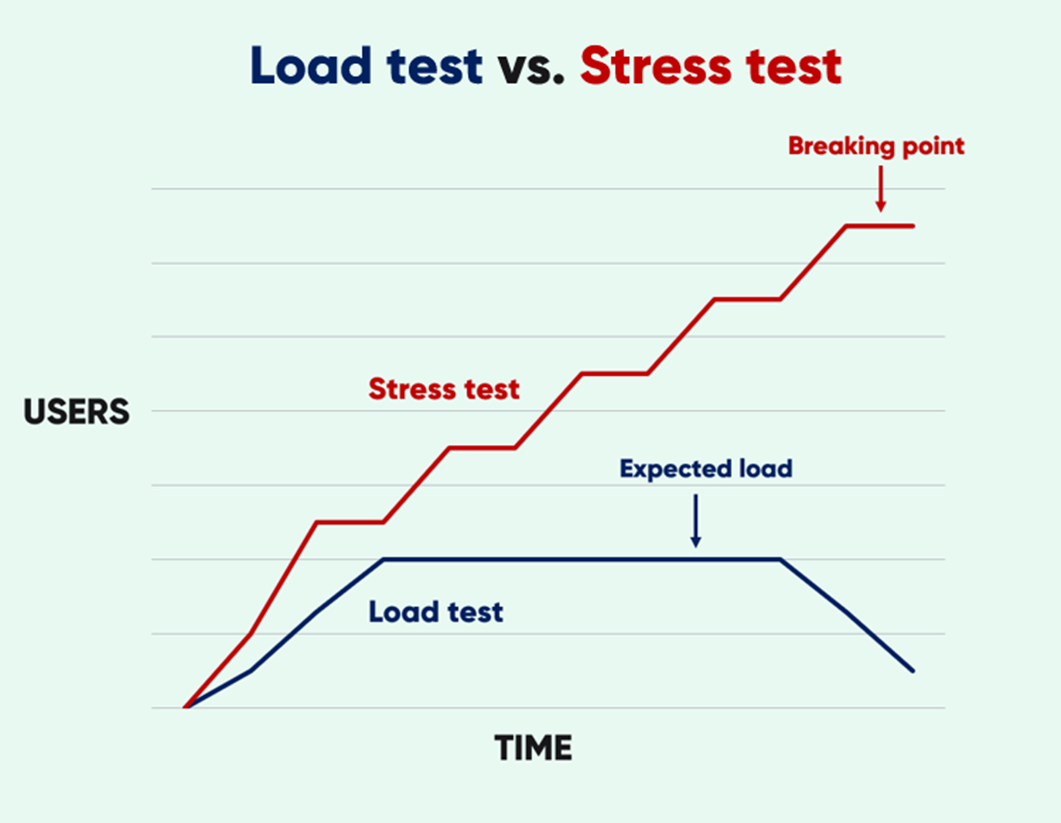

Stress Testing: Pushing the system beyond normal capacity to identify breaking points.

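Soak Testing: Running the system under a sustained, realistic load for an extended period (often hours) to find gradual degradation such as memory leaks or resource exhaustion.

Spike Testing: Suddenly increasing the load to extreme levels to see how the system responds and whether it recovers once the spike subsides.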

Understanding these different types helps in designing comprehensive test strategies.

Queuing Theory

Queuing theory is the mathematical study of waiting lines, or queues. In the context of APIs, it explains how requests are processed and how waiting times change with load. Key concepts include:

Arrival Rate (λ): The rate at which new requests come in.

Service Rate (μ): The rate at which a server can process requests.

Utilization (ρ): The proportion of time the server is busy. For a single server, ρ = λ/μ, and it must stay below 1; otherwise requests arrive faster than they can be processed and the queue grows without bound.

Queue Length: The number of requests waiting to be processed.

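Understanding queuing theory helps you interpret load test results and optimize system performance. In particular, waiting time and queue length grow sharply as utilization approaches 100%, which is why response times often climb steeply well before a server is fully saturated.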
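To see why latency rises so sharply near saturation, the sketch below computes steady-state metrics for the simplest textbook model, an M/M/1 queue (Poisson arrivals, exponentially distributed service times, one server). Real APIs rarely match these assumptions, so treat the output as intuition rather than prediction; `mm1Metrics` is a hypothetical helper name.

```javascript
// Steady-state metrics for an M/M/1 queue (textbook model, used here as an assumption).
// arrivalRate (λ) and serviceRate (μ) are in requests per second.
function mm1Metrics(arrivalRate, serviceRate) {
  const utilization = arrivalRate / serviceRate; // ρ = λ / μ
  if (utilization >= 1) {
    // With ρ >= 1 the queue grows without bound; there is no steady state.
    return { utilization, stable: false };
  }
  return {
    utilization,
    stable: true,
    avgInSystem: utilization / (1 - utilization),                    // L = ρ / (1 - ρ)
    avgQueueLength: (utilization * utilization) / (1 - utilization), // Lq = ρ² / (1 - ρ)
    avgTimeInSystemSec: 1 / (serviceRate - arrivalRate),             // W = 1 / (μ - λ)
  };
}

// A server that can process 100 req/s, at increasing arrival rates:
for (const lambda of [50, 80, 90, 99]) {
  const m = mm1Metrics(lambda, 100);
  console.log(`λ=${lambda} ρ=${m.utilization.toFixed(2)} W=${(m.avgTimeInSystemSec * 1000).toFixed(0)} ms`);
}
```

With a service rate of 100 requests per second (10 ms per request on its own), the average time in the system is 20 ms at 50% utilization, 100 ms at 90%, and 1000 ms at 99%.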

Little's Law

Little's Law is a fundamental principle in queuing theory. It states that:

L = λW

Where:

L is the average number of items in the queuing system

λ is the average arrival rate of items

W is the average time an item spends in the system

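Applied to an API in steady state, L is the number of requests in flight (concurrency), λ is the throughput in requests per second, and W is the average response time. Knowing any two lets you calculate the third, which is useful both for sizing a test and for sanity-checking its results.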
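As a quick worked example, the sketch below rearranges Little's Law to estimate concurrency from throughput and average response time. The figures are illustrative, not measurements, and the helper names are hypothetical. The second helper uses a common extension for closed workloads where each virtual user pauses between requests: with think time Z, the number of users needed is N = X × (R + Z), where X is throughput and R is response time.

```javascript
// Little's Law: L = λ × W
// requests in flight = throughput (req/s) × average response time (s)
function inFlightRequests(throughputPerSec, avgResponseTimeSec) {
  return throughputPerSec * avgResponseTimeSec;
}

// Extension for closed workloads with think time Z between requests:
// virtual users N = X × (R + Z), rounded up to whole users
function virtualUsersNeeded(targetThroughputPerSec, avgResponseTimeSec, thinkTimeSec) {
  return Math.ceil(targetThroughputPerSec * (avgResponseTimeSec + thinkTimeSec));
}

// Illustrative numbers: 200 req/s at an average of 250 ms per request.
console.log(inFlightRequests(200, 0.25)); // 50 requests in flight on average

// Reaching 200 req/s when each user waits 1 s between requests:
console.log(virtualUsersNeeded(200, 0.25, 1)); // 250 virtual users
```

Read the other way, if a test shows throughput flattening while the number of in-flight requests keeps rising, response time must be growing, which usually means requests are queuing somewhere.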

Amdahl's Law

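Amdahl's Law predicts the theoretical speedup of a task with a fixed workload when only part of the system is improved. If a fraction p of the execution time benefits from an improvement that makes that part s times faster, the overall speedup is:

S = 1 / ((1 - p) + p / s)

The part that is not improved limits the total gain. This makes the law particularly relevant when choosing scaling strategies for your API: speeding up one component of a request path helps only in proportion to that component's share of the total time.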
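The formula is easy to evaluate directly. In this sketch the fraction and speedup values are made-up inputs for illustration, and `amdahlSpeedup` is a hypothetical helper name.

```javascript
// Amdahl's Law: S = 1 / ((1 - p) + p / s)
// p: fraction of total execution time that benefits from the improvement (0..1)
// s: speedup factor applied to that fraction
function amdahlSpeedup(p, s) {
  if (p < 0 || p > 1 || s <= 0) throw new RangeError("expected 0 <= p <= 1 and s > 0");
  return 1 / ((1 - p) + p / s);
}

// If 80% of a request's time is spent in the database and queries become 4x faster:
console.log(amdahlSpeedup(0.8, 4).toFixed(2)); // 2.50

// Even an infinitely fast database caps the overall speedup at 1 / (1 - p):
console.log(amdahlSpeedup(0.8, Infinity).toFixed(2)); // 5.00
```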

Why is Load Testing Important?

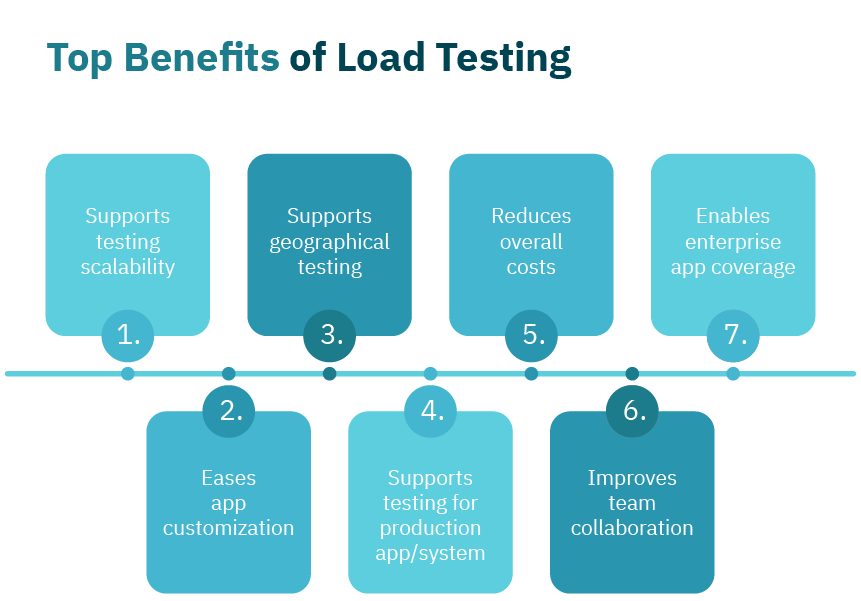

Identify Performance Issues: Load testing helps you discover performance problems before they impact your users.

Capacity Planning: It provides insights into your system's capacity, helping you plan for future growth.

Improve User Experience: By ensuring your API can handle high loads, you maintain a smooth user experience.

Cost Optimization: Load testing can help you optimize resource allocation and reduce unnecessary costs.

SLA Compliance: It helps ensure your API meets Service Level Agreements (SLAs) under various load conditions.

How to Load Test Your API

Step 1: Define Your Testing Goals

Before you start, clearly define what you want to achieve with your load tests. Some common goals include:

Determining maximum requests per second

Identifying response time under different load levels

Finding the breaking point of your system
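Goals are most useful when written down as measurable pass/fail criteria. The sketch below shows one way to express them in code; the threshold values, field names, and the `checkGoals` function are hypothetical placeholders to replace with your own targets.

```javascript
// Hypothetical performance goals for a test run.
const goals = {
  minThroughputPerSec: 50, // sustained requests per second
  maxP95Ms: 500,           // 95th-percentile response time
  maxErrorRate: 0.01,      // at most 1% failed requests
};

// Compare a run summary against the goals and list every violated goal.
function checkGoals(summary, goals) {
  const failures = [];
  if (summary.throughputPerSec < goals.minThroughputPerSec) {
    failures.push(`throughput ${summary.throughputPerSec}/s < ${goals.minThroughputPerSec}/s`);
  }
  if (summary.p95Ms > goals.maxP95Ms) {
    failures.push(`p95 ${summary.p95Ms} ms > ${goals.maxP95Ms} ms`);
  }
  if (summary.errorRate > goals.maxErrorRate) {
    failures.push(`error rate ${summary.errorRate} > ${goals.maxErrorRate}`);
  }
  return { passed: failures.length === 0, failures };
}

// Illustrative summary: fast enough overall, but the p95 goal is missed.
const result = checkGoals({ throughputPerSec: 60, p95Ms: 620, errorRate: 0.002 }, goals);
console.log(result.passed, result.failures);
```

Returning the full list of failures, rather than stopping at the first one, makes a failed run easier to diagnose.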

Step 2: Choose a Load Testing Tool

There are many load testing tools available, both open-source and commercial. Some popular options include:

Apache JMeter

Gatling

Locust

Artillery

k6

Choose a tool that fits your needs and expertise level.

Step 3: Design Test Scenarios

Create realistic test scenarios that mimic actual user behavior. Consider:

Common API endpoints

Typical request patterns

Peak usage times

Gradual load increase
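One way to encode typical request patterns is a weighted traffic mix, where each endpoint is chosen in proportion to its share of real traffic (ideally taken from production logs). The weights and the `pickEndpoint` helper below are hypothetical; Artillery offers a `weight` setting on scenarios for the same purpose.

```javascript
// Hypothetical traffic mix: weights are relative shares of real traffic.
const trafficMix = [
  { path: "/api/users", weight: 70 },
  { path: "/api/products", weight: 30 },
];

// Pick an endpoint with probability proportional to its weight.
// `random` is injectable so the choice can be made deterministic in tests.
function pickEndpoint(mix, random = Math.random) {
  const total = mix.reduce((sum, e) => sum + e.weight, 0);
  let r = random() * total; // r is in [0, total)
  for (const entry of mix) {
    if (r < entry.weight) return entry.path;
    r -= entry.weight;
  }
  return mix[mix.length - 1].path; // guard against floating-point edge cases
}

// Rough check: about 70% of 10,000 picks should be /api/users.
const counts = {};
for (let i = 0; i < 10000; i++) {
  const p = pickEndpoint(trafficMix);
  counts[p] = (counts[p] || 0) + 1;
}
console.log(counts);
```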

Step 4: Set Up Your Testing Environment

Ideally, you should have a separate environment for load testing that closely resembles your production setup. This ensures accurate results without affecting live users.

Step 5: Run Your Tests

Start with a baseline test at low load to establish normal performance. Then gradually increase the load and observe how your API responds.
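A baseline followed by gradual increases can be written as a sequence of fixed-rate stages. The sketch below generates such a plan as objects with `duration` and `arrivalRate` fields, the same keys used in the Artillery phases configuration later in this guide; the step sizes and the `stepLoadPlan` helper are assumptions for illustration.

```javascript
// Build a step-load plan: a low-rate baseline, then evenly spaced higher stages.
// Returns objects shaped like Artillery phases ({ duration, arrivalRate, name }).
function stepLoadPlan({ baselineRate, peakRate, steps, stageSeconds }) {
  const phases = [{ duration: stageSeconds, arrivalRate: baselineRate, name: "baseline" }];
  const increment = (peakRate - baselineRate) / steps;
  for (let i = 1; i <= steps; i++) {
    phases.push({
      duration: stageSeconds,
      arrivalRate: Math.round(baselineRate + increment * i),
      name: `step ${i}`,
    });
  }
  return phases;
}

console.log(stepLoadPlan({ baselineRate: 5, peakRate: 50, steps: 3, stageSeconds: 120 }));
// arrival rates: 5 (baseline), 20, 35, 50
```

Holding each stage for a few minutes, rather than ramping continuously, lets metrics settle so you can attribute a change in latency or errors to a specific load level.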

Step 6: Monitor and Collect Data

During the test, monitor key metrics such as:

Response time

Throughput

Error rate

CPU and memory usage

Database performance
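For a Node.js API, CPU, memory, and event-loop delay can be sampled from inside the process with the built-in `process.cpuUsage()`, `process.memoryUsage()`, and `perf_hooks.monitorEventLoopDelay()` APIs. The sketch below is a minimal, hypothetical sampler (`createResourceSampler` is not a library function); in practice an APM or metrics agent usually does this, and the database and host still need to be monitored separately.

```javascript
const { monitorEventLoopDelay } = require("perf_hooks");

// Sketch of an in-process sampler for CPU, memory, and event-loop delay.
// cpuPercent can exceed 100 because it counts CPU time on all of the process's threads.
function createResourceSampler() {
  const loopDelay = monitorEventLoopDelay({ resolution: 20 }); // sample every 20 ms
  loopDelay.enable();
  let lastCpu = process.cpuUsage();       // microseconds of user/system CPU time
  let lastTime = process.hrtime.bigint(); // nanoseconds, monotonic clock

  function sample() {
    const cpu = process.cpuUsage();
    const now = process.hrtime.bigint();
    const elapsedMicros = Number(now - lastTime) / 1000;
    const cpuMicros = cpu.user - lastCpu.user + (cpu.system - lastCpu.system);
    lastCpu = cpu;
    lastTime = now;

    const mem = process.memoryUsage();
    const p99Ns = loopDelay.percentile(99); // nanoseconds
    loopDelay.reset(); // start a fresh window for the next sample

    return {
      cpuPercent: elapsedMicros > 0 ? (cpuMicros / elapsedMicros) * 100 : 0,
      rssMB: mem.rss / 1048576,
      heapUsedMB: mem.heapUsed / 1048576,
      eventLoopDelayP99Ms: Number.isFinite(p99Ns) ? p99Ns / 1e6 : 0,
    };
  }

  return { sample, stop: () => loopDelay.disable() };
}

// Usage inside the API process: log a sample every 5 seconds during a test.
// const sampler = createResourceSampler();
// setInterval(() => console.log(sampler.sample()), 5000).unref();
```

Event-loop delay is worth watching for Node.js in particular: if it rises with load, JavaScript work on the main thread, not the database, is likely the bottleneck.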

Step 7: Analyze Results

After the test, analyze the collected data to identify:

Performance bottlenecks

Errors and their causes

Resource utilization patterns

Areas for optimization
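Most of this analysis starts from raw response times. This sketch shows how the summary statistics that tools report can be derived, using the nearest-rank method for percentiles (tools may interpolate differently, so their numbers can differ slightly). The function names and the `{ latencyMs, ok }` input shape are hypothetical.

```javascript
// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(sortedValues, p) {
  if (sortedValues.length === 0) return NaN;
  const rank = Math.max(1, Math.ceil((p * sortedValues.length) / 100));
  return sortedValues[rank - 1];
}

// Summarize a run from per-request results of the form { latencyMs, ok }.
function summarize(results, durationSec) {
  const latencies = results.map((r) => r.latencyMs).sort((a, b) => a - b);
  const errors = results.filter((r) => !r.ok).length;
  return {
    requests: results.length,
    throughputPerSec: results.length / durationSec,
    errorRate: results.length ? errors / results.length : 0,
    medianMs: percentile(latencies, 50),
    p95Ms: percentile(latencies, 95),
    p99Ms: percentile(latencies, 99),
    maxMs: latencies[latencies.length - 1],
  };
}

// Ten illustrative requests over 2 seconds; the seventh one failed.
const sample = [120, 95, 310, 101, 99, 150, 1020, 105, 130, 98].map((latencyMs, i) => ({
  latencyMs,
  ok: i !== 6,
}));
console.log(summarize(sample, 2));
```

With only ten samples, p95 and p99 both land on the single slowest request, which is why tail percentiles need large sample counts to be meaningful.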

Step 8: Optimize and Retest

Based on your findings, make necessary optimizations to your API and infrastructure. This might include:

Code optimization

Database tuning

Caching improvements

Horizontal or vertical scaling

After implementing changes, run your tests again to verify improvements.
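To verify an improvement, compare the retest with the previous run under the same load profile rather than eyeballing two reports. The `compareRuns` helper and the numbers below are hypothetical.

```javascript
// Relative change (%) for each numeric metric present in both runs.
// Negative values mean the metric went down (good for latency, bad for throughput).
function compareRuns(before, after) {
  const changes = {};
  for (const key of Object.keys(before)) {
    if (typeof after[key] === "number" && before[key] !== 0) {
      changes[key] = ((after[key] - before[key]) / before[key]) * 100;
    }
  }
  return changes;
}

// Illustrative numbers only.
const before = { p95Ms: 500, throughputPerSec: 55 };
const after = { p95Ms: 150, throughputPerSec: 60 };
console.log(compareRuns(before, after)); // p95Ms: about -70 (%), throughputPerSec: about +9.1 (%)
```

Run both tests against the same environment and data set; otherwise the difference may reflect the setup rather than your optimization.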

Practical Example: Load Testing an ExpressJS API

Let's walk through a practical example of setting up and load testing an ExpressJS API.

Setting Up a Simple ExpressJS API

First, let's create a simple ExpressJS API that we'll use for our load testing example:

```javascript
const express = require('express');

const app = express();
const port = 3000;

// Simulating a database query with a delay
function simulateDbQuery(delay) {
  return new Promise(resolve => setTimeout(resolve, delay));
}

app.get('/api/users', async (req, res) => {
  await simulateDbQuery(100); // Simulate a 100ms database query
  res.json({ users: ['Alice', 'Bob', 'Charlie'] });
});

app.get('/api/products', async (req, res) => {
  await simulateDbQuery(200); // Simulate a 200ms database query
  res.json({ products: ['Phone', 'Laptop', 'Tablet'] });
});

app.listen(port, () => {
  console.log(`API server running on port ${port}`);
});
```

This simple API has two endpoints: /api/users and /api/products, each simulating a database query with different response times.
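Note that setTimeout does not block Node.js's event loop, so these simulated queries cost almost no CPU and the server can keep many of them in flight at once. Because Node.js runs your JavaScript on a single main thread, CPU-heavy work behaves very differently: requests wait for each other. If you want the example to show that kind of saturation, you could add an endpoint that does blocking work; `simulateCpuWork` and the `/api/report` route below are hypothetical, not part of the original example or of Express.

```javascript
// Busy-wait for roughly `ms` milliseconds, blocking the event loop.
// Unlike setTimeout, nothing else in the process can run meanwhile,
// so concurrent requests queue up behind each other.
function simulateCpuWork(ms) {
  const end = Date.now() + ms;
  let iterations = 0;
  while (Date.now() < end) {
    iterations++; // stand-in for real CPU work (parsing, hashing, rendering)
  }
  return iterations;
}

// Possible route, added to the Express app above (hypothetical):
// app.get('/api/report', (req, res) => {
//   simulateCpuWork(50); // ~50 ms of blocking CPU work per request
//   res.json({ status: 'done' });
// });
```

With 50 ms of blocking work per request, a single Node.js process tops out at roughly 20 requests per second for that endpoint (1000 ms / 50 ms), no matter how many requests arrive.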

Setting Up Artillery for Load Testing

Now, let's set up Artillery to load test our API. First, install Artillery:

```bash
npm install -g artillery
```

Create a file named load-test.yml with the following content:

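```yaml
config:
  target: "http://localhost:3000"
  phases:
    - duration: 60
      arrivalRate: 5
      rampTo: 50
      name: "Ramping up the load"
  defaults:
    headers:
      Content-Type: "application/json"
scenarios:
  - name: "API test"
    flow:
      - get:
          url: "/api/users"
      - think: 1
      - get:
          url: "/api/products"
```

This configuration runs a single 60-second phase in which the arrival rate of new virtual users ramps from 5 to 50 per second. Each virtual user runs the scenario once: it requests /api/users, pauses for one second (think: 1), and then requests /api/products. Because every scenario makes two requests, the request rate is roughly twice the arrival rate.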
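Before running the test, you can estimate how much load this phase should generate. The sketch below assumes the arrival rate rises linearly from arrivalRate to rampTo (Artillery's actual per-second schedule may differ slightly), so the average rate is (5 + 50) / 2 = 27.5 new virtual users per second; `estimateRampLoad` is a hypothetical helper.

```javascript
// Estimate the load generated by a linear ramp phase (an approximation of Artillery's rampTo).
function estimateRampLoad({ durationSec, arrivalRate, rampTo, requestsPerScenario }) {
  const avgArrivalRate = (arrivalRate + rampTo) / 2; // mean of a linear ramp
  const scenarios = durationSec * avgArrivalRate;
  return { scenarios, requests: scenarios * requestsPerScenario };
}

// Values from load-test.yml: 60 s, 5 -> 50 arrivals/s, two GET requests per scenario.
console.log(estimateRampLoad({ durationSec: 60, arrivalRate: 5, rampTo: 50, requestsPerScenario: 2 }));
// { scenarios: 1650, requests: 3300 }
```

These estimates match the scenario and request counts in the example report below, which is a quick way to confirm a test generated the load you intended. Little's Law gives a rough concurrency figure too: each scenario takes about 1.3 s on a lightly loaded server (100 ms + 1 s of think time + 200 ms), so at the peak rate of 50 arrivals per second about 65 virtual users are active at once, and more if responses slow down.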

Running the Load Test

To run the load test:

Start your ExpressJS server.

In a separate terminal, run:

```bash
artillery run load-test.yml
```

Analyzing the Results

Artillery will provide a summary of the test results, including:

- Completed requests

- Request rate

- Response time statistics

- HTTP response codes

Here's an example of how to interpret these results:

```text
Summary report @ 12:34:56
  Scenarios launched: 1650
  Scenarios completed: 1650
  Requests completed: 3300
  Mean response/sec: 55.02
  Response time (msec):
    min: 101.9
    max: 1022.3
    median: 204.7
    p95: 504.5
    p99: 702.1
  Scenario counts:
    API test: 1650 (100%)
  Codes:
    200: 3300
```

This output tells us:

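- All 3300 requests completed successfully, each with a 200 status code

- The API completed an average of 55.02 requests per second (throughput)

- 95% of requests completed in 504.5 ms or less

- The maximum response time was 1022.3 ms

- The p99 (702.1 ms) is more than three times the median (204.7 ms), even though the slowest simulated query takes only 200 ms, so some requests are spending significant time waiting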

Optimizing Based on Results

Based on these results, you might consider optimizations such as:

Implementing caching to reduce database query times

Scaling your API horizontally to handle more concurrent requests

Optimizing database queries or indexes

Here's an example of how you might implement simple caching:

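```javascript
// Requires: npm install express node-cache
const express = require('express');
const NodeCache = require('node-cache');

const app = express();
const port = 3000;

// stdTTL: entries expire after 100 seconds; checkperiod: expired entries are purged every 120 seconds
const cache = new NodeCache({ stdTTL: 100, checkperiod: 120 });

function simulateDbQuery(delay) {
  return new Promise(resolve => setTimeout(resolve, delay));
}

app.get('/api/users', async (req, res) => {
  const cacheKey = 'users';
  const cachedData = cache.get(cacheKey); // undefined on a cache miss
  if (cachedData !== undefined) {
    return res.json(cachedData); // cache hit: skip the simulated query
  }

  await simulateDbQuery(100);
  const data = { users: ['Alice', 'Bob', 'Charlie'] };
  cache.set(cacheKey, data); // store for subsequent requests
  res.json(data);
});

// Similar caching for /api/products

app.listen(port, () => {
  console.log(`API server running on port ${port}`);
});
```

After implementing optimizations, run your load tests again to measure the improvement. For real endpoints whose responses depend on request parameters, vary the test data realistically; if every test request hits the same cache key, the retest mostly measures the cache rather than production-like traffic.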
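One load-related caveat with cache-aside code like the above: when an entry is missing or expires, every request that arrives before the first query finishes also misses and queries the database (a "cache stampede"). A common mitigation is to share one in-flight promise per key. The sketch below uses a plain Map instead of node-cache to stay dependency-free; `cachedFetch` and its TTL handling are hypothetical, not part of any library.

```javascript
// Minimal cache-aside helper that also de-duplicates concurrent misses.
const entries = new Map();  // key -> { value, expiresAt }
const inFlight = new Map(); // key -> Promise for a load already in progress

async function cachedFetch(key, ttlMs, loader) {
  const hit = entries.get(key);
  if (hit && hit.expiresAt > Date.now()) return hit.value;

  // Reuse a load that is already running for this key instead of starting another.
  if (inFlight.has(key)) return inFlight.get(key);

  // The loader runs asynchronously, so the promise is registered before it starts.
  const pending = Promise.resolve()
    .then(loader)
    .then((value) => {
      entries.set(key, { value, expiresAt: Date.now() + ttlMs });
      return value;
    })
    .finally(() => inFlight.delete(key)); // allow a fresh load after success or failure
  inFlight.set(key, pending);
  return pending;
}

// Possible use in a route (hypothetical):
// app.get('/api/users', async (req, res) => {
//   const data = await cachedFetch('users', 100000, async () => {
//     await simulateDbQuery(100);
//     return { users: ['Alice', 'Bob', 'Charlie'] };
//   });
//   res.json(data);
// });
```

Under a load test, this limits database queries to one per key each time the entry expires, instead of one per concurrent request that misses.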

Best Practices for API Load Testing

Start Early: Integrate load testing into your development process from the beginning.

Test Regularly: Conduct load tests regularly, especially before major releases or expected traffic spikes.

Use Realistic Data: Ensure your test data closely resembles real-world scenarios.

Consider Geographic Distribution: If your API serves a global audience, simulate requests from different geographic locations.

Test Edge Cases: Don't just test average scenarios; include edge cases and worst-case scenarios in your tests.

Monitor All Layers: Keep an eye on all layers of your application stack during testing, including databases and third-party services.

Automate Where Possible: Automate your load testing process to ensure consistency and save time.

Correlate Metrics: Look at relationships between different metrics (e.g., how increased load affects memory usage) for deeper insights.

Test Failure Recovery: Include scenarios where parts of your system fail to ensure graceful degradation and recovery.

Advanced Load Testing Techniques

As you become more proficient with basic load testing, consider exploring these advanced techniques:

Chaos Engineering: Intentionally introduce failures into your system during load tests to ensure resilience.

Performance Profiling: Use tools such as Node.js's built-in V8 profiler (node --prof) or third-party APM solutions to dive deep into performance bottlenecks.

Database Load Testing: Specifically target your database with read/write intensive operations to ensure it can handle the load.

API Composition Testing: If your API relies on other services, test how it performs when those services are under load or failing.

Contract Testing: Ensure that your API adheres to its contract even under high load conditions.
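For chaos-style experiments, a simple starting point is middleware that randomly fails or delays a fraction of requests while the load test runs. This is a hypothetical sketch in Express middleware style, `(req, res, next)`, not a library API; the rates and the FAULT_INJECTION environment variable in the usage comment are assumptions, and it should only be enabled in a test environment.

```javascript
// Express-style middleware that injects errors and latency into a fraction of requests.
// delayRate applies only to requests that were not already failed.
// `random` is injectable so behavior can be made deterministic in tests.
function faultInjection({ errorRate = 0.05, delayRate = 0.1, delayMs = 500, random = Math.random } = {}) {
  return (req, res, next) => {
    if (random() < errorRate) {
      // Simulate a failing dependency.
      return res.status(503).json({ error: "injected fault" });
    }
    if (random() < delayRate) {
      // Simulate a slow dependency, then continue normally.
      return setTimeout(next, delayMs);
    }
    return next();
  };
}

// Usage (hypothetical): only enable outside production.
// if (process.env.FAULT_INJECTION === '1') {
//   app.use(faultInjection({ errorRate: 0.02, delayRate: 0.05, delayMs: 1000 }));
// }
```

Watching error rates and latency across the rest of the system while faults are injected shows whether timeouts, retries, and fallbacks behave as intended.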

Conclusion

Load testing is an essential practice for maintaining a robust and scalable API. By following the steps and best practices outlined in this guide, you can ensure your API performs well under pressure, providing a seamless experience for your users and supporting your business growth.

Remember, load testing is not a one-time activity but an ongoing process. As your API evolves and your user base grows, regular load testing will help you stay ahead of performance issues and deliver a consistently high-quality service.

By combining this theory with hands-on tools like ExpressJS and Artillery, you can build a comprehensive load testing strategy that keeps your API performing at its best as its traffic and complexity grow.