Detailed Guide to Load Balancing in Cloud Computing

What is load balancing?

- Load balancing is the process of distributing traffic evenly across multiple servers or instances of an application. Instead of routing all traffic to a single server, which can become a bottleneck, a load balancer ensures that requests are efficiently distributed.

- The goal is to optimize resource utilization, avoid overload on any single server, and ensure the system can scale as demand increases.

- In cloud computing, load balancing is critical to achieving high availability and scalability. It helps cloud-based applications manage resources and traffic efficiently, minimizing downtime and preventing performance degradation during peak usage periods.

Types of Load Balancers


Load balancers come in different types based on their functionality, deployment, and the layers of the OSI model they operate on.

Hardware Load Balancers

Description: These are physical devices specifically designed for load balancing and are usually deployed in data centers. They are highly efficient and often come with advanced features.

Key Features:

- High performance due to purpose-built hardware.

- Can handle massive amounts of traffic.

- Advanced features like SSL termination, DDoS protection, and application acceleration.

Examples: F5 BIG-IP, Citrix ADC (formerly NetScaler).

Use Case: Large-scale enterprise applications requiring high throughput and reliability.

Software Load Balancers

Description: These are software-based solutions that can be installed on standard servers or virtual machines. They are more flexible and cost-effective than hardware load balancers.

Key Features:

Examples: Nginx, HAProxy (both appear in the configuration examples later in this guide).

Use Case: Medium to large-scale applications, especially in cloud environments or containerized architectures.

Cloud Load Balancers

Description: These are managed load balancing services provided by cloud providers, offering scalability and ease of use.

Key Features:

- Fully managed by the cloud provider.

- Seamless integration with other cloud services.

- Auto-scaling and pay-as-you-go pricing.

Examples:

Use Case: Applications deployed in the cloud, such as SaaS products, microservices, and high-availability systems.

Layer 4 Load Balancers

Description: Operate at the transport layer (Layer 4 of the OSI model), routing traffic based on IP address and TCP/UDP ports.

Key Features:

Examples:

Use Case: Applications that require minimal latency and protocol-level routing.

Layer 7 Load Balancers

Description: Operate at the application layer (Layer 7 of the OSI model), routing traffic based on application-specific data like HTTP headers, URLs, or cookies.

Key Features:

Examples:

Use Case: Web applications, APIs, and microservices needing intelligent traffic distribution.
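To make the Layer 7 idea concrete, here is a minimal Python sketch of a routing decision based on application data. The pool names, the path prefixes, and the X-Canary header are hypothetical and chosen only for illustration; a real load balancer expresses such rules in its own configuration language.

```python
# Hypothetical routing table: URL path prefix -> backend pool name.
ROUTES = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(path, headers=None):
    """Pick a backend pool from request data that only Layer 7 can see."""
    headers = headers or {}
    # Header-based rule (X-Canary is an assumed header name).
    if headers.get("X-Canary") == "1":
        return "canary-pool"
    # URL-based rules: the first matching prefix wins.
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(choose_pool("/api/users"))               # api-pool
print(choose_pool("/index.html"))              # web-pool
print(choose_pool("/api/users", {"X-Canary": "1"}))  # canary-pool
```

A Layer 4 load balancer could not make any of these decisions, because it never inspects the path or the headers.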

Load Balancing Techniques

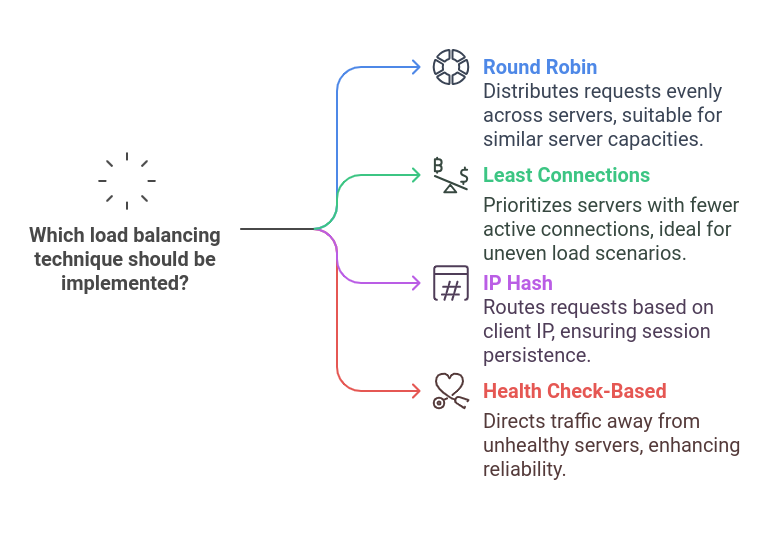

Round Robin Load Balancing

Example Use Case: You have a simple web application with multiple servers, and each server has roughly the same computing power.

How it works: When a user sends a request, the load balancer sends it to the first server in the list. On the next request, it sends the request to the second server, and so on. Once it reaches the end of the list, it starts over.

Practical Example: Let’s assume you have three servers (Server A, Server B, Server C) and a round-robin load balancer.

- Request 1 → Sent to Server A

- Request 2 → Sent to Server B

- Request 3 → Sent to Server C

- Request 4 → Sent to Server A (repeats)

    # Configuring a round-robin load balancing method in Nginx (web server)
    http {
        upstream backend {
            server 192.168.1.1;
            server 192.168.1.2;
            server 192.168.1.3;
        }

        server {
            location / {
                proxy_pass http://backend;
            }
        }
    }

In this example, requests are equally distributed across all servers without considering their load.
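The rotation itself takes only a few lines to express. The following is a minimal Python sketch of the idea, not a description of how Nginx implements it; the class name RoundRobinBalancer and the server labels are assumptions made for illustration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() restarts from the first server after the last one.
        self._rotation = cycle(servers)

    def next_server(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["Server A", "Server B", "Server C"])
picks = [balancer.next_server() for _ in range(4)]
print(picks)  # ['Server A', 'Server B', 'Server C', 'Server A']
```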

Least Connections Load Balancing

Example Use Case: Your application servers have varying performance capabilities, and you want to balance the load based on the number of active connections each server is handling.

How it works: The load balancer directs incoming requests to the server with the fewest active connections. This ensures that no server is overwhelmed with too many requests.

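Practical Example: You have three servers (Server A, Server B, Server C). Server A is handling 10 active connections, Server B is handling 5, and Server C is handling 3. Assuming no existing connection closes in the meantime:

- Request 1 → Sent to Server C (fewest connections: 3, now 4)

- Request 2 → Sent to Server C (still the fewest: 4, now 5)

- Request 3 → Sent to Server B (tied with Server C at 5; how ties are broken depends on the implementation)

- Request 4 → Sent to Server C (fewest again: 5, now 6)

Server A receives no new requests until the other servers reach its connection count or some of its own connections close.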

    # Configuring least connections in HAProxy (another load balancing solution)
    frontend http
        bind *:80
        default_backend servers

    backend servers
        balance leastconn
        server server1 192.168.1.1:80 check
        server server2 192.168.1.2:80 check
        server server3 192.168.1.3:80 check

In this case, the load balancer will prioritize sending traffic to Server C, the least loaded server.
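The selection rule can be sketched in Python as follows. This is an illustrative model only, not HAProxy's implementation: the class name LeastConnectionsBalancer is an assumption, the starting counts (10, 5, 3) are taken from the example above, and ties are broken by list order, which is a choice made for this sketch.

```python
class LeastConnectionsBalancer:
    """Tracks active connections and picks the least busy server."""

    def __init__(self, active_connections):
        # Maps server name -> number of currently active connections.
        self.active = dict(active_connections)

    def acquire(self):
        # min() returns the first server with the lowest count,
        # so ties fall to whichever server was listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to the server finishes.
        self.active[server] -= 1


balancer = LeastConnectionsBalancer(
    {"Server A": 10, "Server B": 5, "Server C": 3}
)
picks = [balancer.acquire() for _ in range(4)]
print(picks)  # ['Server C', 'Server C', 'Server B', 'Server C']
```

Because every assignment raises the chosen server's count, the least loaded server changes as requests arrive, and Server A stays idle until the others catch up or its connections are released.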

IP Hash Load Balancing

Example Use Case: Your application needs to ensure that a particular client consistently connects to the same backend server (sticky sessions), and you use the client’s IP address for this.

How it works: The load balancer uses a hash function to map the client’s IP address to a specific server. This ensures that all subsequent requests from the same client go to the same server.

Practical Example: Client IP 192.168.1.100 might always be routed to Server A, while client IP 192.168.1.101 might always be routed to Server B.

    # Nginx IP hash configuration example
    http {
        upstream backend {
            hash $remote_addr consistent;
            server 192.168.1.1;
            server 192.168.1.2;
            server 192.168.1.3;
        }

        server {
            location / {
                proxy_pass http://backend;
            }
        }
    }

With this configuration, requests from the client with the IP address 192.168.1.100 keep going to the same backend server, which maintains session persistence. The consistent parameter enables consistent hashing, so adding or removing a server remaps only a small share of clients.
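The mapping from client IP to server can be sketched in Python. Note that this sketch uses simple modulo hashing, which is easier to read but is not the consistent hashing scheme that the Nginx configuration above requests; with modulo hashing, changing the number of servers remaps most clients. The function name pick_server and the server labels are assumptions for illustration.

```python
import hashlib

SERVERS = ["Server A", "Server B", "Server C"]


def pick_server(client_ip, servers=SERVERS):
    """Map a client IP to a server deterministically."""
    # A stable hash is required: Python's built-in hash() is salted
    # per process for strings, so it would not survive a restart.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


first = pick_server("192.168.1.100")
second = pick_server("192.168.1.100")
print(first == second)  # True: the same client always maps to the same server
```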

Weighted Load Balancing

Example Use Case: Some servers in your application pool are more powerful than others, so you want to send more traffic to the more powerful servers.

How it works: The load balancer assigns a weight to each server. Servers with higher weights receive more traffic, while servers with lower weights receive less.

Practical Example: You have three servers:

- Server A (weight 5)

- Server B (weight 2)

- Server C (weight 1)

Out of every 8 requests (the sum of the weights), Server A receives 5, Server B receives 2, and Server C receives 1. Here’s how one such cycle might be ordered:

- Request 1 → Sent to Server A (weight 5)

- Request 2 → Sent to Server A (weight 5)

- Request 3 → Sent to Server B (weight 2)

- Request 4 → Sent to Server A (weight 5)

- Request 5 → Sent to Server B (weight 2)

- Request 6 → Sent to Server C (weight 1)

- Request 7 → Sent to Server A (weight 5)

- Request 8 → Sent to Server A (weight 5)

    # Weighted load balancing in Nginx
    http {
        upstream backend {
            server 192.168.1.1 weight=5;
            server 192.168.1.2 weight=2;
            server 192.168.1.3 weight=1;
        }

        server {
            location / {
                proxy_pass http://backend;
            }
        }
    }

Here, Server A handles most of the traffic because it has the highest weight, and Server C receives the least amount of traffic due to its low weight.
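One way to turn weights into a request order is a "smooth" weighted round robin, which interleaves servers instead of sending bursts to the heaviest one. The Python sketch below is one possible variant under that assumption; the class name SmoothWeightedBalancer is hypothetical, and the exact ordering a given load balancer produces may differ.

```python
from collections import Counter


class SmoothWeightedBalancer:
    """Weighted round robin that spreads picks across the cycle."""

    def __init__(self, weights):
        self.weights = dict(weights)            # server -> configured weight
        self.total = sum(self.weights.values())
        self.current = {name: 0 for name in self.weights}

    def next_server(self):
        # Every server gains its weight, the leader is chosen,
        # and the leader is then penalized by the total weight.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


balancer = SmoothWeightedBalancer({"Server A": 5, "Server B": 2, "Server C": 1})
picks = [balancer.next_server() for _ in range(8)]
counts = Counter(picks)
print(counts["Server A"], counts["Server B"], counts["Server C"])  # 5 2 1
```

Whatever the ordering, each full cycle of 8 requests matches the 5:2:1 ratio of the weights.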

Health Check-Based Load Balancing

Example Use Case: You need to ensure that traffic is only directed to servers that are currently functioning and healthy.

How it works: The load balancer regularly performs health checks on the servers in the pool. If a server fails the health check, the load balancer stops sending traffic to it until it passes the check again.

Practical Example: You have three servers, and you want the load balancer to only send traffic to servers that are responsive. If Server A becomes unresponsive, it will not receive new traffic.

    # Health check configuration example in HAProxy
    frontend http
        bind *:80
        default_backend servers

    backend servers
        balance roundrobin
        option httpchk GET /health
        server server1 192.168.1.1:80 check
        server server2 192.168.1.2:80 check
        server server3 192.168.1.3:80 check

The health check ensures that traffic is directed only to servers that are healthy and capable of handling requests.
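The interaction between health checks and server selection can be sketched in Python. This is a simplified model, not HAProxy's behavior in detail: the class name HealthCheckedPool is hypothetical, and the probe is an injected function standing in for a real HTTP request to an endpoint such as /health.

```python
class HealthCheckedPool:
    """Round robin over the servers that passed the last health check."""

    def __init__(self, servers, probe):
        self.servers = list(servers)
        self.probe = probe                 # callable: server -> bool
        self.healthy = set(self.servers)   # assume healthy until checked
        self._position = 0

    def run_health_checks(self):
        # A real load balancer runs this periodically in the background.
        self.healthy = {s for s in self.servers if self.probe(s)}

    def next_server(self):
        # Try each server at most once, skipping unhealthy ones.
        for _ in range(len(self.servers)):
            server = self.servers[self._position % len(self.servers)]
            self._position += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


# Simulated probe results: Server A is currently unresponsive.
status = {"Server A": False, "Server B": True, "Server C": True}
pool = HealthCheckedPool(status, probe=lambda server: status[server])
pool.run_health_checks()
picks = [pool.next_server() for _ in range(4)]
print(picks)  # ['Server B', 'Server C', 'Server B', 'Server C']
```

Once Server A passes a later check, it rejoins the rotation without any other change to the pool.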

Advantages

- Distributes traffic across multiple servers, ensuring no single server is overwhelmed, which enhances response times and application performance.

- Easily accommodates increasing traffic by adding more servers to the load balancer's pool.

- Ensures servers are utilized optimally, reducing idle resources and preventing overloading.

Disadvantages

- Hardware load balancers and advanced cloud load balancing services can be expensive.

- Administrators need expertise to configure and optimize load balancers effectively.

- Configuring and maintaining load balancers, especially in hybrid or multi-cloud environments, can be challenging.

Conclusion


- Load balancing is an essential part of cloud computing that ensures optimal resource utilization, high availability, and scalability. By effectively distributing traffic across multiple servers or resources, it enhances the performance, fault tolerance, and reliability of cloud applications.

- Whether you're dealing with web applications, microservices, or other cloud-based workloads, a well-configured load balancing strategy is crucial to maintaining a smooth and responsive user experience.

- As cloud applications continue to grow in complexity and user demand, load balancing will remain an integral part of ensuring that these applications can scale dynamically and deliver reliable services to users worldwide.

- Whether you're using round-robin, least connections, or more advanced techniques, load balancing remains an essential tool for managing cloud traffic efficiently.