Top 7 MLOps Platforms to Use in 2025

Getting AI models from a data scientist's laptop into production isn't as simple as clicking "deploy." As someone who's been in the trenches with ML teams, I've seen firsthand how the right MLOps platform can be the difference between a model that collects dust and one that delivers real business value.

In 2025, companies aren't just asking whether they should implement machine learning; they're asking how to do it better and faster. Let's break down the MLOps platforms that are making waves this year, based on what's actually working for teams across different industries.

What Makes a Good MLOps Platform in 2025?

Before diving into specific platforms, let's talk about what matters when choosing one:

Complete workflow coverage

Does it handle everything from training to deployment to keeping an eye on your models once they're live?

Performance at scale

Can it handle your growing model catalog without slowing to a crawl?

Plays well with others

How easily does it connect with your existing tools and data sources?

Learning curve

Will your team need six months of training, or can they hit the ground running?

Cost structure

Are you paying for what you actually use, or for features you'll never touch?

Community backing

Is there a helpful community when you inevitably get stuck at 2 AM?
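If you want to turn these six criteria into something you can argue about in a meeting, a weighted scoring matrix is one simple option. The sketch below is only an illustration: the weights, the 1-5 scores, and the names "Platform A" and "Platform B" are placeholders I made up, not evaluations of any real product.

```python
# Hypothetical weights for the six criteria above; adjust to your priorities.
# They are chosen to sum to 1.0 so the result stays on the 1-5 scale.
WEIGHTS = {
    "workflow_coverage": 0.25,
    "performance_at_scale": 0.20,
    "integration": 0.20,
    "learning_curve": 0.15,
    "cost_structure": 0.10,
    "community": 0.10,
}


def weighted_score(scores: dict, weights: dict = WEIGHTS) -> float:
    """Weighted average of per-criterion scores (1 = poor, 5 = excellent)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


def rank_platforms(candidates: dict) -> list:
    """Return platform names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda p: weighted_score(candidates[p]), reverse=True)


# Placeholder scores for two imaginary platforms.
CANDIDATES = {
    "Platform A": {
        "workflow_coverage": 5, "performance_at_scale": 4, "integration": 3,
        "learning_curve": 2, "cost_structure": 3, "community": 4,
    },
    "Platform B": {
        "workflow_coverage": 3, "performance_at_scale": 3, "integration": 5,
        "learning_curve": 5, "cost_structure": 4, "community": 3,
    },
}

if __name__ == "__main__":
    for name in rank_platforms(CANDIDATES):
        print(f"{name}: {weighted_score(CANDIDATES[name]):.2f}")
```

The useful part is usually not the final number but the conversation about the weights: a team that rates "learning curve" at 0.15 is making a different bet than one that rates it at 0.40.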

Platforms

Full Machine Learning Lifecycle Platforms

1. MLflow Enterprise

MLflow has come a long way from its open-source roots. The Enterprise version packs a punch for teams that need both flexibility and structure.

What makes it stand out

End-to-end tracking that captures everything from initial experiments to production metrics

Model registry that works like a true version control system for ML

Incredibly flexible deployment options (cloud, on-prem, hybrid)

Use cases

Finance teams using it for risk assessment models where tracking decision lineage is crucial for compliance

E-commerce companies managing hundreds of product recommendation models with different refresh cadences

Research groups that need to maintain reproducibility while rapidly iterating on models

Recent improvements

The 2024 Q4 update added advanced drift detection that doesn't just tell you that something's wrong but gives you actionable insights on why.
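For readers new to the idea, drift detection generally means comparing the distribution of live inputs against a training-time baseline. Here is a minimal sketch using the Population Stability Index (PSI), a common generic technique. To be clear, this is not how MLflow implements drift detection; the function names, the bin count, and the 0.2 threshold (a widely used rule of thumb) are all assumptions for illustration.

```python
import math


def population_stability_index(baseline, live, bins=10, eps=1e-6):
    """PSI between a baseline sample and a live sample of one numeric feature."""
    if not baseline or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    # Bin edges come from the baseline; fall back to width 1.0 if it is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def has_drifted(baseline, live, threshold=0.2):
    """Flag drift when PSI exceeds the (assumed) rule-of-thumb threshold."""
    return population_stability_index(baseline, live) > threshold
```

A score like this only tells you that a feature has shifted. Explaining why, which is the part the update above is credited with, requires per-feature breakdowns and domain context on top.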

Pricing

Starts at $2,000/month for small teams, with enterprise pricing based on model volume and users. Not the cheapest option, but the ROI is clear for teams losing time to manual processes.

Limitations

The flexibility is both a strength and a weakness: you'll need to make some architectural decisions yourself rather than getting a completely turnkey solution.

2. Kubeflow Plus

Kubernetes-native and built for teams that want control over their infrastructure while avoiding reinventing the wheel.

What makes it stand out

Exceptional container orchestration that meshes naturally with existing DevOps practices

Pipeline automation that handles complex multi-step workflows

Strong integration with major cloud providers' ML services

Use cases

Healthcare analytics organizations managing hundreds of patient outcome models with varying data requirements

Manufacturing companies deploying models directly to edge devices in factories

Multi-cloud enterprises needing consistent ML workflows across different environments

Recent improvements

The new GUI overhaul in early 2025 finally made it accessible to team members without deep Kubernetes knowledge.

Pricing

The core is open source, with support packages starting at $1,500/month. Enterprise features like advanced security come at additional cost.

Limitations

Still requires more technical know-how than some alternatives, particularly for initial setup.

3. Databricks Machine Learning

Building on their strong data processing foundation, Databricks has created an MLOps environment that naturally extends their lakehouse architecture.

What makes it stand out

Unified analytics and ML platform that eliminates data transfer headaches

Feature engineering capabilities that leverage the power of Spark

Collaborative notebooks that have evolved into true development environments

Use cases

Media companies building recommendation engines directly on viewing data lakes

Retail organizations creating demand forecasting models using petabytes of transaction data

IoT companies processing and modeling sensor data from thousands of devices

Recent improvements

The January 2025 release added advanced explainability tools that help teams understand model decisions without becoming AI experts.

Pricing

Subscription-based with workspaces starting around $3,000/month. Costs increase with compute usage and user count.

Limitations

Getting the most value requires going all-in on the Databricks ecosystem, which may not align with existing investments.

Cloud-Based Machine Learning Platforms

4. Azure Machine Learning Studio

Microsoft's offering has evolved from a somewhat disconnected set of tools into a cohesive platform that particularly shines for teams already invested in the Microsoft ecosystem.

What makes it stand out

Exceptionally smooth integration with Azure data services

No-code options that actually work for straightforward use cases

Enterprise-grade security and compliance features

Use cases

Retail analytics teams maintaining price optimization models connected to Azure data warehouses

Insurance companies using automated ML for policy underwriting models

Healthcare providers deploying compliant patient risk models integrated with existing Microsoft-based systems

Recent improvements

The February 2025 update brought much-needed improvements to the model monitoring dashboard and added automated documentation generation.

Pricing

Consumption-based model with typical costs ranging from $500 to $5,000/month depending on workload. Steady workloads are easy to budget for, but costs can spike if you're not careful with compute resources.

Limitations

While it works with non-Microsoft tools, you'll definitely feel the friction if your stack isn't Azure-centric.

5. SageMaker Studio Pro

Amazon's advanced MLOps offering continues to be a powerhouse for teams that live in the AWS ecosystem.

What makes it stand out

Unmatched compute options from tiny instances to massive distributed clusters

Feature store that actually saves time rather than creating more work

Built-in experiment tracking that doesn't feel bolted on

Use cases

E-commerce platforms handling millions of personalized product recommendations

Media streaming services managing content recommendation engines with feast-or-famine traffic patterns

FinTech startups processing large volumes of transaction data for fraud detection

Recent improvements

The new "Model Cards" feature introduced in March 2025 has dramatically improved handoffs between data science and operations teams.

Pricing

Pay-as-you-go model based on compute time, storage, and endpoints. Medium-sized teams typically spend $1,000-$7,000/month.
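To see how a pay-as-you-go bill adds up across those three components, here is a back-of-the-envelope sketch. Every rate in it is a made-up placeholder, not a real AWS price, and the `Usage` fields are my own simplification of what a bill contains.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    training_hours: float   # total compute hours spent on training jobs
    endpoint_hours: float   # hours of hosted inference endpoints (all endpoints summed)
    storage_gb: float       # average GB stored over the month


# Hypothetical unit prices in USD. These are NOT real AWS rates.
RATES = {
    "training_hour": 3.00,
    "endpoint_hour": 0.50,
    "storage_gb_month": 0.02,
}


def monthly_cost(usage: Usage, rates: dict = RATES) -> float:
    """Sum the three usage-based components into one monthly estimate."""
    return (
        usage.training_hours * rates["training_hour"]
        + usage.endpoint_hours * rates["endpoint_hour"]
        + usage.storage_gb * rates["storage_gb_month"]
    )


if __name__ == "__main__":
    # Two endpoints running around the clock for a 30-day month = 1,440 endpoint hours.
    example = Usage(training_hours=100, endpoint_hours=2 * 24 * 30, storage_gb=500)
    print(f"Estimated monthly cost: ${monthly_cost(example):,.2f}")
```

With these placeholder numbers, the always-on endpoints cost more than the training runs, which is a pattern worth checking for in your own usage before assuming training is the expensive part.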

Limitations

The sheer number of options and configurations can be overwhelming, and AWS-specific terminology creates a learning curve.

6. Google Vertex AI

Google's consolidated ML platform brings together their various AI services with a focus on making advanced capabilities accessible.

What makes it stand out

Pre-trained API access that lets you tap into Google's powerful foundation models

AutoML capabilities that actually produce usable models

Exceptional tools for working with unstructured data like images and text

Use cases

Legal tech companies extracting and classifying information from contracts and documents

Customer service teams building and deploying chatbots with minimal technical expertise

Healthcare researchers leveraging both custom and pre-trained models for medical imaging

Recent improvements

The feature flag system added in December 2024 has made it much easier to gradually roll out model updates to subsets of users.
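Gradual rollout to a subset of users is commonly built on a simple idea: hash a stable user identifier into a bucket from 0 to 99 and compare it with the rollout percentage. The sketch below shows that generic pattern only. It is not the Vertex AI API, and `rollout_bucket`, `in_rollout`, `choose_model`, and the flag name `model-v2` are hypothetical names I picked for illustration.

```python
import hashlib


def rollout_bucket(user_id: str, flag: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    # The first 8 hex characters give a 32-bit integer; reduce it to 0-99.
    return int(digest[:8], 16) % 100


def in_rollout(user_id: str, flag: str, percent: int) -> bool:
    """True if this user falls inside the first `percent` buckets."""
    return rollout_bucket(user_id, flag) < percent


def choose_model(user_id: str, percent: int, flag: str = "model-v2") -> str:
    """Route a user to the candidate model or keep them on the stable one."""
    return "candidate" if in_rollout(user_id, flag, percent) else "stable"
```

Because the bucket depends only on the flag name and the user ID, a user who sees the candidate model at 10% keeps seeing it when you raise the rollout to 50%, which keeps the experience consistent during the ramp.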

Pricing

Complex but transparent pricing model based on training compute, prediction requests, and storage. Typical mid-size implementations run $1,500-$8,000/month.

Limitations

Still shows some rough edges where previously separate Google AI products were integrated.

Experiment Tracking and Model Management

7. Weights & Biases Enterprise

What started as a tool for experiment tracking has blossomed into a full-featured MLOps platform with particular strengths in team collaboration.

What makes it stand out

Visualization tools that actually help debug models

Team spaces that facilitate knowledge sharing without forcing rigid workflows

Artifact management that prevents "where's the latest model?" confusion

Use cases

Computer vision teams collaborating on object detection models across multiple sites

Research organizations that need to maintain visibility into parallel experimentation

Educational institutions training students on industry-standard ML workflows

Recent improvements

The project templates introduced in Q1 2025 give teams a running start with best practices baked in.

Pricing

Team plans start at $1,000/month for 10 users, with enterprise pricing based on storage and user counts.

Limitations

While deployment options have improved, its roots as an experiment tracking tool sometimes show when handling production workflows.

Platform Comparison

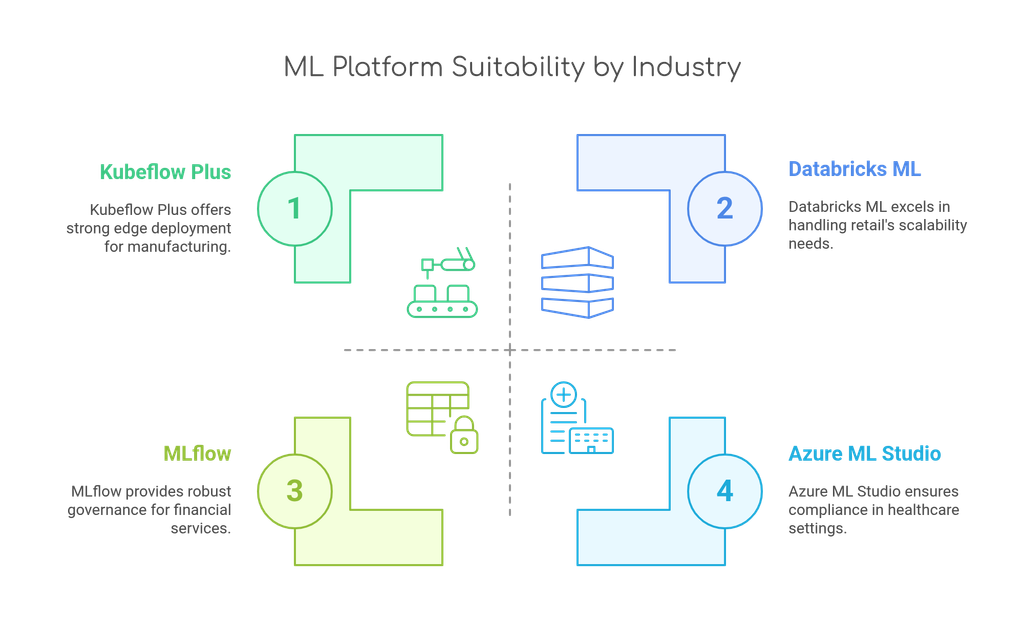

Industry-Specific Considerations

Financial Services

MLflow and SageMaker tend to excel here due to their strong governance features. A banking client I worked with chose MLflow specifically for its model versioning capabilities that helped them satisfy auditors.

Healthcare

Compliance features matter enormously here. Azure ML Studio and Google Vertex AI have strong HIPAA-friendly setups that save months of security review. One medical imaging team found that Vertex AI's specialized vision models gave them a huge head start.

Retail

SageMaker and Databricks are popular choices for their ability to handle seasonal spikes in demand. A major retailer I consulted with uses Databricks ML because their demand forecasting models need direct access to petabytes of transaction data.

Manufacturing

Edge deployment capabilities become crucial here. Kubeflow Plus has an edge in this space with its container-based approach that works well in factory environments with limited connectivity.

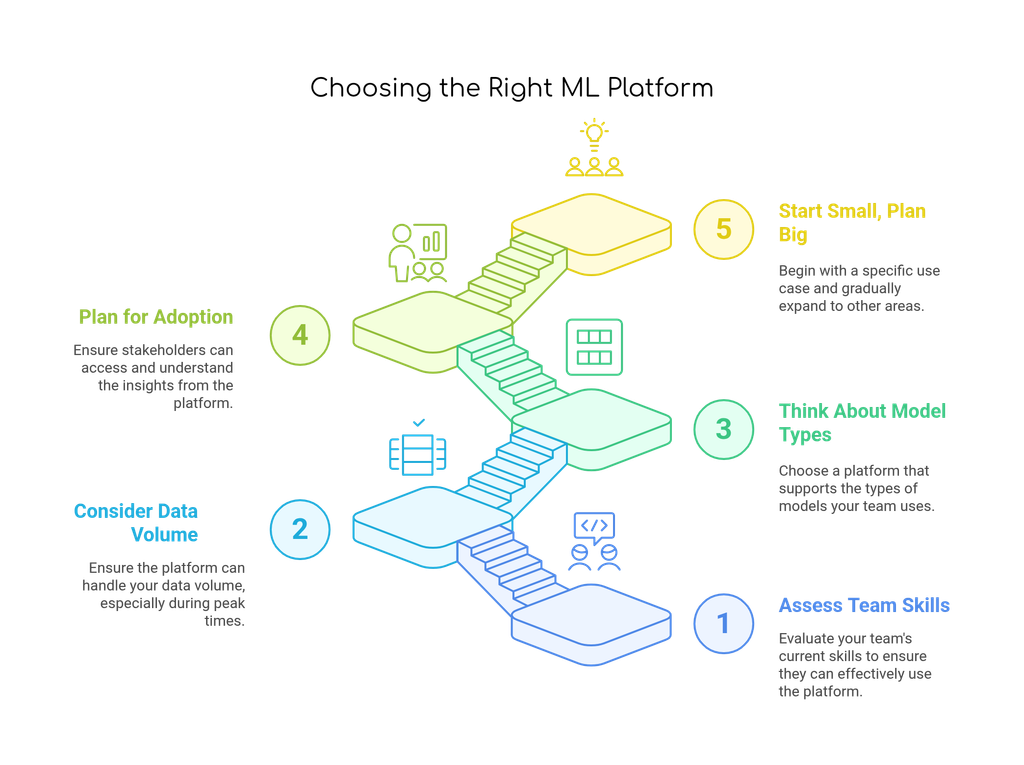

Making the Right Choice

After helping multiple teams implement these platforms, here's my practical advice:

Start with your team's skills

The best platform on paper might not be right if your team needs months to learn it. For instance, if you've got strong Kubernetes skills, Kubeflow's learning curve might not be an issue.

Consider your data volume

A retail client I worked with initially chose a platform that couldn't handle their holiday data surge, forcing a mid-year migration nightmare.

Think about model types

Teams working primarily with computer vision might prioritize different features than those focused on time-series forecasting.

Plan for organizational adoption

The most technically impressive platform won't help if stakeholders can't access insights. A manufacturing client succeeded with W&B largely because executives could understand the visualizations.

Start small but plan big

Begin with a specific use case rather than trying to migrate everything at once. A financial services team I advised started with just their fraud models on MLflow before expanding.

Migration Considerations

Switching MLOps platforms isn't like changing your email provider. You're looking at potential disruption to your entire ML workflow. Some practical tips from teams who've done it successfully:

Run old and new platforms in parallel during transition

Start with new models rather than migrating existing ones when possible

Budget 2-3x your expected timeline (seriously, it always takes longer)

Document your existing processes before migration, not during
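Running both platforms in parallel pays off most when you actually compare their outputs on the same inputs. A minimal sketch, assuming each platform's model can be wrapped as a plain Python callable that returns a numeric score; `old_predict`, `new_predict`, and the tolerance value are hypothetical stand-ins for whatever your serving clients look like.

```python
from typing import Callable, Iterable


def compare_in_parallel(
    inputs: Iterable,
    old_predict: Callable,
    new_predict: Callable,
    tolerance: float = 1e-6,
) -> dict:
    """Send each input to both models and record where their outputs disagree."""
    total = 0
    mismatches = []
    for x in inputs:
        total += 1
        old_y, new_y = old_predict(x), new_predict(x)
        if abs(old_y - new_y) > tolerance:
            mismatches.append((x, old_y, new_y))
    agreement = 1.0 - len(mismatches) / total if total else 1.0
    return {"total": total, "mismatches": mismatches, "agreement": agreement}


if __name__ == "__main__":
    # Toy stand-ins: the "new" model disagrees on exactly one input.
    report = compare_in_parallel(
        inputs=[1, 2, 3, 4],
        old_predict=lambda x: x * 2,
        new_predict=lambda x: 7 if x == 3 else x * 2,
    )
    print(report)
```

In practice you would keep serving the old platform's answer and only log the new one until the agreement rate is high enough to justify cutting over.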

What's Coming Next in MLOps

Looking toward late 2025 and beyond:

- Generative AI governance is becoming a core feature as more companies deploy LLMs

- Cross-platform standards are finally emerging, reducing vendor lock-in

- Automated ML lifecycle management is moving beyond just retraining to include data preparation and feature engineering

- Domain-specific platforms are gaining traction for industries with unique requirements

Bottom Line: Which Platform Should You Choose?

If you're looking for a recommendation without qualifiers, I'd point most teams toward MLflow Enterprise in 2025. Its combination of flexibility, strong fundamentals, and growing ecosystem makes it a solid choice that won't back you into a corner.

But the true answer is more nuanced:

Already heavily invested in AWS? SageMaker is your path of least resistance.

Working primarily with data in Azure? Azure ML Studio will save you countless integration headaches.

Need to collaborate across distributed teams? W&B Enterprise shines for visibility and communication.

Working with massive datasets? Databricks ML's integrated approach pays dividends.

The MLOps platform you choose in 2025 should fit not just your technical needs today, but where your ML practice is heading over the next few years. The cost of switching only goes up as you build more models and processes around a particular platform.

Conclusion

Selecting an MLOps platform is about more than features—it's about enabling your team to deliver real business impact through machine learning. The ideal platform should become nearly invisible, removing obstacles rather than creating new ones.

While MLflow Enterprise stands out as a versatile option for most teams in 2025, your specific cloud ecosystem (AWS, Azure), team composition, and data requirements should guide your choice. Start with a focused use case rather than attempting wholesale transformation, and prioritize platforms that can evolve with the rapidly changing ML landscape.

Remember that technology is only half the equation—organizational approach and team capabilities are equally crucial for success. The best MLOps investment is one that aligns with both your current needs and future machine learning ambitions.