Implementing a Convolutional Neural Network with Python

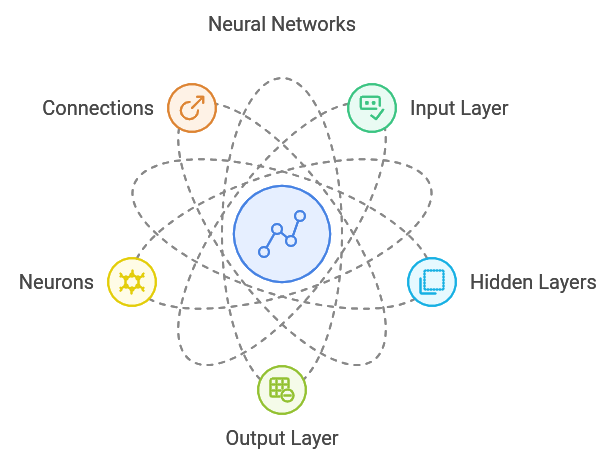

What is a Neural Network

- A neural network is a type of machine learning model whose design is loosely inspired by the human brain. It uses artificial neurons, simplified mathematical models of biological neurons, to learn patterns from data, analyze inputs, and arrive at conclusions.

- By connecting layers of artificial neurons, a neural network can detect complex relationships, weigh different inputs, and refine its accuracy over time, making it a powerful tool for tasks such as image recognition, language processing, and predictive analytics.

What is a CNN

- A convolutional neural network (CNN) is a specialized type of artificial neural network primarily used for image recognition and processing.

- By leveraging convolutional layers, CNNs are particularly effective at identifying patterns and features within images, making them ideal for tasks like object detection, facial recognition, and visual classification.
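To make the idea of a convolution concrete, here is a minimal NumPy sketch (an illustration, not how TensorFlow implements it) that slides a 3x3 filter across a tiny grayscale image. Like Keras's Conv2D layer, it computes a cross-correlation without flipping the filter, using stride 1 and no padding. The function name `conv2d` and the vertical-edge filter values are hand-picked for this example; in a real CNN, filter values are learned during training.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the filter with the image patch under it and sum the result
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x5 image: two dark columns (0) followed by three bright columns (1)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-picked vertical-edge detector (a CNN would learn its own values)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)
```

The output is 3 wherever the filter overlaps the boundary between the dark and bright columns and 0 where the image is uniformly bright, so the feature map highlights the edge.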

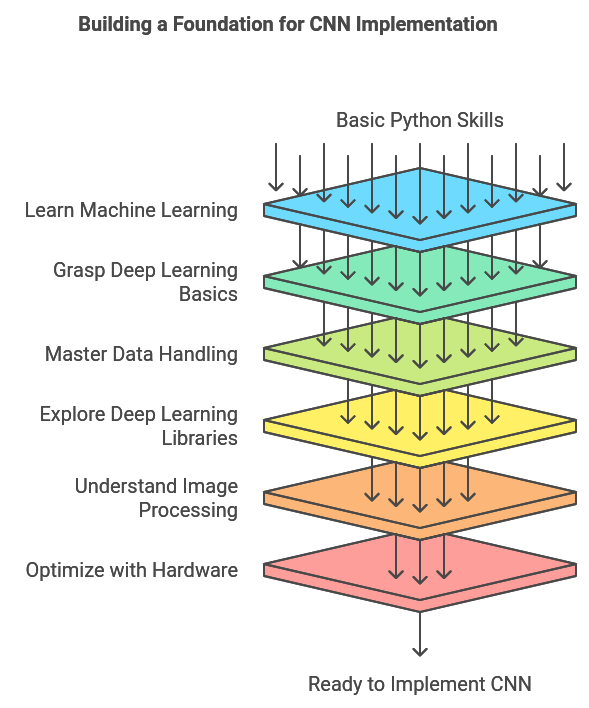

Prerequisites for Implementing a CNN

1. Basic Python Programming

- Familiarity with Python syntax, data structures (like lists and dictionaries), and object-oriented programming is essential.

2. Machine Learning Fundamentals

- Basic knowledge of machine learning concepts, such as supervised learning, training, validation, and test data splits, as well as overfitting and regularization.

3. Deep Learning Basics

- Familiarity with neural network structures, backpropagation, and activation functions (like ReLU and sigmoid).

- Understanding of key CNN concepts, such as convolution, pooling, stride, padding, and the architecture of typical CNN layers.

4. Python Libraries for Data Handling and Visualization

- NumPy: Essential for handling matrix operations and numerical computations.

- Pandas: Useful for data manipulation and preprocessing.

- Matplotlib/Seaborn: For visualizing data, including training results and performance metrics.

5. Deep Learning Libraries

- TensorFlow or PyTorch: These frameworks provide high-level APIs for building and training CNNs, with functions for defining layers, optimizers, and loss functions.

- Keras: A high-level API for TensorFlow that simplifies CNN architecture implementation and training.

6. Image Processing Knowledge

- Familiarity with image processing basics, such as understanding pixels, RGB values, and image transformations (resizing, normalization).

- OpenCV or Pillow (the maintained fork of PIL, the Python Imaging Library) can be helpful for preprocessing images before feeding them into a CNN.

7. GPU/Hardware Acceleration Setup (Optional but Recommended)

- Setting up CUDA and cuDNN (for NVIDIA GPUs) to leverage hardware acceleration can significantly speed up training for large datasets.

8. Basic Knowledge of Optimization Techniques

- Understanding optimization algorithms like stochastic gradient descent (SGD), Adam, and techniques like learning rate scheduling and batch normalization for better performance tuning.
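To illustrate the difference between plain SGD and Adam, the pure-Python sketch below minimizes a hypothetical one-parameter loss f(w) = (w - 3)^2 with both update rules. Adam's hyperparameters are the defaults from the original Adam paper (beta1=0.9, beta2=0.999, eps=1e-8); the learning rates and step counts are arbitrary choices for illustration. Frameworks like TensorFlow apply the same kind of update to every weight in the network.

```python
import math

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)**2, which is minimized at w = 3
    return 2 * (w - 3)

def sgd(w, lr=0.1, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)  # fixed-size step against the gradient
    return w

def adam(w, lr=0.1, steps=500, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # adaptive step size
    return w

print(sgd(0.0))
print(adam(0.0))
```

Both end up very close to the minimum at w = 3. The key design difference is that Adam divides each step by a running estimate of the gradient's magnitude, so its step size adapts per parameter instead of being fixed by the learning rate alone.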

Basics

Before diving into the steps, let’s quickly review the basics:

What is TensorFlow?

TensorFlow is an open-source machine learning library developed by Google. It is used for building and training neural networks, performing complex mathematical computations, and developing deep learning models. One of its key features is support for GPU acceleration.

What is Matplotlib?

Matplotlib is a comprehensive plotting and visualization library for Python, used to create static, animated, and interactive visualizations and to plot data in various formats (line plots, scatter plots, histograms, etc.).

What is NumPy?

NumPy is the fundamental package for numerical computing in Python. Its core features include a multi-dimensional array object, advanced mathematical functions, and linear algebra operations.

What are Keras datasets?

The keras.datasets module is part of the Keras deep learning library (now integrated into TensorFlow). It provides a collection of preloaded datasets for machine learning, such as:

- MNIST (handwritten digits)

- CIFAR-10 and CIFAR-100 (object recognition)

Steps

Step 1: Train the model

```python
import matplotlib.pyplot as plt
from tensorflow.keras import datasets, layers, models

# Load CIFAR-10 and scale pixel values from 0-255 to 0-1
(training_images, training_labels), (testing_images, testing_labels) = datasets.cifar10.load_data()
training_images, testing_images = training_images / 255, testing_images / 255

class_names = ['Plane', 'Car', 'Bird', 'Cat', 'Deer', 'Dog', 'Frog', 'Horse', 'Ship', 'Truck']

# Show the first 16 training images in a 4x4 grid with their class names
for i in range(16):
    plt.subplot(4, 4, i + 1)
    plt.xticks([])
    plt.yticks([])
    plt.imshow(training_images[i])
    # Labels have shape (N, 1), so [0] extracts the integer class index
    plt.xlabel(class_names[training_labels[i][0]])
plt.show()

# Reducing the Dataset Size (for Quick Training)
training_images = training_images[:30000]
training_labels = training_labels[:30000]
testing_images = testing_images[:5000]
testing_labels = testing_labels[:5000]

# Build the CNN
model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.Flatten())
model.add(layers.Dense(64, activation='relu'))
model.add(layers.Dense(10, activation='softmax'))

# Compile and train the CNN
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
model.fit(training_images, training_labels, epochs=10, validation_data=(testing_images, testing_labels))

# Evaluate on the test set
loss, accuracy = model.evaluate(testing_images, testing_labels)
print(f'Loss: {loss}')
print(f'Accuracy: {accuracy}')

# With Keras 3 (TensorFlow 2.16+), the filename must end in .keras instead
model.save('image_classifier.model')
```

1. Loading and Preprocessing Data

datasets.cifar10.load_data() loads the CIFAR-10 dataset, containing 60,000 images across 10 classes.

training_images, training_labels: Arrays of 50,000 training images and their labels.

testing_images, testing_labels: Arrays of 10,000 test images and their labels.

Each image pixel value is divided by 255 to normalize the data into a 0-1 range, which helps improve model training.
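A minimal NumPy sketch of this scaling step, using a tiny hypothetical array of pixel values in place of the real images. CIFAR-10 stores pixels as uint8; dividing by 255 produces floating-point values (float64 by default in NumPy), which is why some code converts to float32 first to save memory. The memory figures are plain arithmetic for the 50,000 training images of 32x32x3 values each.

```python
import numpy as np

# Hypothetical pixel values; CIFAR-10 images store pixels as uint8 (0-255)
pixels = np.array([0, 51, 128, 255], dtype=np.uint8)

scaled = pixels / 255                       # float64 values in [0, 1]
scaled32 = pixels.astype(np.float32) / 255  # same scaling, kept in float32
print(scaled.dtype, scaled.min(), scaled.max())

# Memory needed for all 50,000 training images of shape 32x32x3
values = 50_000 * 32 * 32 * 3
print(values * 1 / 1e6, "MB as uint8")    # 1 byte per value
print(values * 8 / 1e6, "MB as float64")  # 8 bytes per value
print(values * 4 / 1e6, "MB as float32")  # 4 bytes per value
```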

2. Displaying Sample Images

class_names lists the names of the CIFAR-10 classes.

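The code plots the first 16 training images in a 4x4 grid with plt.imshow, using plt.xlabel to show each image's class name below it. The label arrays have shape (N, 1), so training_labels[i][0] extracts the integer class index used to look up the name in class_names.

The code then keeps only the first 30,000 training images and the first 5,000 test images to shorten training time.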

3. Building the CNN Model

models.Sequential() creates a linear stack of layers. Each layer is added one by one in sequence, where the output of one layer is fed as the input to the next.

Adding Layers: Convolutional, Pooling, and Dense Layers

- Convolutional layers (Conv2D): Extract spatial features from images.

- Pooling layers (MaxPooling2D): Reduce spatial dimensions to control model complexity and capture dominant features.

- Dense layers (Dense): Fully connected layers that learn the final classification.

4. First Convolutional Layer:

model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))

- Purpose: This layer is designed to detect features from the images by applying filters that slide across the image.

- Parameters:

  - 32: The number of filters (kernels), each generating a 2D activation map.

  - (3, 3): The size of each filter, a 3x3 grid.

  - activation='relu': Uses the Rectified Linear Unit (ReLU) activation function, which introduces non-linearity by setting any negative values to zero.

  - input_shape=(32, 32, 3): Specifies the shape of each input image (32x32 pixels, with 3 color channels: RGB).
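As a worked check, assuming Keras's Conv2D defaults (stride 1, padding='valid', one bias per filter), this layer turns each 32x32x3 image into a 30x30x32 stack of feature maps and has 896 trainable parameters. The helper below is a hypothetical name, not a Keras function; it applies the same counting rule to all three convolutional layers in this model.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters of a Conv2D layer.

    Each filter has kernel_h * kernel_w * in_channels weights, plus one bias if enabled.
    """
    per_filter = kernel_h * kernel_w * in_channels + (1 if use_bias else 0)
    return per_filter * filters

# Output height/width with no padding and stride 1
out_size = 32 - 3 + 1

first = conv2d_params(3, 3, 3, 32)    # input has 3 RGB channels
second = conv2d_params(3, 3, 32, 64)  # input has 32 feature maps
third = conv2d_params(3, 3, 64, 64)   # input has 64 feature maps
print(out_size, first, second, third)  # 30 896 18496 36928
```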

5. First Max Pooling Layer

model.add(layers.MaxPooling2D((2, 2)))

Purpose: Reduces the spatial dimensions of the feature maps by a factor of 2 (in both height and width).

Parameters:

(2, 2): The pooling size, which takes the maximum value in each 2x2 block, effectively downsampling the feature map.
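A minimal NumPy sketch of 2x2 max pooling, assuming the MaxPooling2D defaults: the stride equals the pool size and padding='valid', so a trailing odd row or column is dropped. The function name max_pool_2x2 and the 4x4 input are illustrative.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; a trailing odd row or column is dropped."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_map[:h2 * 2, :w2 * 2]
    # Group values into 2x2 blocks, then keep the maximum of each block
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))

fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 1],
               [0, 1, 5, 6],
               [2, 2, 7, 3]])
print(max_pool_2x2(fm))
```

Each output value is the largest of one 2x2 block (4, 2, 2, and 7 here), so a 4x4 map becomes 2x2 while the strongest responses are kept.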

6. Repetition of Layers

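- As in step 4, two more convolutional layers are added, each with 64 filters of size 3x3 and ReLU activation. Only the first layer needs input_shape; later layers infer their input shape from the previous layer.

- As in step 5, one more max pooling layer is added between these two convolutional layers.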
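Stacking these layers shrinks the feature maps step by step. Assuming stride 1 and padding='valid' for the convolutions and the default stride of 2 for 2x2 pooling, the standard output-size formula floor((n + 2p - k) / s) + 1 (input size n, kernel or pool size k, padding p, stride s) gives the shapes traced below. The helper name output_size is illustrative.

```python
def output_size(n, k, stride=1, padding=0):
    """Spatial size after a convolution or pooling window along one dimension."""
    return (n + 2 * padding - k) // stride + 1

size = 32                              # input: 32x32x3
size = output_size(size, 3)            # Conv2D(32, (3, 3)): 30x30x32
size = output_size(size, 2, stride=2)  # MaxPooling2D((2, 2)): 15x15x32
size = output_size(size, 3)            # Conv2D(64, (3, 3)): 13x13x64
size = output_size(size, 2, stride=2)  # MaxPooling2D((2, 2)): 6x6x64
size = output_size(size, 3)            # Conv2D(64, (3, 3)): 4x4x64
flattened = size * size * 64           # values passed to Flatten
print(size, flattened)                 # 4 1024
```

With padding=1, a 3x3 convolution would keep the size unchanged (output_size(32, 3, padding=1) is 32), which is what Keras's padding='same' does for stride 1.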

7. Flatten Layer

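model.add(layers.Flatten())

Purpose: Converts the 3D output of the last convolutional layer (4x4x64 feature maps) into a 1D vector of 1,024 values so it can be fed into the dense layers. Flatten has no trainable parameters.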

8. First Dense Layer

model.add(layers.Dense(64, activation='relu'))

- 64: The number of units or neurons in this layer.

- activation='relu': Introduces non-linearity.
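A quick worked count, assuming the default bias term: a Dense layer has one weight per input-unit pair plus one bias per unit. With 1,024 flattened values feeding 64 units, this layer has 65,600 parameters. The helper name dense_params is illustrative.

```python
def dense_params(inputs, units, use_bias=True):
    """Weights (inputs x units) plus one bias per unit if enabled."""
    return inputs * units + (units if use_bias else 0)

hidden = dense_params(4 * 4 * 64, 64)  # Flatten output (1,024 values) -> Dense(64)
output = dense_params(64, 10)          # Dense(64) -> Dense(10) output layer

# Each Conv2D layer has (3*3*in_channels + 1) * filters parameters
conv_total = (3 * 3 * 3 + 1) * 32 + (3 * 3 * 32 + 1) * 64 + (3 * 3 * 64 + 1) * 64
print(hidden, output, conv_total + hidden + output)  # 65600 650 122570
```

Most of the model's parameters sit in this first dense layer, because every one of the 1,024 flattened values connects to every unit.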

9. Output Layer (Dense Layer with Softmax Activation)

model.add(layers.Dense(10, activation='softmax'))

- 10: The number of neurons corresponds to the number of target classes.

- activation='softmax': The softmax activation function converts the output scores into probabilities for each class. The class with the highest probability is selected as the model's prediction.
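A minimal pure-Python sketch of softmax followed by picking the most likely class. The raw scores are hypothetical values for a single image; in the model, they would be the outputs of the last Dense layer before the activation.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

class_names = ['Plane', 'Car', 'Bird', 'Cat', 'Deer', 'Dog', 'Frog', 'Horse', 'Ship', 'Truck']

# Hypothetical raw scores for one image, one per class
scores = [0.5, 1.0, 0.2, 3.0, 0.1, 2.0, 0.3, 0.4, 0.0, 0.6]
probs = softmax(scores)
predicted = class_names[probs.index(max(probs))]
print(round(sum(probs), 6), predicted)  # 1.0 Cat
```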

10. Compiling the Model

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

- optimizer='adam': Uses the Adam optimizer, a popular choice because it adapts the step size for each parameter during training.

- loss='sparse_categorical_crossentropy': A loss function for multi-class classification where the labels are integer class indices (here 0-9) rather than one-hot vectors.

- metrics=['accuracy']: Tracks accuracy during training and evaluation.
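For a single example, sparse categorical cross-entropy is the negative log of the probability the model assigns to the true class; Keras averages it over the examples in a batch. The pure-Python sketch below uses a hypothetical 3-class softmax output and shows that the "sparse" version with an integer label gives the same value as ordinary categorical cross-entropy with a one-hot label.

```python
import math

def sparse_categorical_crossentropy(probs, label):
    """Loss for one example: negative log probability of the true class index."""
    return -math.log(probs[label])

def categorical_crossentropy(probs, one_hot):
    """Same loss, but with the true class given as a one-hot vector."""
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs))

# Hypothetical softmax output over 3 classes; the true class is index 2
probs = [0.1, 0.2, 0.7]
print(sparse_categorical_crossentropy(probs, 2))    # about 0.357 (confident and correct)
print(sparse_categorical_crossentropy(probs, 0))    # about 2.303 (confident and wrong)
print(categorical_crossentropy(probs, [0, 0, 1]))  # same as the integer-label version
```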

11. Model Training

model.fit(training_images, training_labels, epochs=10, validation_data=(testing_images, testing_labels))

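- training_images and training_labels: The input images and their corresponding labels for training.

- epochs=10: The number of complete passes through the training dataset.

- validation_data=(testing_images, testing_labels): The data used to measure the model's performance on unseen images at the end of each epoch. Note that this code reuses the test set for validation, so the final model.evaluate() call reports results on the same images that were monitored during training.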
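When fit() is called without a batch_size argument, as here, Keras uses batches of 32 samples. A quick calculation of how many gradient updates that means for the trimmed 30,000-image training set:

```python
import math

train_samples = 30_000  # training set after trimming
batch_size = 32         # Keras default when batch_size is not passed to fit()
epochs = 10

steps_per_epoch = math.ceil(train_samples / batch_size)  # the last batch is partial
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)  # 938 9380
```

The last batch of each epoch holds only 16 images (30,000 - 937 x 32), which is why the count rounds up.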

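12. Saving the Model

model.save('image_classifier.model')

This writes the trained model to disk so it can be reloaded later without retraining. With older TensorFlow 2.x releases, a path without a recognized extension like this one is saved in the TensorFlow SavedModel format. Keras 3 (the default from TensorFlow 2.16) only accepts filenames ending in .keras (or the legacy .h5), so on newer versions use a name such as 'image_classifier.keras' here and in the matching load_model() call.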

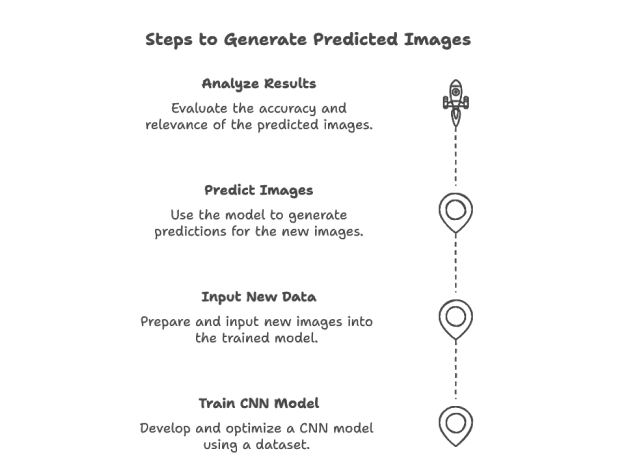

Step 2: Predicting the Image

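```python
import numpy as np
from tensorflow.keras import models

# Analyze the image
def checkImage(image):
    """Return the predicted class name for one 32x32x3 RGB image with 0-255 pixel values."""
    class_names = ['Plane', 'Car', 'Bird', 'Cat', 'Deer', 'Dog', 'Frog', 'Horse', 'Ship', 'Truck']
    # Reloads the saved model on every call; with Keras 3 use 'image_classifier.keras'
    model = models.load_model('image_classifier.model')
    # Wrap the image in a batch of one and scale pixels to 0-1, matching training
    prediction = model.predict(np.array([image]) / 255)
    # Index of the highest class probability
    index = np.argmax(prediction)
    return class_names[index]
```

How does predicting the image work?

checkImage() loads the model saved in Step 1 and expects a single image in the same format as the training data: a 32x32 array with 3 RGB channels. Because model.predict() works on batches, np.array([image]) wraps the image into a batch of one with shape (1, 32, 32, 3), and dividing by 255 applies the same normalization used during training. The model returns 10 softmax probabilities, one per class; np.argmax() finds the position of the largest one, and that index is used to look up the matching name in class_names.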
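To see the array shapes involved without a trained model, here is a NumPy-only sketch. The uniform gray image and the prediction row are made-up stand-ins for a real image and for the output of model.predict(); only the batching, scaling, and argmax lookup mirror checkImage().

```python
import numpy as np

class_names = ['Plane', 'Car', 'Bird', 'Cat', 'Deer', 'Dog', 'Frog', 'Horse', 'Ship', 'Truck']

# Hypothetical 32x32 RGB image with 0-255 pixel values
image = np.full((32, 32, 3), 200, dtype=np.uint8)

batch = np.array([image]) / 255  # shape (1, 32, 32, 3), values in [0, 1]
print(batch.shape)

# Made-up stand-in for model.predict(batch): one row of 10 class probabilities
prediction = np.array([[0.01, 0.02, 0.05, 0.10, 0.02, 0.03, 0.70, 0.03, 0.02, 0.02]])
index = np.argmax(prediction)  # flat index of the largest value
print(class_names[index])      # Frog
```

Because the batch holds a single image, the flat index returned by np.argmax() without an axis argument is the class index directly; for larger batches, np.argmax(prediction, axis=1) would give one index per image.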

Conclusion

In this blog, we've walked through implementing a Convolutional Neural Network using Python and essential libraries like TensorFlow, NumPy, and Matplotlib. We covered data preparation, model building, training, evaluation, and predicting the class of a single image. CNNs are powerful tools for image processing tasks, and with a well-chosen architecture and training setup, they can achieve strong results. Whether you're working on image classification, object detection, or other computer vision tasks, these fundamental concepts will serve as your building blocks for more advanced applications.