Building High-Performance Machine Learning Models in Rust

Introduction

Rust is gaining popularity in the machine learning (ML) ecosystem due to its focus on performance, safety, and concurrency. While Python remains dominant, Rust offers compelling advantages for high-performance ML applications, particularly for real-time inference, embedded systems, and performance-critical workloads.

This blog explores how to build high-performance ML models in Rust, discussing key libraries, optimization techniques, and integration with existing ML frameworks.

Why Choose Rust for Machine Learning?


1. Performance Without Sacrifices

Rust provides C/C++-level performance without sacrificing memory safety. Its zero-cost abstractions principle means you can write high-level code that compiles down to efficient machine instructions. For computationally intensive ML tasks, this translates to faster training and inference times.
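
To make the zero-cost abstraction idea concrete, here is a small sketch using only the standard library. The function names dot_iter and dot_loop are illustrative, not from any crate. Both compute the same dot product; in optimized builds the iterator version is generally expected to compile to code comparable to the hand-written loop, though the exact output depends on the compiler version and flags.

```rust
/// Dot product written with high-level iterator adapters.
fn dot_iter(a: &[f64], b: &[f64]) -> f64 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// The same computation as an explicit indexed loop.
fn dot_loop(a: &[f64], b: &[f64]) -> f64 {
    let n = a.len().min(b.len());
    let mut acc = 0.0;
    for i in 0..n {
        acc += a[i] * b[i];
    }
    acc
}

fn main() {
    let a = [1.0, 2.0, 3.0];
    let b = [4.0, 5.0, 6.0];
    println!("iterator: {}, loop: {}", dot_iter(&a, &b), dot_loop(&a, &b));
}
```

Building with cargo build --release and comparing the two functions in a benchmark is a reasonable way to check the claim on your own hardware.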

2. Memory Safety Guarantees

In safe code, Rust's ownership model and borrow checker eliminate entire classes of bugs such as null pointer dereferences, use-after-free, and data races. This matters for production ML systems that handle sensitive data or make important decisions.
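
As a small illustration of how the type system replaces null checks, the sketch below picks the highest-scoring class from a slice of model outputs. The helper name argmax is hypothetical. Because the result is an Option, the empty-input case has to be handled before the index can be used.

```rust
/// Index of the largest score, or `None` when the slice is empty.
fn argmax(scores: &[f64]) -> Option<usize> {
    scores
        .iter()
        .enumerate()
        .max_by(|a, b| a.1.total_cmp(b.1))
        .map(|(index, _)| index)
}

fn main() {
    let probabilities = vec![0.1, 0.7, 0.2];
    // The compiler forces both cases to be handled; there is no null to forget.
    match argmax(&probabilities) {
        Some(class) => println!("predicted class {class}"),
        None => println!("no scores to rank"),
    }
}
```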

3. Seamless Integration

Rust can easily interface with C/C++ libraries through its Foreign Function Interface (FFI), allowing you to leverage existing high-performance ML libraries while gradually migrating to native Rust implementations.
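
The following is a minimal FFI sketch, not a recipe for any particular ML library. It declares tanh from the platform's C math library and wraps it in a safe function; c_tanh is a hypothetical wrapper name. It assumes a target where the C math library is linked by default (the case for common Linux, macOS, and Windows toolchains) and Rust 1.82 or later for the unsafe extern syntax.

```rust
use std::ffi::c_double;

// Declaration of a function provided by the C math library.
// Assumption: the library is linked by default on the target platform.
unsafe extern "C" {
    fn tanh(x: c_double) -> c_double;
}

/// Safe wrapper: `tanh` has no preconditions, so calling it is sound for any input.
fn c_tanh(x: f64) -> f64 {
    unsafe { tanh(x) }
}

fn main() {
    println!("tanh(0.5) computed by C: {}", c_tanh(0.5));
}
```

The same pattern, a thin unsafe declaration behind a safe wrapper, is how bindings to larger C and C++ libraries are usually structured.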

4. Parallelism Without Fear

Rust's ownership model makes concurrent programming much safer. This is particularly valuable for ML workloads that benefit from parallelization across multiple cores or machines.
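
A sketch of what this looks like with only the standard library: scoped threads each borrow a disjoint chunk of the input, and the compiler verifies that no thread outlives the data it reads. The function name parallel_sum_squares and the choice of four threads are illustrative assumptions.

```rust
use std::thread;

/// Sum of squares computed over up to `n_threads` chunks in parallel.
fn parallel_sum_squares(data: &[f64], n_threads: usize) -> f64 {
    let n_threads = n_threads.max(1);
    // Ceiling division, with a floor of 1 so `chunks` never receives 0.
    let chunk_len = ((data.len() + n_threads - 1) / n_threads).max(1);

    thread::scope(|scope| {
        // Spawn one thread per chunk; each only borrows its own slice.
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| scope.spawn(move || chunk.iter().map(|x| x * x).sum::<f64>()))
            .collect();

        // Join every thread and combine the partial sums.
        handles
            .into_iter()
            .map(|handle| handle.join().unwrap())
            .sum::<f64>()
    })
}

fn main() {
    let data: Vec<f64> = (1..=1_000).map(|x| x as f64).collect();
    println!("sum of squares = {}", parallel_sum_squares(&data, 4));
}
```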

Key Rust Libraries for Machine Learning


1. ndarray

- Provides efficient multi-dimensional arrays and mathematical operations, similar to NumPy.

- Supports broadcasting, slicing, and parallel computations.

2. Rust Machine Learning (Linfa)

- A modular ML framework with algorithms for classification, clustering, and regression.

- Inspired by Python’s scikit-learn.

3. tch-rs (Torch for Rust)

- Rust bindings for PyTorch’s C++ backend (LibTorch).

- Enables training and inference using PyTorch models with Rust’s performance benefits.

4. Autumn

- A deep learning library designed for performance and ease of use.

- Supports GPU acceleration with CUDA.

5. Burn

- A modular, extensible ML framework for Rust.

- Aims to provide GPU acceleration, automatic differentiation, and deep learning capabilities.

Step 1: Setting Up the Environment

Install Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

Create a new Rust project:

cargo new rust-ml

cd rust-ml

Add dependencies in Cargo.toml:

[dependencies]

ndarray = "0.15"

linfa = "0.7"

linfa-logistic = "0.7"

Step 2: Implementing a Simple Logistic Regression Model
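
Before the library version, it may help to see what fitting a binary logistic regression involves. The sketch below uses only the standard library and plain batch gradient descent on the mean log-loss. The learning rate, epoch count, and function names are assumptions chosen for illustration, and it is not a description of how linfa solves the problem internally. It uses the same four training points as the linfa example that follows.

```rust
fn sigmoid(z: f64) -> f64 {
    1.0 / (1.0 + (-z).exp())
}

/// Mean log-loss of the model (w, b) on the data.
fn mean_log_loss(xs: &[[f64; 2]], ys: &[f64], w: &[f64; 2], b: f64) -> f64 {
    let total: f64 = xs
        .iter()
        .zip(ys)
        .map(|(x, &y)| {
            let p = sigmoid(w[0] * x[0] + w[1] * x[1] + b);
            -(y * p.ln() + (1.0 - y) * (1.0 - p).ln())
        })
        .sum();
    total / xs.len() as f64
}

/// Fit weights and intercept by batch gradient descent.
fn fit(xs: &[[f64; 2]], ys: &[f64], lr: f64, epochs: usize) -> ([f64; 2], f64) {
    let (mut w, mut b) = ([0.0; 2], 0.0);
    let n = xs.len() as f64;
    for _ in 0..epochs {
        // Accumulate the gradient of the log-loss over the whole dataset.
        let (mut gw, mut gb) = ([0.0; 2], 0.0);
        for (x, &y) in xs.iter().zip(ys) {
            let err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y;
            gw[0] += err * x[0];
            gw[1] += err * x[1];
            gb += err;
        }
        w[0] -= lr * gw[0] / n;
        w[1] -= lr * gw[1] / n;
        b -= lr * gb / n;
    }
    (w, b)
}

/// Predict class 1 when the estimated probability is at least 0.5.
fn predict(w: &[f64; 2], b: f64, x: &[f64; 2]) -> u8 {
    (sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5) as u8
}

fn main() {
    let xs = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.5], [5.0, 5.0]];
    let ys = [0.0, 0.0, 1.0, 1.0];
    let (w, b) = fit(&xs, &ys, 0.1, 2000);
    println!("log-loss after training: {:.4}", mean_log_loss(&xs, &ys, &w, b));
    println!("predicted class for [4.0, 4.5]: {}", predict(&w, b, &[4.0, 4.5]));
}
```

A library such as linfa adds regularization, a more robust solver, and dataset handling on top of this basic idea.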

use linfa::prelude::*;
use linfa_logistic::LogisticRegression;
use ndarray::{Array1, Array2};

fn main() {
    // Create dataset (X: features, y: labels)
    let x = Array2::from_shape_vec((4, 2), vec![1.0, 2.0, 2.0, 3.0, 3.0, 3.5, 5.0, 5.0]).unwrap();
    let y = Array1::from_vec(vec![0, 0, 1, 1]);

    // Train logistic regression model
    let model = LogisticRegression::new().fit(&Dataset::new(x, y)).unwrap();

    // Predict on new data
    let test_x = Array2::from_shape_vec((1, 2), vec![4.0, 4.5]).unwrap();
    let prediction = model.predict(&test_x);

    println!("Predicted class: {:?}", prediction);
}

Step 3: Running the Model

Compile and run the project:

cargo run

Optimizing ML Performance in Rust

1. Leverage SIMD and Parallelism

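Use the rayon crate for data-parallel computations across CPU cores; SIMD within each core is usually left to the compiler's auto-vectorization in release builds: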

[dependencies]

rayon = "1.7"

Example usage:

use rayon::prelude::*;

fn main() {
    let data: Vec<f64> = (0..1_000_000).map(|x| x as f64).collect();
    let sum: f64 = data.par_iter().sum();
    println!("Sum: {sum}");
}

2. Use Efficient Data Structures

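- Prefer ndarray over nested Vec<Vec<T>> for numerical operations; a single contiguous buffer is friendlier to the CPU cache than many separate row allocations.

- Use smallvec for short vectors that usually fit inline, which avoids a heap allocation in the common case.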
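
To show why a contiguous layout helps, here is a rough standard-library sketch of a row-major matrix backed by a single Vec<f64>. The Matrix type and its methods are hypothetical and far simpler than ndarray; the point is only that every row lives in one allocation and element (r, c) sits at index r * cols + c.

```rust
/// Row-major matrix stored in one contiguous buffer.
struct Matrix {
    rows: usize,
    cols: usize,
    data: Vec<f64>,
}

impl Matrix {
    fn new(rows: usize, cols: usize, data: Vec<f64>) -> Self {
        assert!(cols > 0, "matrix needs at least one column");
        assert_eq!(data.len(), rows * cols, "buffer length must be rows * cols");
        Matrix { rows, cols, data }
    }

    /// Element at row `r`, column `c`.
    fn get(&self, r: usize, c: usize) -> f64 {
        assert!(r < self.rows && c < self.cols, "index out of bounds");
        self.data[r * self.cols + c]
    }

    /// Matrix-vector product; each row is a contiguous slice of the buffer.
    fn matvec(&self, v: &[f64]) -> Vec<f64> {
        assert_eq!(v.len(), self.cols, "vector length must match column count");
        self.data
            .chunks_exact(self.cols)
            .map(|row| row.iter().zip(v).map(|(a, b)| a * b).sum::<f64>())
            .collect()
    }
}

fn main() {
    let m = Matrix::new(2, 3, vec![1.0, 2.0, 3.0, 4.0, 5.0, 6.0]);
    println!("m[1][2] = {}", m.get(1, 2));
    println!("m * [1, 0, 1] = {:?}", m.matvec(&[1.0, 0.0, 1.0]));
}
```

With Vec<Vec<f64>>, each row would be a separate heap allocation, so walking the matrix jumps between unrelated memory regions.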

3. Optimize Memory Usage

- Use stack allocation where possible (arrayvec crate).

- Minimize unnecessary data copies via Rust’s borrowing mechanism.
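
Both points can be sketched with the standard library alone. In the example below, a fixed-size array lives on the stack, and the helper functions (mean and center_in_place, both hypothetical names) take borrowed slices, so no data is copied when they are called.

```rust
/// Mean of a borrowed slice; the caller keeps ownership and nothing is copied.
fn mean(values: &[f64]) -> f64 {
    values.iter().sum::<f64>() / values.len() as f64
}

/// Centre a feature column in place through a mutable borrow.
fn center_in_place(values: &mut [f64]) {
    if values.is_empty() {
        return;
    }
    let m = mean(values);
    for v in values.iter_mut() {
        *v -= m;
    }
}

fn main() {
    // A fixed-size array is stored on the stack; no heap allocation is needed.
    let mut column = [1.0, 2.0, 3.0];
    center_in_place(&mut column);
    println!("centred column: {:?}", column);
}
```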

Deploying ML Models in Rust

1. Convert Models from Python to Rust

Train in Python (TensorFlow/PyTorch), then export to ONNX.

Load the model in Rust using the ort (ONNX Runtime) crate:

[dependencies]

ort = "1.15"

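use ort::{Environment, SessionBuilder};

fn main() {
    // ort 1.x shares one environment between sessions through an Arc.
    let env = Environment::builder().build().unwrap().into_arc();

    // Build a session and load the exported ONNX model from disk.
    let _session = SessionBuilder::new(&env)
        .unwrap()
        .with_model_from_file("model.onnx")
        .unwrap();

    println!("ONNX Model Loaded!");
}

2. Use Rust for Real-Time Inference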

- Rust’s speed and low latency make it ideal for edge computing and web applications.

- Use WebAssembly (wasm-bindgen) to deploy Rust ML models in the browser.
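
For real-time use, it is worth measuring per-call latency rather than only average throughput. The sketch below is one way to do that with the standard library. The names measure_latency and percentile are hypothetical, and the closure in main is a stand-in for a real model call, not an actual inference API.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

/// Time `iterations` calls of `run_once` and return one latency per call.
fn measure_latency<F: FnMut()>(mut run_once: F, iterations: usize) -> Vec<Duration> {
    (0..iterations)
        .map(|_| {
            let start = Instant::now();
            run_once();
            start.elapsed()
        })
        .collect()
}

/// Percentile of the samples (0 to 100), taken at the rounded rank of the sorted list.
fn percentile(samples: &[Duration], pct: f64) -> Option<Duration> {
    if samples.is_empty() {
        return None;
    }
    let mut sorted = samples.to_vec();
    sorted.sort();
    let rank = ((pct / 100.0) * (sorted.len() - 1) as f64).round() as usize;
    Some(sorted[rank.min(sorted.len() - 1)])
}

fn main() {
    // Stand-in for a model: a dot product over a fixed input (assumption).
    let weights = vec![0.5_f32; 1024];
    let input = vec![1.0_f32; 1024];
    let latencies = measure_latency(
        || {
            let score: f32 = weights.iter().zip(&input).map(|(w, x)| w * x).sum();
            black_box(score); // keep the optimizer from removing the work
        },
        1_000,
    );
    println!("p50: {:?}", percentile(&latencies, 50.0));
    println!("p99: {:?}", percentile(&latencies, 99.0));
}
```

Tail percentiles such as p99 tend to matter more than the mean for latency-sensitive services, since a slow request is what a user actually notices.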

Challenges and Solutions

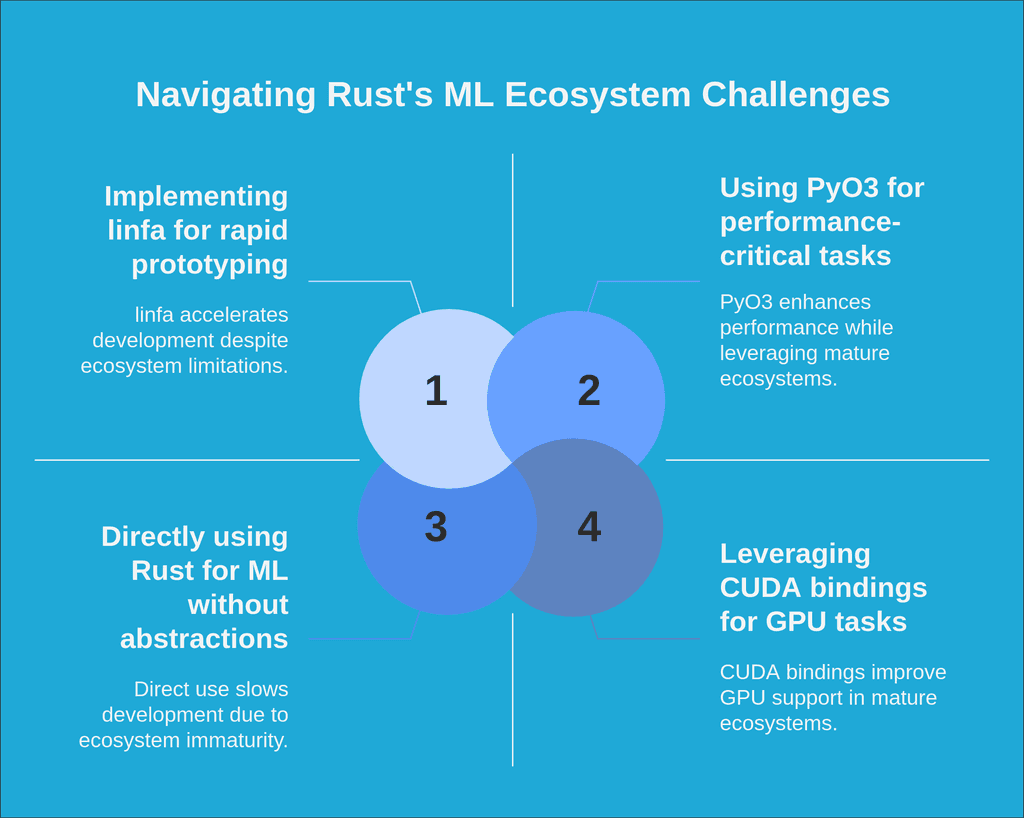

1. Challenge 1: Ecosystem Maturity

Problem: Rust's ML ecosystem is less mature than Python's.

Solution: Use Rust for performance-critical components while interfacing with established libraries through FFI or PyO3.

2. Challenge 2: Development Speed

Problem: Stricter type system and ownership rules can slow down initial development.

Solution: Use higher-level abstractions like linfa for common ML tasks, and reserve low-level optimizations for critical paths.

3. Challenge 3: GPU Support

Problem: GPU support in Rust is still evolving.

Solution: Use bindings to established GPU platforms such as CUDA, or target WebGPU through libraries like wgpu.

Conclusion

Rust presents a compelling option for high-performance machine learning applications, particularly where performance, safety, and deployment efficiency are priorities. While the ecosystem is still maturing, the foundations are strong, and the language's inherent advantages make it well-suited for production ML workloads.

As the ecosystem continues to evolve, Rust is poised to become an increasingly important player in the ML landscape, offering an excellent alternative when Python's performance limitations become apparent.

For those looking to get started with ML in Rust, focusing on specific performance-critical components while maintaining interoperability with existing Python codebases offers the best path forward in the near term.